What is Kubernetes?

Learn what Kubernetes is, how Kubernetes works, and how it can benefit your business applications.

Are you looking for a system that automates the operational management of your applications? If so, Kubernetes may be the right choice. Combined with AppMaster, it lets you host applications built on a no-code platform while taking advantage of Kubernetes features. But first, it is essential to know what Kubernetes is, why it matters, and how it works.

Kubernetes is also referred to by the short forms K8s or Kube. It automates much of the operational work of running an application: rolling out changes, scaling up or down to meet demand, and keeping an eye on the health of your application all get easier with Kubernetes. Kubernetes is open-source software for automating the deployment and management of containerized applications. Containers, which bundle an application with its dependencies and configuration, are often used to build modern applications.

What are Kubernetes clusters?

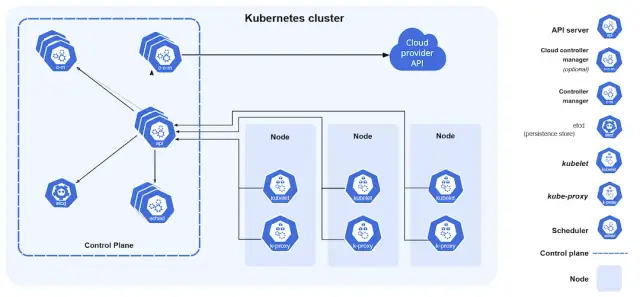

A Kubernetes cluster is a group of node machines used to run and scale containerized applications. A cluster must contain a control plane and one or more nodes, which are compute machines. The control plane maintains the cluster's desired state, including which applications are running and which container images they use. Nodes run the applications and workloads.

The ability to schedule and manage containers across a group of machines, whether virtual (in the cloud) or physical (on-premises), lies at the root of Kubernetes' benefit. Containers in Kubernetes aren't tied to specific machines. Rather, they are abstracted across the cluster.

Who contributes to Kubernetes?

Engineers at Google created and initially developed Kubernetes. Google was one of the pioneers of Linux container technology and has openly discussed how everything within the company runs in containers. (This technology underlies Google's cloud offerings.)

Google's internal platform, Borg, powers over 2 billion container deployments weekly. Borg was Kubernetes' forerunner, and much of the technology behind Kubernetes was developed using the lessons learned while building Borg.

What can you do with Kubernetes?

With Kubernetes, you can orchestrate containers across multiple hosts, automate cluster operations that would be impractical to handle manually, and optimize resource usage by making better use of your infrastructure.

Rollouts and rollbacks

A Kubernetes deployment lets you roll out changes to your application's code or configuration gradually while monitoring application health, so that it does not terminate all of your instances at once. If something goes wrong, Kubernetes rolls the change back. You can also take advantage of a growing ecosystem of deployment solutions.
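The rollout-and-rollback idea can be sketched as a toy simulation. This is a minimal Python sketch, not how Kubernetes is implemented: the `rolling_update` function, the `check` health probe, and the version strings are hypothetical names chosen for illustration.

```python
from typing import Callable, List


def rolling_update(
    replicas: List[str],
    new_version: str,
    is_healthy: Callable[[str], bool],
) -> List[str]:
    """Replace replicas one at a time; restore the original set on failure."""
    original = list(replicas)  # snapshot used for rollback
    current = list(replicas)
    for i in range(len(current)):
        current[i] = new_version
        if not is_healthy(new_version):
            # Health check failed: abandon the rollout and roll back.
            return original
    return current


# Hypothetical health check: pretend version "v3" is broken.
def check(version: str) -> bool:
    return version != "v3"


print(rolling_update(["v1", "v1", "v1"], "v2", check))  # ['v2', 'v2', 'v2']
print(rolling_update(["v1", "v1", "v1"], "v3", check))  # ['v1', 'v1', 'v1']
```

Because only one replica changes per step, the remaining replicas keep serving while the new version is checked.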

Load balancing

Your application doesn't need to be changed to use an unfamiliar service discovery mechanism. Kubernetes gives Pods their own IP addresses and a single DNS name for a set of Pods, and it can load-balance across them.
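To picture one stable name in front of several Pod IPs, here is a small Python sketch. It assumes a simple round-robin policy, which is only one possible strategy and not necessarily what a given cluster uses. The `ServiceRegistry` class, the service name, and the IP addresses are invented for this example.

```python
from itertools import cycle
from typing import Dict, Iterator, List


class ServiceRegistry:
    """Toy model: one stable service name in front of many Pod IPs."""

    def __init__(self) -> None:
        self._backends: Dict[str, Iterator[str]] = {}

    def register(self, name: str, pod_ips: List[str]) -> None:
        # cycle() hands out the IPs in order, forever (round robin).
        self._backends[name] = cycle(pod_ips)

    def resolve(self, name: str) -> str:
        return next(self._backends[name])


registry = ServiceRegistry()
registry.register("shop.default.svc", ["10.0.0.4", "10.0.0.5", "10.0.0.6"])
picked = [registry.resolve("shop.default.svc") for _ in range(4)]
print(picked)  # ['10.0.0.4', '10.0.0.5', '10.0.0.6', '10.0.0.4']
```

The client only ever knows the name, so Pods can come and go without any change to the application.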

Storage orchestration

Automatically mounts the storage system of your choice, whether local storage, a network storage system such as iSCSI, Cinder, NFS, or Ceph, or a public cloud provider such as AWS.

Secret and configuration management

Deploy and update secrets and application configuration without rebuilding your image or exposing secrets in your stack configuration.
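On the application side, this usually means reading settings at startup instead of baking them into the image. Kubernetes can expose configuration and secrets to a container as environment variables, and the Python sketch below reads them that way. The variable names `DB_HOST` and `DB_PASSWORD` and the `load_settings` helper are assumptions made up for this example.

```python
import os
from typing import Mapping


def load_settings(env: Mapping[str, str] = os.environ) -> dict:
    """Read settings from the environment rather than from the image."""
    return {
        # Optional setting with a default value.
        "db_host": env.get("DB_HOST", "localhost"),
        # Required secret: raises KeyError if it was not injected.
        "db_password": env["DB_PASSWORD"],
    }


# Pass a plain dict here so the example runs anywhere.
settings = load_settings({"DB_HOST": "db.internal", "DB_PASSWORD": "example-only"})
print(settings["db_host"])  # db.internal
```

The same image can then run in test and production with different values, and a secret can be rotated without a rebuild.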

Bin packing

Automatically places containers based on their resource requirements and other constraints, without sacrificing availability. You can mix critical and best-effort workloads to increase utilization and save even more resources.
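Bin packing is easier to see with numbers. The Python sketch below uses the classic first-fit decreasing heuristic, which is a textbook stand-in and not the algorithm the Kubernetes scheduler actually runs. The `pack` function, the workload names, and the CPU figures (in millicores) are hypothetical.

```python
from typing import Dict, List


def pack(pods: Dict[str, int], node_capacity: int) -> List[List[str]]:
    """First-fit decreasing: place each pod on the first node with room."""
    nodes: List[List[str]] = []  # pod names placed on each node
    free: List[int] = []         # remaining millicores on each node
    # Largest requests first, so big pods are not left without a home.
    for name, cpu in sorted(pods.items(), key=lambda kv: kv[1], reverse=True):
        if cpu > node_capacity:
            raise ValueError(f"{name} does not fit on any node")
        for i, room in enumerate(free):
            if cpu <= room:
                nodes[i].append(name)
                free[i] -= cpu
                break
        else:
            # No existing node has room: open a new one.
            nodes.append([name])
            free.append(node_capacity - cpu)
    return nodes


requests = {"api": 600, "worker": 500, "cache": 400, "cron": 300, "web": 200}
print(pack(requests, node_capacity=1000))
# [['api', 'cache'], ['worker', 'cron', 'web']]
```

Five workloads fit on two fully used nodes here, which is the kind of saving the paragraph above refers to.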

Batch execution

In addition to services, Kubernetes can manage your batch and CI workloads, replacing containers that fail if required.
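Replacing failed containers in a batch workload boils down to a bounded retry. The following Python sketch illustrates that idea only. The `run_job` function is hypothetical, and its `backoff_limit` parameter is loosely modeled on the retry limit a Kubernetes Job can be given.

```python
from typing import Callable


def run_job(task: Callable[[], bool], backoff_limit: int = 3) -> int:
    """Run task until it succeeds; return the number of attempts used."""
    # One initial attempt plus up to backoff_limit retries.
    for attempt in range(1, backoff_limit + 2):
        if task():
            return attempt
    raise RuntimeError(f"job failed after {backoff_limit} retries")


# Hypothetical flaky task: fails twice, then succeeds.
outcomes = iter([False, False, True])
print(run_job(lambda: next(outcomes)))  # 3
```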

Horizontal scaling

You can quickly scale your application up or down using a command, a user interface, or automatically based on CPU usage.
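For the automatic case, the Horizontal Pod Autoscaler documentation describes the core calculation as the current replica count multiplied by the ratio of the current metric to the target metric, rounded up. The Python sketch below applies that formula and clamps the result. The function name and the default minimum and maximum are assumptions for illustration, and details such as tolerances and stabilization windows are left out.

```python
import math


def desired_replicas(
    current: int,
    cpu_now: float,
    cpu_target: float,
    min_replicas: int = 1,
    max_replicas: int = 10,
) -> int:
    """ceil(current * cpu_now / cpu_target), clamped to [min, max]."""
    raw = math.ceil(current * cpu_now / cpu_target)
    return max(min_replicas, min(max_replicas, raw))


print(desired_replicas(4, cpu_now=90, cpu_target=60))  # 6
print(desired_replicas(4, cpu_now=30, cpu_target=60))  # 2
```

With 4 replicas running at 90% CPU against a 60% target, the formula asks for 6 replicas. At 30% it scales down to 2.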

Self-healing

Kubernetes restarts failed containers, replaces and reschedules containers when nodes die, kills containers that don't respond to your user-defined health check, and doesn't advertise containers to clients until they are ready to serve.
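A toy model makes the two ideas here concrete: failed containers are restarted, and only ready containers receive traffic. This Python sketch is an illustration under simplified assumptions. The `Container` class and the `heal` function are hypothetical and do not correspond to any Kubernetes API.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Container:
    name: str
    alive: bool = True   # the process is running
    ready: bool = False  # it has passed its readiness check
    restarts: int = 0


def heal(containers: List[Container]) -> List[str]:
    """Restart dead containers; return the names safe to send traffic to."""
    for c in containers:
        if not c.alive:
            c.alive = True
            c.ready = False  # must pass readiness again before serving
            c.restarts += 1
    return [c.name for c in containers if c.alive and c.ready]


pods = [
    Container("web-1", alive=True, ready=True),
    Container("web-2", alive=False, ready=True),
]
print(heal(pods))        # ['web-1']
print(pods[1].restarts)  # 1
```

Note that `web-2` is running again after the call but is not yet advertised, because it has not passed its readiness check.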

Designed for extensibility

Due to its high extensibility, you can add features to your Kubernetes cluster without altering the upstream source code.

IPv4 and IPv6 dual-stack

Allocation of IPv4 and IPv6 addresses to Pods and Services.

How does Kubernetes work?

A working Kubernetes deployment is called a cluster. A cluster can be seen as two distinct parts: the control plane and the compute machines, or nodes. Each node, which can be a physical or virtual machine, is its own Linux environment. Each node runs pods, which are made up of containers. The control plane is responsible for maintaining the cluster's desired state, including which applications are running and which container images they use. The compute machines actually run the containerized applications and workloads.

Kubernetes runs on top of an operating system (such as Enterprise Linux) and interacts with pods of containers running on the nodes. The Kubernetes control plane takes commands from an administrator (or DevOps team) and relays them to the compute machines. This handoff works with a variety of services to decide automatically which node is best suited for the task. It then allocates resources and assigns the requested work to pods on that node.
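Choosing the most appropriate node can be thought of as two steps: filter out nodes that cannot run the work, then score the rest. The Python sketch below shows that shape. The scoring rule (prefer the node with the most free CPU) is an assumption picked for simplicity, and `pick_node` and the node names are hypothetical.

```python
from typing import Dict, Optional


def pick_node(free_cpu: Dict[str, int], request: int) -> Optional[str]:
    """Return the best node for a pod, or None if nothing fits."""
    # Filter: keep only nodes with enough free CPU (millicores).
    feasible = {node: free for node, free in free_cpu.items() if free >= request}
    if not feasible:
        return None
    # Score: assumed policy, prefer the node with the most free CPU.
    return max(feasible, key=lambda node: feasible[node])


cluster = {"node-a": 300, "node-b": 1200, "node-c": 800}
print(pick_node(cluster, request=500))   # node-b
print(pick_node(cluster, request=2000))  # None
```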

The desired state of a Kubernetes cluster specifies which workloads or apps should be running, along with the images they should use, the resources they should have access to, and other similar configuration details. From an infrastructure point of view, little changes in how you manage containers. Your control simply happens at a higher level, so you can manage apps without micromanaging every individual container or node.
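The desired-state idea comes down to comparing what you asked for with what is actually running and acting on the difference. Here is a minimal Python sketch of that comparison. The `reconcile` function, the app names, and the action strings are invented for illustration.

```python
from typing import Dict, List


def reconcile(desired: Dict[str, int], actual: Dict[str, int]) -> List[str]:
    """List the actions needed to move the actual state to the desired state."""
    actions: List[str] = []
    for app in sorted(set(desired) | set(actual)):
        diff = desired.get(app, 0) - actual.get(app, 0)
        if diff > 0:
            actions.append(f"start {diff} x {app}")
        elif diff < 0:
            actions.append(f"stop {-diff} x {app}")
    return actions


desired = {"web": 3, "api": 2}
actual = {"web": 1, "api": 2, "old-job": 1}
print(reconcile(desired, actual))
# ['stop 1 x old-job', 'start 2 x web']
```

You only describe the end result. Working out the individual start and stop steps is left to the system, which is what managing at a higher level means in practice.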

You are responsible for defining Kubernetes' nodes, pods, and the containers that reside inside them. Kubernetes handles orchestrating the containers. Where you run Kubernetes is up to you: on physical servers, virtual machines, public clouds, private clouds, or hybrid clouds. The fact that Kubernetes runs on many types of infrastructure is one of its main benefits.

Is Kubernetes the same as Docker?

Docker is a set of software development tools used to build, share, and run individual containers. Kubernetes is a solution for running containerized apps at scale. Think of containers as standardized packaging for microservices that contains all the necessary dependencies and application code. Docker is in charge of building these containerized apps, which can run anywhere a container can run, including local servers, hybrid clouds, laptops, and even edge devices.

Modern applications consist of many containers. Kubernetes is in charge of running them in production. Because replicating containers is simple, containerized applications can auto-scale, expanding or reducing processing capacity to meet user demand. Most of the time, Kubernetes and Docker are complementary technologies. However, Docker also offers its own solution for running containerized applications at scale, known as Docker Swarm.

What is Kubernetes-native infrastructure?

The collection of resources (including servers, physical or virtual machines, hybrid cloud platforms, and more) that underpin a Kubernetes environment is known as Kubernetes infrastructure. Container orchestration is the process of automating many of the operational tasks in a container's lifecycle, from deployment to retirement. Kubernetes is one popular open-source platform for this.

Under the hood, Kubernetes' infrastructure and architecture are based on the idea of a cluster, which is a collection of machines referred to as "nodes" in Kubernetes terminology. Kubernetes allows you to deploy containerized workloads onto the cluster. Nodes are the machines that execute your containerized workloads, and they can be physical or virtual. Every Kubernetes cluster contains a controller node and at least one worker node, although a cluster often has many worker nodes.

The "pod" is another crucial Kubernetes notion; according to the official documentation, it's the smallest deployable unit and runs on the cluster's nodes. Put another way, the pods stand in for the various parts of your application. Although it can occasionally run more than one container, a pod typically only runs one.

The control plane is yet another essential component of the Kubernetes cluster architecture. It consists of the API server and four additional components that manage your apps and nodes (or machines) according to the desired state you specify.

What are the benefits of Kubernetes-native infrastructure?

There are many benefits of enterprise Kubernetes-native infrastructure, some of which are listed below.

- Agility

It brings the agility and simplicity of the public cloud to on-premises environments, which reduces friction between IT operations and developers.

- Cost efficiency

It saves money by not requiring a separate hypervisor layer to run VMs.

- Flexibility

Enterprise Kubernetes allows developers to deploy containers, serverless applications, and VMs, and to scale both applications and infrastructure.

- Extensibility

Using Kubernetes as the foundational layer for both private and public clouds makes a hybrid cloud easy to extend.

Why do you need Kubernetes?

Kubernetes helps you deliver and manage containerized, legacy, and cloud-native apps, as well as enterprise apps that are being refactored into microservices. Your app development team must be able to build new applications and services quickly to meet changing business needs. Cloud-native development starts with microservices in containers, which speeds up development and makes it simpler to transform and optimize existing applications. Production enterprise apps are made up of containers that must be deployed across multiple server hosts. Kubernetes gives you the orchestration and management capabilities you need to deploy containers for these workloads at scale.

Real-world use case

Let's say you created an online shopping application and decided to deploy it in a Docker container. You built a Docker image for the application and ran the image as a Docker container. Everything operates smoothly. As the application becomes more popular, your customer base grows, and under the high demand your server crashes. At this point, you plan a cluster setup, so you run five (5) instances of the application using Docker on a single computer. With the load spread out, the server handles the traffic easily. Then even more people start using your application, and one computer can no longer support all five instances. You decide to add more computers to the Docker container cluster. This is where the real issues arise.

- On a single computer, Docker containers can communicate easily. On its own, however, Docker does not replicate containers across many computers.

- Replication requires manual effort. To run N instances, we must create each one ourselves.

- On its own, Docker does not tell us when a running container has crashed.

- If a container crashes, we have to restart it manually. It is not capable of self-healing.

As mentioned earlier, we need a container orchestration solution to solve these issues, and Kubernetes is one. Many container orchestration tools exist, but Kubernetes is the one a lot of developers use. Kubernetes is a cluster application organized into a controller (master) node and worker (secondary) nodes. The worker nodes run the Docker containers. The controller node holds a key-value store of metadata about the containers that are currently running.

Kubernetes and DevOps

DevOps is a software development approach that combines app development and operations teams into a single group. Kubernetes is an open-source orchestration platform created to help you manage container deployments at scale. The two are closely linked.

Main points

Kubernetes' features and capabilities make it well suited to building, deploying, and scaling enterprise apps and DevOps pipelines. With these capabilities, teams can automate the manual work that orchestration requires. This automation helps teams increase productivity and, more significantly, quality.

You can define your entire infrastructure as code with Kubernetes. Kubernetes can manage access to your tools and applications, including databases, ports, and access controls. Environment configurations can also be managed as code. When deploying a new environment, you don't always need to run a script. Instead, you can give Kubernetes a source repository holding the configuration files.
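As an illustration of configuration as code, the Python sketch below builds a minimal Deployment manifest as a plain dictionary and prints it as JSON, a format Kubernetes accepts alongside YAML. The `deployment_manifest` helper, the app name, and the image reference are hypothetical. A real manifest would usually carry more fields, such as resource requests and health checks.

```python
import json


def deployment_manifest(name: str, image: str, replicas: int) -> dict:
    """Build a minimal apps/v1 Deployment description."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            "replicas": replicas,
            # The selector must match the labels on the pod template.
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {"containers": [{"name": name, "image": image}]},
            },
        },
    }


manifest = deployment_manifest("shop", "example.com/shop:1.0", replicas=3)
print(json.dumps(manifest, indent=2))
```

Files like this can be kept in a source repository and reviewed like any other code, which is what makes the environment reproducible.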

When you orchestrate your pipeline with Kubernetes, you can apply fine-grained access controls. This lets you restrict the actions that specific roles or applications can perform. For instance, you can restrict testers to builds while limiting customers to deployment or review processes.
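Fine-grained control of this kind is typically expressed as a list of allowed verbs on resources for each role, with everything else denied. The Python sketch below models only that idea. The role names follow the tester and customer example above, while the `ROLES` table, the verbs, and the resource names are assumptions for illustration, not real Kubernetes objects.

```python
from typing import Dict, Set, Tuple

Permission = Tuple[str, str]  # (verb, resource)

# Hypothetical roles: anything not listed here is denied.
ROLES: Dict[str, Set[Permission]] = {
    "tester": {("get", "builds"), ("create", "builds")},
    "customer": {("get", "deployments")},
}


def is_allowed(role: str, verb: str, resource: str) -> bool:
    """Deny by default; allow only what the role explicitly grants."""
    return (verb, resource) in ROLES.get(role, set())


print(is_allowed("tester", "create", "builds"))         # True
print(is_allowed("tester", "delete", "deployments"))    # False
print(is_allowed("customer", "get", "deployments"))     # True
```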

Developers can create infrastructure on demand with Kubernetes' self-service catalog functionality. This covers cloud services, such as AWS resources, made available through open service and API standards. These services are based on the configurations that operations members permit, which helps to maintain security and compatibility.

You can deploy new releases of Kubernetes resources without any downtime thanks to its automated rollback and rolling update features. Rather than shutting down production environments and re-deploying updated ones, you can use Kubernetes to shift traffic among your available services, updating one cluster at a time. These features let you carry out blue/green deployments efficiently. You can also run A/B tests to confirm that product features are wanted and to prioritize new customer features more quickly. In conclusion, Kubernetes and DevOps are not a perfect fit, but with a suitable configuration Kubernetes can be a very effective tool. Just be careful not to get sucked in too deeply, and keep in mind that K8s is not a universal fix.

AppMaster and Kubernetes

AppMaster is a no-code platform for developing all kinds of applications. It helps host user applications in Kubernetes, which makes enterprise apps easier to run and manage.

The bottom line

Since Kubernetes is open source, there isn't a formal support structure around it, at least not one you'd feel comfortable having your company rely on. If there were a problem with your Kubernetes setup while it was running in production, you would probably be frustrated.

Imagine Kubernetes as an automobile engine. Although an engine can run on its own, it only becomes part of a working vehicle when coupled with a transmission, axles, and wheels. In the same way, installing Kubernetes alone is not enough to create a production-grade platform. For Kubernetes to operate at its full potential, additional parts are required: tools for networking, security, monitoring, log management, and authentication must be added.

That's where AppMaster, the whole car, comes in. AppMaster is Kubernetes for businesses. It incorporates the different technologies, including registry, networking, telemetry, security, automation, and services, that make Kubernetes solid and practical for the workplace.

With AppMaster, your developers can create brand-new web and enterprise apps from scratch, host them, and deploy them in the cloud with the scalability, control, and orchestration required to turn a good concept into a new business swiftly.

Using the latest no-code technology and a drag-and-drop interface with a robust backend, you can try AppMaster to automate your container operations with Kubernetes and create your mobile or web application from scratch.