Docker Container Overview

What is Docker, what is its architecture, and why should you use it? Learn to build and deploy distributed applications easily to the cloud with Docker.

The software development world is growing and expanding so fast that new platforms and tools become popular every day. Docker is one of them, and it is paving the way for a new generation of developers. In this article, we'll explore what Docker and Docker Containers are, what benefits they offer, and how to use them. So, without further delay, let's dig into our Docker Container overview.

If this sounds too complex for your current level of knowledge, read until the end: at the bottom of the article, we also present a simpler no-code alternative that may be more suitable for beginners, citizen developers, and anyone who needs to develop an application as quickly and easily as possible.

What is a Docker Container?

Before discussing what a Docker Container is, we need to take a step back and talk about what Docker is. Docker is an open-source platform for developers. With Docker, an application can be packaged, together with all its dependencies, in the form of a container. With this system, every application can work in an isolated environment: each container is independent and contains the application with its dependencies and libraries. This way, applications won't interfere with one another, and the developer can work on each of them independently. Docker Containers are also important and helpful when it comes to teamwork.

A developer, for example, could build a container image and share it with the team. Teammates could then run containers from that image, replicating the entire environment set up by the first programmer.

Docker Architecture

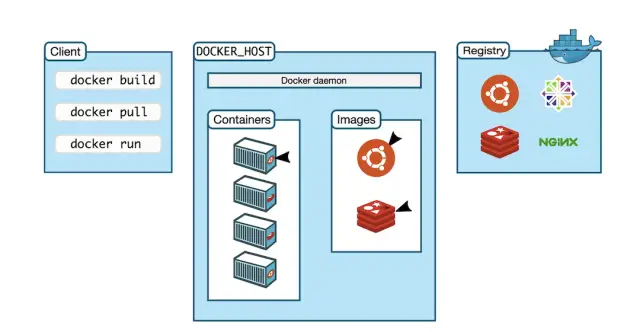

Docker uses a client-server architecture built from the following "bricks":

- The Docker Daemon: it listens for the Docker Client's requests and manages Docker objects such as images, containers, volumes, and networks.

- The Docker Client: it is the main way users interact with the Docker platform (for example, through the docker command-line tool).

- Docker Hub: it's a public registry (registries store images, see below), meaning that any Docker user can access it and search for images there. By default, Docker looks for images on Docker Hub, but you can also set up and use a private registry.

- Docker images: they are read-only templates for creating containers. A container image can be based on another image, with some customization added on top. Besides using images created by other users and available on public registries, you can create your own images and store them in a private registry or share them in a public one.

- Docker containers: they are the runnable instances of the container images. Every running container is well isolated from all the others, but you could also assemble them to create your application. Containers can be seen as isolated running software or as building blocks of the same application.
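To make the link between registries and images concrete, here is a simplified Python sketch of how a short image name such as docker/getting-started expands to a full reference. The full reference includes a registry (Docker Hub's docker.io by default) and a tag (latest by default). The function name is hypothetical, and the rules are deliberately simplified: image digests and other edge cases are ignored.

```python
# Simplified sketch (assumption): how a short image name expands to a full
# reference. Digests (@sha256:...) and other edge cases are ignored.
DEFAULT_REGISTRY = "docker.io"  # Docker Hub
DEFAULT_TAG = "latest"


def normalize_image_ref(ref: str) -> str:
    """Expand e.g. 'nginx' to 'docker.io/library/nginx:latest' (hypothetical helper)."""
    name, tag = ref, DEFAULT_TAG
    # A ':' after the last '/' separates the tag; a ':' before it belongs
    # to a registry host:port such as 'localhost:5000/app'.
    colon = ref.rfind(":")
    if colon > ref.rfind("/"):
        name, tag = ref[:colon], ref[colon + 1:]

    first, _, rest = name.partition("/")
    # The first path component is a registry host only if it looks like one.
    if rest and ("." in first or ":" in first or first == "localhost"):
        registry, path = first, rest
    else:
        registry, path = DEFAULT_REGISTRY, name

    # Single-name images on Docker Hub (official images) live under 'library/'.
    if registry == DEFAULT_REGISTRY and "/" not in path:
        path = "library/" + path
    return f"{registry}/{path}:{tag}"


for image in ["docker/getting-started", "nginx:1.25", "localhost:5000/myapp"]:
    print(image, "->", normalize_image_ref(image))
```

This is why a plain image name is pulled from Docker Hub unless the name starts with a different registry host.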

Now that you know what each component is and does, you can better understand the Docker Architecture: the Docker Client "talks" to the Docker Daemon, which does the actual work of building and running Docker Containers. The Docker Client communicates with the Docker Daemon through a REST API, over UNIX sockets or a network interface. One Docker Client can communicate with more than one Docker Daemon.
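Here is roughly what the Docker Client does when you list containers: it sends an HTTP request to the Docker Engine API over the Daemon's UNIX socket. This sketch uses only the Python standard library. It assumes the Daemon listens on /var/run/docker.sock (the usual default on Linux) and that your user is allowed to access that socket. The class and function names are our own.

```python
# Sketch (assumption): a minimal Engine API client over the daemon's UNIX
# socket. Class and function names are hypothetical.
import http.client
import json
import socket


class UnixHTTPConnection(http.client.HTTPConnection):
    """An HTTP connection that goes over a UNIX socket instead of TCP."""

    def __init__(self, socket_path: str, timeout: float = 5.0):
        # The host name is only used for the HTTP Host header.
        super().__init__("localhost", timeout=timeout)
        self.socket_path = socket_path

    def connect(self) -> None:
        sock = socket.socket(socket.AF_UNIX, socket.SOCK_STREAM)
        sock.settimeout(self.timeout)
        try:
            sock.connect(self.socket_path)
        except OSError:
            sock.close()  # don't leak the socket if the daemon isn't there
            raise
        self.sock = sock


def list_containers(socket_path: str = "/var/run/docker.sock") -> list:
    """Roughly what `docker ps` asks for: GET /containers/json."""
    conn = UnixHTTPConnection(socket_path)
    try:
        conn.request("GET", "/containers/json")
        response = conn.getresponse()
        if response.status != 200:
            raise RuntimeError(f"daemon returned HTTP {response.status}")
        return json.loads(response.read())
    finally:
        conn.close()
```

On a host with a running Daemon, calling list_containers() returns a list of JSON objects (with fields such as Id, Image, and Status). This is roughly the same data that docker ps prints as a table.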

Why do we need a Docker container?

The principle behind Docker Containers and their deployment is containerization. To understand its importance and advantages, it helps to describe a development scenario without containerization.

Before Containerization

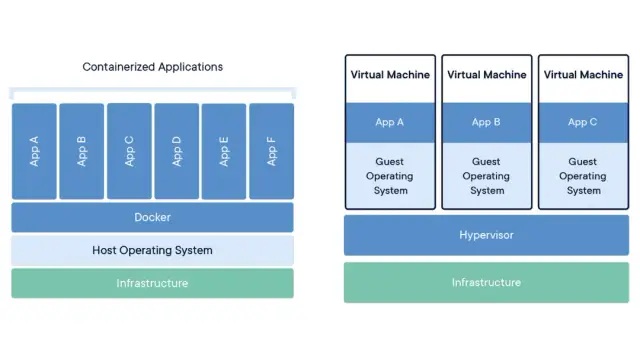

Developers have always wanted to work on applications in isolated environments so that they won't interfere with one another. Before containerization, the usual way of isolating applications and their dependencies was to place each of them on a separate virtual machine.

This way, the applications run on the same physical hardware, while the separation between them is virtual. Virtualization, however, has several limits, which become especially clear when we compare it to containerization. First of all, virtual machines are bulky, because each one includes a full operating system. Second, running multiple virtual machines on the same host can make the performance of each of them unpredictable.

There are also other problems related to the use of virtual machines when it comes to updates, portability, and integrations, and booting a virtual machine can be very time-consuming. These problems pushed the developer community to look for a new solution: containerization.

Containerization

Containerization is also a type of virtualization, but it works at the operating system level. While virtual machines virtualize the hardware, containerization virtualizes the operating system: containers share the host's kernel, but each one gets its own isolated view of processes, files, and network.

Containers are more efficient than virtual machines because:

- They use the host operating system, so there is no guest operating system.

- They can share common libraries (through shared image layers) and resources, which keeps their overhead low and their execution fast.

- Because all the containers share the same host operating system, the bootup process is also extremely fast (starting a container typically takes seconds or less).

In other words, with containerization, the stack looks like this:

- a shared host operating system at the base

- a container engine

- containers that only contain their application's specific libraries and dependencies and that are completely isolated from one another.

With virtual machines, by contrast, the structure is the following:

- a host operating system (and its kernel);

- a hypervisor that creates and runs the virtual machines;

- a separate guest operating system for each of the applications;

- the different applications with their libraries and dependencies.

As we mentioned, the major difference between the two systems is the absence of a guest operating system in the containerization model, and that makes all the difference.
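You can see the missing guest operating system for yourself: a container reports the host's kernel version, because it runs on that kernel. The sketch below assumes a Linux host and a script saved as kernel.py (a hypothetical file name). Run it once on the host and once inside a container, for example with the command in the docstring, then compare the two lines. On macOS and Windows, Docker Desktop runs containers inside a lightweight Linux virtual machine, so the container reports that VM's kernel instead.

```python
"""Print the operating system and kernel this process runs on.

Assumption: save as kernel.py (hypothetical name) and compare the output on
a Linux host with the output inside a container, e.g.:
  docker run --rm -v "$PWD":/app python:3.12-slim python /app/kernel.py
"""
import platform


def kernel_info() -> str:
    # platform.release() reports the running kernel's release string,
    # the same value that `uname -r` prints on Linux.
    return f"{platform.system()} {platform.release()}"


print(kernel_info())
```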

The benefits of Docker

Docker is a platform that allows you to take advantage of containerization as we've described it. If we had to sum up its benefits for developers, they would be the following.

Isolated Environment & Multiple containers

Docker not only allows you to create and set up containers that are isolated from one another and can work without disturbing each other, but it also allows you to run multiple containers at the same time on the same host. Each container can be limited to the resources assigned to it. Furthermore, removing an application you don't need anymore is also easier: you only need to remove its container.
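Resources are assigned per container. As a sketch, the CLI flags --memory and --cpus (as in docker run --memory 256m --cpus 0.5) are sent to the Daemon as two Docker Engine API fields. HostConfig.Memory holds the memory limit in bytes. HostConfig.NanoCpus holds the CPU limit in billionths of a CPU. The helper functions below are hypothetical and only show the unit conversion. They assume Docker's binary (1024-based) size suffixes.

```python
# Sketch (assumption): converting docker run's --memory / --cpus values into
# the Engine API's HostConfig fields. Helper names are hypothetical.
_SUFFIXES = {"b": 1, "k": 1024, "m": 1024**2, "g": 1024**3}


def parse_memory(value: str) -> int:
    """Convert a size such as '256m' or '1g' to bytes (binary units)."""
    value = value.strip().lower()
    if value and value[-1] in _SUFFIXES:
        return int(float(value[:-1]) * _SUFFIXES[value[-1]])
    return int(value)  # a bare number is already in bytes


def resource_limits(memory: str, cpus: float) -> dict:
    """Build the HostConfig part of a container-create request body."""
    return {
        "HostConfig": {
            "Memory": parse_memory(memory),      # hard memory limit, in bytes
            "NanoCpus": int(round(cpus * 1e9)),  # 0.5 CPU -> 500000000
        }
    }


print(resource_limits("256m", 0.5))
```

Each container carries its own HostConfig. The Daemon then has the kernel (through cgroups) enforce those limits, so one container cannot use another container's resources.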

Deployment speed

Thanks to its structure (see the section on containerization above), Docker makes the application deployment process a lot faster than the alternative of using virtual machines. Docker Containers start so quickly because they share the host's kernel: starting a container means starting an isolated process on a system that is already running, not booting a whole operating system.

Flexibility & Scalability

Docker Containers make it a lot easier to change your applications. When you need to work on one application, you can simply access its container, without affecting the others in any way. The Docker Compose tool (see the section below) further enhances flexibility and scalability in ways that are hard to achieve with other application development approaches.

Portability

Applications created within software containers are highly portable. Docker Containers can run on any host whose operating system supports Docker. Once you have created your application within a container, you can move it to any platform that supports Docker, and it should behave the same way on all of them.
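Portability has one practical caveat: images are built for a specific operating system and CPU architecture. These are identified by platform strings such as linux/amd64 or linux/arm64, and multi-platform images bundle several variants. This Python sketch maps a machine type to the matching Linux platform string. The function name is hypothetical, and the lookup table is a simplified assumption that covers only common machines.

```python
# Sketch (assumption): map the host's machine type to a Docker platform
# string. The table is simplified and covers only common CPUs.
import platform
from typing import Optional

_ARCH = {"x86_64": "amd64", "amd64": "amd64", "aarch64": "arm64", "arm64": "arm64"}


def docker_platform(machine: Optional[str] = None) -> str:
    """Return e.g. 'linux/amd64' for the given (or current) machine type."""
    machine = (machine or platform.machine()).lower()
    arch = _ARCH.get(machine)
    if arch is None:
        raise ValueError(f"unmapped machine type: {machine!r}")
    # Linux containers always run on a Linux kernel; on macOS and Windows,
    # Docker Desktop supplies one inside a lightweight virtual machine.
    return f"linux/{arch}"
```

You can pass the result to docker run --platform (for example, docker run --platform linux/arm64 IMAGE) to pick a specific variant of a multi-platform image.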

Security

Docker Containers enhance security because:

- One application (and its possible problems) doesn't affect any other.

- The developer controls which network traffic reaches each container, for example by choosing which ports to publish and which networks to connect it to.

- Each running container can be given its own set of resources and limits.

- An application can't access another application's data unless that data is explicitly shared, for example through a shared volume.

What is Docker Compose?

Docker Compose is a Docker tool that brings the "power" of Docker and containerization to another level. With this tool, your application development process can become much faster and easier. Docker Compose is a command-line tool that lets you define multiple containers (called services) in a single YAML file, usually named compose.yaml, and assemble them into one application. The whole application can then be started on a single host with one command, docker compose up.
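One thing Docker Compose handles when it assembles an application is startup order: services listed under depends_on start first. The Python sketch below reproduces only that ordering, using the standard library's graphlib. It is not how Compose is implemented. The service names and images are hypothetical, and the dictionary mirrors the shape of the services section of a compose.yaml file.

```python
# Sketch (assumption): derive a compose-style startup order from depends_on.
# This mirrors the shape of a compose.yaml "services" section; service names
# and images are hypothetical. It is not Docker Compose's implementation.
from graphlib import TopologicalSorter

services = {
    "web": {"image": "example/web", "depends_on": ["api"]},
    "api": {"image": "example/api", "depends_on": ["db", "cache"]},
    "db": {"image": "postgres:16"},
    "cache": {"image": "redis:7"},
}


def startup_order(services: dict) -> list:
    """Return service names so that every dependency comes before its dependents."""
    # TopologicalSorter expects {node: set_of_predecessors}.
    graph = {name: set(cfg.get("depends_on", [])) for name, cfg in services.items()}
    return list(TopologicalSorter(graph).static_order())


print(startup_order(services))
```

By default, Compose only waits for a dependency's container to start, not for the application inside it to be ready. The long form of depends_on with condition: service_healthy covers that case.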

With Docker Compose, you can divide a complex application development project into smaller parts. You can work on the different parts separately and then assemble them into your final web app or other application.

Using Docker Compose also means that you can reuse the containers you've created for this project in other projects. It also means that when you need to update a single part, you can work on it without affecting the entire application.

Steps to Using Docker Container

By reading this article, you've already taken your first steps into the world of application development with Docker. To use such a powerful yet complex tool, though, you need a good understanding of its main aspects. Once you have Docker installed on your computer (it is available for Mac, Windows, and Linux), your next steps are to learn the following.

- How to build and run containers

- How to deploy applications

- How to run an application using Docker Compose

One of the many advantages of using Docker is that detailed documentation is available online, written and published by the Docker team themselves (so it's very reliable). You can rely on their documentation both at the beginning and throughout your application development journey. However, we'd recommend starting with these two steps:

Start the tutorial

Docker has a built-in tutorial for new users. To launch it, open a command prompt and type this command (you can copy and paste it):

docker run -d -p 80:80 docker/getting-started

You can already learn a few things just by looking at this command:

- -d runs the container in detached mode (in the background);

- -p 80:80 maps port 80 on the host to port 80 on the container;

- docker/getting-started specifies the image to use.
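Applying the client/daemon architecture to this command: the client turns these flags into Engine API calls. It first creates the container (POST /containers/create) and then starts it (POST /containers/{id}/start). This sketch builds the create request body for the -p and image arguments. The helper names are hypothetical, and only the simple HOST:CONTAINER form of -p is handled. The -d flag is not represented here: in detached mode, the client starts the container and returns instead of attaching to its output.

```python
# Sketch (assumption): the request body a client could send to
# POST /containers/create for: docker run -d -p 80:80 docker/getting-started
# Helper names are hypothetical; field names follow the Docker Engine API.


def parse_publish(spec: str) -> tuple:
    """Split a '-p HOST:CONTAINER' spec; TCP is assumed if no '/proto' is given."""
    host_port, container_port = spec.split(":")  # only the simple two-part form
    if "/" not in container_port:
        container_port += "/tcp"
    return host_port, container_port


def create_body(image: str, publish: list) -> dict:
    exposed, bindings = {}, {}
    for spec in publish:
        host_port, container_port = parse_publish(spec)
        exposed[container_port] = {}
        bindings.setdefault(container_port, []).append({"HostPort": host_port})
    return {
        "Image": image,
        "ExposedPorts": exposed,
        "HostConfig": {"PortBindings": bindings},
    }


print(create_body("docker/getting-started", ["80:80"]))
```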

Understand the dashboard

Right after you've gone through the tutorial, and before digging into the documentation provided by the Docker team, it is important to understand the Docker Dashboard. It is a panel in Docker Desktop that provides quick access to container logs and to each container's life cycle. For example, it is very easy to start, stop, or delete containers from the dashboard.

Docker Container: does it require application development knowledge?

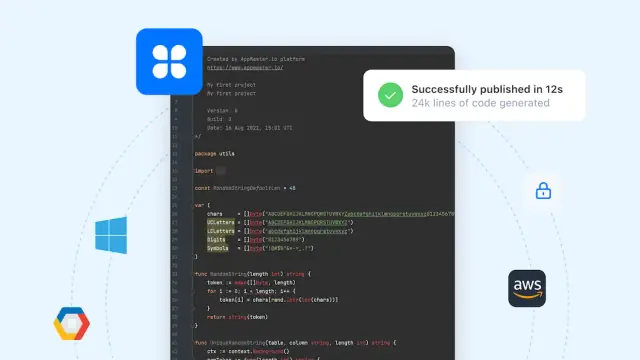

Using Docker, its containers, and the Docker Compose tool requires some knowledge of programming languages, frameworks, and architecture. Docker can make the process easier, but only for those who are already familiar with programming and deploying applications. For beginners, citizen developers, or anyone searching for the simplest possible way to deploy applications, there is, however, a valid alternative: no-code software development and AppMaster.

AppMaster is, in fact, a world-leading no-code platform that allows you to create web apps, mobile apps, and backends without writing code manually. AppMaster provides building blocks for your project, as well as a visual interface where you can assemble them with a drag-and-drop system. While you create your software this way, AppMaster automatically generates the source code for you. The source code is accessible at any moment, and it is also exportable.

AppMaster's software building blocks can resemble Docker images in some way, but they provide you with the precious advantage that they can be assembled without writing source code at all. AppMaster can be a more suitable platform for you if you are a beginner.

Interesting fact: AppMaster also uses Docker containers. By default, all client applications are automatically hosted by AppMaster in isolated Docker Containers. Typically, when a client clicks the publish button, AppMaster generates the source code, compiles it, tests it, and packages it in a Docker Container in less than 30 seconds. The platform then sends this container image to AppMaster's own Docker registry, a hub within the platform that is based on Harbor, an open-source registry solution. After that, AppMaster sends a command to the target server so that it pulls this container and launches it.

Conclusion

Docker is an important tool in the hands of developers. As we've seen, it resolves many of the limits of the older virtual machine approach. It is still, however, a method suited to expert developers: after all, while you can use templates and images, you still need to write code to do a lot of things within the Docker platform. If you are looking for an easier alternative and want to avoid writing code at all, AppMaster and the no-code approach are the perfect solution for you.