Utilizing Docker for Microservices Architecture

Explore the benefits of utilizing Docker in a microservices architecture, including containerization and deployment strategies, to accelerate application development and enhance scalability.

Docker and Microservices

Microservices architecture has become increasingly popular in recent years because it offers significant benefits in scalability, flexibility, and maintainability. At its core, it is an architectural pattern in which a single application is composed of small, independent services, each responsible for a specific function and communicating with the others through APIs. This modularity allows for faster development and deployment, easier testing, and more straightforward scaling.

In this context, Docker emerges as a powerful tool for working with microservices. Docker is an open-source platform that facilitates application development, deployment, and management through containerization. It enables developers to package applications and their dependencies into lightweight, portable containers, ensuring that applications run consistently across different environments and stages of development. With Docker, developers can streamline the process of building, managing, and scaling microservices.

Why Use Docker for Microservices Architecture?

Docker and microservices are a natural fit for several key reasons.

Standardized Environment

Docker allows developers to create a standardized application environment by packaging all the necessary components, including the application itself, libraries, and dependencies, into a single, self-contained unit called a container. This standardization reduces the risk of environmental inconsistencies that can occur between development, staging, and production environments, ensuring that microservices consistently behave as expected.

Accelerated Development

Using Docker containers significantly accelerates development for microservices. Since each container is an isolated environment, developers can work on individual services without worrying about conflicting dependencies or libraries. Furthermore, Docker images can be easily shared among team members, so everyone can quickly run the same services on their local machines, which also improves collaboration.

Enhanced Portability

Containers created with Docker are highly portable, allowing developers to move applications between different environments and platforms easily. This portability ensures that microservices can be deployed and run consistently on different systems, regardless of the underlying infrastructure. As a result, development teams can focus on building the best possible applications without worrying about system-specific nuances.

Reduced System Resource Usage

A microservices architecture can increase resource consumption, because running each service on its own machine or virtual machine adds overhead. Docker addresses this with lightweight containers that share the host operating system's kernel rather than each running a full guest operating system, which reduces overall resource consumption compared to running multiple virtual machines.

Simplified Microservices Management

Docker simplifies the management and monitoring of microservices by providing a consistent environment for deploying and running containers. Developers can use tools like Docker Compose to define the entire application stack, including individual microservices and their dependencies, making it easy to deploy and manage services coherently.

Containerizing Microservices with Docker

Containerizing microservices with Docker involves creating a Dockerfile containing instructions for building a Docker image. This section will guide you through the process of containerizing a sample microservice using Docker.

Create a Dockerfile

A Dockerfile is a script containing instructions for building a Docker image. The Dockerfile defines the base image, the application's source code, dependencies, and configurations needed for the service to run. Create a new file named `Dockerfile` in the root directory of your microservice.

Define the Base Image

Specify the base image for your microservice by adding the `FROM` instruction to your Dockerfile. The base image is the foundation for your container, providing the necessary runtime environment. Choose it carefully: an official, minimal image from Docker Hub is usually a good starting point, or you can use a custom image tailored to your needs. For example, if your microservice is developed in Node.js, you might use the following line in your Dockerfile:

FROM node:14

Set the Working Directory

Set the container's working directory using the `WORKDIR` instruction. This directory will hold the application's source code and dependencies, and later instructions run relative to it.

WORKDIR /app

Copy the Source Code and Dependencies

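Copy the source code and any required files from the local machine into the image using the `COPY` instruction, and install dependencies with the package manager for your stack, such as npm, pip, or Maven. Copying the dependency manifests and installing before copying the rest of the source lets Docker reuse the cached install layer when only application code changes.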

COPY package*.json ./

RUN npm install

COPY . .

Expose the Service Port

Declare the port on which the microservice listens using the `EXPOSE` instruction. `EXPOSE` documents the port but does not publish it; to reach the service from the host, map the port with the `-p` flag when you run the container.

EXPOSE 8080

Run the Application

Start the microservice using the `CMD` instruction, which specifies the command the container runs by default when it starts.

CMD ["npm", "start"]

After creating the Dockerfile, build the Docker image by running the following command in the same directory as the Dockerfile:

docker build -t your-image-name .

Finally, run the Docker container using the newly created image:

docker run -p 8080:8080 your-image-name

Your microservice is now containerized and running within a Docker container. This process can be repeated for each microservice in your application, allowing you to develop, test, and deploy your microservices in a streamlined, efficient, and consistent manner.
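Because the same handful of steps repeats for every service, the Dockerfile can also be generated from a few parameters. The following is a minimal sketch in Python, not part of Docker itself: `render_dockerfile` is a hypothetical helper that assembles the instructions shown in this section into one file's text, assuming a Node.js service managed with npm.

```python
import json


def render_dockerfile(base_image: str, workdir: str, port: int, start_cmd: list[str]) -> str:
    """Assemble the Dockerfile steps from this section into one file's text.

    Hypothetical helper for illustration; assumes a Node.js service using npm.
    """
    lines = [
        f"FROM {base_image}",
        f"WORKDIR {workdir}",
        # Copy the dependency manifests first so the install layer can be cached.
        "COPY package*.json ./",
        "RUN npm install",
        "COPY . .",
        f"EXPOSE {port}",
        # json.dumps yields the exec-form array, e.g. ["npm", "start"].
        f"CMD {json.dumps(start_cmd)}",
    ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(render_dockerfile("node:14", "/app", 8080, ["npm", "start"]), end="")
```

Writing the returned text to a file named `Dockerfile` in a service's root directory gives the same result as typing the steps by hand.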

Deploying and Orchestrating Docker Containers

Deploying and orchestrating Docker containers is an essential part of managing microservices architectures. Container orchestration tools automate the deployment, management, and scaling of individual containers, ensuring that the microservices work together efficiently. Two popular container orchestration platforms are Kubernetes and Docker Swarm.

Kubernetes

Kubernetes is an open-source container orchestration platform that automates the deployment, scaling, and management of containerized applications. It is widely adopted due to its rich feature set and extensive ecosystem. Some key benefits of Kubernetes include:

- Scalability: Kubernetes uses declarative configuration to manage container scaling, making it easier to scale applications based on demand.

- High Availability: Kubernetes ensures high availability by distributing containers across different nodes and automatically managing container restarts in the event of failures.

- Load Balancing: Kubernetes can balance requests among multiple instances of a microservice, enhancing performance and fault tolerance.

- Logging and Monitoring: Kubernetes integrates with various logging and monitoring tools, simplifying the tracking of applications' health and performance.

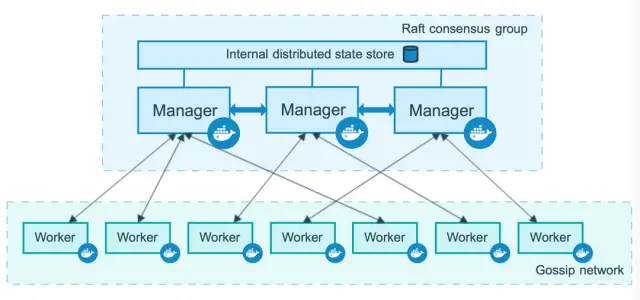

Docker Swarm

Docker Swarm is a native clustering and orchestration solution for Docker containers. It is integrated directly into the Docker platform, making it a simple and intuitive choice for Docker users. Docker Swarm offers the following advantages:

- Easy Setup: Docker Swarm doesn't require extensive installation or configuration. It operates seamlessly with the Docker CLI and API, making deploying and managing containers simple.

- Scaling: Docker Swarm allows users to scale services quickly and efficiently by adjusting the number of container replicas for each service.

- Load Balancing: Docker Swarm automatically distributes requests among containers, improving application performance and resilience.

- Service Discovery: Docker Swarm includes an embedded DNS server for service discovery, enabling containers to discover and communicate with one another.

Image source: Docker Docs

Both Kubernetes and Docker Swarm are popular orchestration tools for managing Docker containers in microservices architectures. Choosing the appropriate tool depends on the specific requirements of your application and the existing infrastructure and team expertise.
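To make the load-balancing and service-discovery behaviour described for both platforms concrete, here is a toy Python model of the idea. It is only an illustration: `ServiceRegistry`, the service name, and the addresses are hypothetical, and round-robin is just one simple policy; the algorithms Kubernetes and Docker Swarm actually use may differ.

```python
from collections import defaultdict


class ServiceRegistry:
    """Toy name-to-replica lookup with round-robin selection (illustrative only)."""

    def __init__(self) -> None:
        self._replicas: dict[str, list[str]] = defaultdict(list)
        self._next_index: dict[str, int] = defaultdict(int)

    def register(self, service: str, address: str) -> None:
        """Record one replica address under a service name."""
        self._replicas[service].append(address)

    def resolve(self, service: str) -> str:
        """Return the next replica for the service, cycling through all of them."""
        replicas = self._replicas.get(service)
        if not replicas:
            raise LookupError(f"no replicas registered for {service!r}")
        index = self._next_index[service] % len(replicas)
        self._next_index[service] = index + 1
        return replicas[index]


if __name__ == "__main__":
    registry = ServiceRegistry()
    for address in ("10.0.0.2:8080", "10.0.0.3:8080"):
        registry.register("orders", address)
    # Callers ask for "orders" by name and are spread across the replicas.
    for _ in range(3):
        print(registry.resolve("orders"))
```

Callers only ever use the service name, which is what lets replicas be added or removed without changing client code.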

Creating a Dockerized Microservices Application

Let's walk through the steps to create a Dockerized microservices application:

- Design Microservices: Break down your application into multiple small, modular services that can be independently developed, deployed, and scaled. Each microservice should have a well-defined responsibility and communicate with others via APIs or messaging queues.

- Create Dockerfiles: For each microservice, create a Dockerfile that specifies the base image, application code, dependencies, and configuration required to build a Docker image. This image is used to deploy microservices as containers.

- Build Docker Images: Run the Docker build command to create Docker images for each microservice, following the instructions defined in the corresponding Dockerfiles.

- Create Networking: Establish networking between containers to enable communication between microservices. Networking can be done using Docker Compose or a container orchestration tool like Kubernetes or Docker Swarm.

- Configure Load Balancing: Set up a load balancer to distribute requests among microservices instances, ensuring optimal performance and fault tolerance. Use tools like Kubernetes Ingress or Docker Swarm’s built-in load balancing.

- Deploy Microservices: Deploy your microservices as Docker containers using the container orchestration platform of your choice. This will create an environment where microservices can run, communicate with one another, and scale on demand.

Once all these steps are completed, the microservices application will be up and running, with each microservice deployed as a Docker container.
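The networking step above can be sketched as a Compose-style definition in which every service joins one shared network. The Python below only builds and prints that structure; the service names, ports, directory layout, and the `compose_definition` helper are assumptions made for illustration. Because JSON is also valid YAML, the printed output should be usable as the body of a Compose file, though it is worth validating against your Compose version.

```python
import json


def compose_definition(services: dict[str, int], network: str = "backend") -> dict:
    """Build a Compose-style mapping: one entry per service, all on one network.

    Hypothetical helper; assumes each service lives in ./<name> with its own Dockerfile.
    """
    return {
        "services": {
            name: {
                "build": f"./{name}",         # directory holding that service's Dockerfile
                "ports": [f"{port}:{port}"],  # publish as host:container
                "networks": [network],        # attach the service to the shared network
            }
            for name, port in services.items()
        },
        # Declare the user-defined network that the services attach to.
        "networks": {network: {}},
    }


if __name__ == "__main__":
    print(json.dumps(compose_definition({"orders": 8080, "billing": 8081}), indent=2))
```

Services attached to the same user-defined network can reach each other by service name, which is what makes the later load-balancing and deployment steps independent of container IP addresses.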

Monitoring and Scaling Dockerized Microservices

Monitoring and scaling are essential to ensuring the performance, reliability, and efficiency of a Dockerized microservices application. Here are some key strategies to consider:

Monitoring

Monitoring tools help track the health and performance of Docker containers, ensuring that your microservices are running optimally. Some popular monitoring tools include:

- Prometheus: A powerful open-source monitoring and alerting toolkit for containerized environments, including integrations with Grafana for visualization and alerting.

- Datadog: A comprehensive observability platform that can aggregate container metrics, logs, and traces, providing real-time insights into application performance.

- ELK Stack: A combination of Elasticsearch, Logstash, and Kibana, used for the centralized search, analysis and visualization of logs from Docker containers.

Ensure that your monitoring setup collects relevant metrics, logs, and performance data to identify potential issues and troubleshoot them effectively.
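As an example of what "relevant metrics" can look like, Prometheus collects metrics by scraping a plain-text format over HTTP. The sketch below hand-rolls a request counter and renders it in that text format. The metric name, the `service` label, and the `Counter` class are hypothetical; a real service would more likely use an official client library than code like this.

```python
class Counter:
    """A value that only goes up, tracked per service (illustrative only)."""

    def __init__(self, name: str, help_text: str) -> None:
        self.name = name
        self.help_text = help_text
        self._values: dict[str, int] = {}

    def inc(self, service: str, amount: int = 1) -> None:
        """Add to the counter for one service; negative amounts are rejected."""
        if amount < 0:
            raise ValueError("counters can only increase")
        self._values[service] = self._values.get(service, 0) + amount

    def render(self) -> str:
        """Render HELP and TYPE lines followed by one sample line per service."""
        lines = [
            f"# HELP {self.name} {self.help_text}",
            f"# TYPE {self.name} counter",
        ]
        for service in sorted(self._values):
            lines.append(f'{self.name}{{service="{service}"}} {self._values[service]}')
        return "\n".join(lines) + "\n"


if __name__ == "__main__":
    requests_total = Counter("http_requests_total", "Total HTTP requests handled.")
    requests_total.inc("orders")
    requests_total.inc("billing", 2)
    print(requests_total.render(), end="")
```

Labelling each sample with the service name is what allows a single dashboard to compare services side by side.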

Scaling

Scaling Dockerized microservices involves adjusting the number of containers running each service to adapt to varying workloads. Container orchestration platforms make this straightforward: Kubernetes can scale automatically based on observed metrics (for example, with its Horizontal Pod Autoscaler), while Docker Swarm scales a service to the replica count you specify.

- Horizontal Scaling: Horizontal scaling involves increasing or decreasing the number of instances for each microservice based on demand. This can be achieved by adjusting the desired replicas for each service in the orchestration platform's configuration.

- Vertical Scaling: Vertical scaling entails adjusting the resources allocated to individual containers, such as CPU and memory limits. This ensures optimal resource utilization and can be managed through the orchestration platform's configuration.
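For horizontal scaling, the replica count is typically derived from how far a measured metric is from its target. The sketch below follows the rule documented for the Kubernetes Horizontal Pod Autoscaler, which scales the current replica count by the ratio of current to target metric and rounds up. The function name, the default bounds, and the way the tolerance is applied here are simplifying assumptions, not the autoscaler's actual implementation.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,
    max_replicas: int = 10,
    tolerance: float = 0.1,
) -> int:
    """Return the replica count suggested by the ratio of current to target load."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    ratio = current_metric / target_metric
    # Skip scaling when the metric is already close enough to the target.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    wanted = math.ceil(current_replicas * ratio)
    # Keep the result within the configured bounds.
    return max(min_replicas, min(max_replicas, wanted))


if __name__ == "__main__":
    # Four replicas averaging 90% CPU against a 60% target.
    print(desired_replicas(4, 90, 60))
```

Vertical scaling has no equivalent formula in this sketch; it is usually a matter of editing the CPU and memory limits in the service's configuration.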

By effectively monitoring and scaling Dockerized microservices applications, you can maximize efficiency and ensure high availability, performance, and resilience.

Best Practices for Docker and Microservices

Using Docker in a microservices architecture offers numerous benefits, but to maximize its potential and ensure a seamless development and deployment process, it's essential to follow some best practices:

- Minimize Docker image size: Keeping Docker images small helps reduce build times and resource consumption, which is particularly important in a microservices architecture. Utilize multi-stage builds, use small and appropriate base images, and remove any unnecessary files from the final image.

- Layered architecture for Docker images: Structure your Dockerfile to take advantage of layer caching and speed up builds. Docker caches the layer produced by each instruction; if an instruction and the files it uses are unchanged, the cached layer is reused, but once one layer changes, every layer after it is rebuilt. Place rarely changed steps first and frequently changed ones toward the end of the file.

- Consistent image tagging and versioning: Properly tag and version your images to easily track changes and roll back to previous versions if necessary. This helps in maintaining application stability and simplifies troubleshooting.

- Implement logging and monitoring: Incorporate logging and monitoring solutions to effectively manage and observe your containerized microservices. Docker provides native logging drivers, but you can also integrate third-party tools that are designed for microservices architectures, such as Elasticsearch, Logstash, and Kibana (ELK Stack) or Prometheus.

- Adopt container orchestration platforms: Use container orchestration tools like Kubernetes or Docker Swarm to automate deployment, scaling, and management tasks. These tools handle complex tasks like load balancing, rolling updates, and scaling services to the desired number of replicas, helping your microservices run reliably.

- Enhance security: Improve the security of your containerized microservices by applying the principle of least privilege, using secure base images, and minimizing the attack surface by limiting the number of installed packages. Enable network segmentation between services and scan for vulnerabilities in your Docker images.

- Use environment variables for configuration: Decouple configuration from Docker images and use environment variables to better separate concerns. This ensures that a single Docker image can be configured differently for various environments, enhancing flexibility and reducing duplication.
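The last practice, configuration through environment variables, can be sketched as follows. The example is in Python for brevity; a Node.js service would read `process.env` in the same way. The variable names `SERVICE_PORT`, `LOG_LEVEL`, and `DATABASE_URL`, and their defaults, are hypothetical.

```python
import os
from dataclasses import dataclass
from typing import Mapping


@dataclass(frozen=True)
class ServiceConfig:
    """Settings that vary between environments (names are illustrative)."""

    port: int
    log_level: str
    database_url: str


def load_config(env: Mapping[str, str] = os.environ) -> ServiceConfig:
    """Read settings from the environment, falling back to development defaults."""
    return ServiceConfig(
        port=int(env.get("SERVICE_PORT", "8080")),
        log_level=env.get("LOG_LEVEL", "info"),
        database_url=env.get("DATABASE_URL", "postgres://localhost:5432/app"),
    )


if __name__ == "__main__":
    print(load_config())
```

The same image can then be started with different settings per environment, for example by passing `-e LOG_LEVEL=debug` to `docker run`.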

Conclusion

Utilizing Docker in a microservices architecture allows developers and organizations to reap the full benefits of containerization, leading to more agile, efficient, and scalable applications. By following the best practices outlined above, you can seamlessly integrate Docker into your development and deployment processes in a microservices architecture, transforming how you build and maintain your applications.

Moreover, integrating Docker with no-code platforms like AppMaster can help elevate your application development experience. AppMaster enables users to visually create web, mobile, and backend applications, and the generated source code can be containerized and managed using Docker for smooth and scalable deployment. Combining the power of Docker and AppMaster can greatly enhance the application development process, making it more efficient, cost-effective, and faster than ever before.

FAQ

What is Docker?

Docker is an open-source platform for containerizing applications, making it easier to build and deploy distributed systems, including microservices. It automates the deployment of applications in lightweight, portable containers, facilitating collaboration and ensuring consistency between environments.

What are microservices?

Microservices are a software architecture pattern where a single application is designed as a collection of small, modular, and independently deployable services. Each service performs a specific function, communicates with other services through APIs, and can be updated, deployed, and scaled independently.

How does Docker benefit a microservices architecture?

Docker streamlines the development, deployment, and scaling of microservices by providing a standardized environment for containerizing applications. This accelerates development, enhances portability, reduces system resource demands, and simplifies management and orchestration of microservices.

How do you containerize a microservice with Docker?

To containerize a microservice using Docker, you write a Dockerfile that contains instructions for building a Docker image of the service. The Dockerfile defines the base image, the application's source code, dependencies, and configurations. Docker images are run as containers, providing a consistent environment for microservices across different stages of development and deployment.

How are Docker containers deployed and orchestrated?

Deployment and orchestration of Docker containers can be done using container orchestration tools, such as Kubernetes or Docker Swarm. These tools automate container deployment, scaling, and management tasks, facilitating seamless and efficient operation of containerized microservices.

What are some best practices for using Docker with microservices?

Some best practices for using Docker with microservices include:

- Minimize image size by using suitable base images and removing unnecessary files.

- Use a layered architecture for Docker images to accelerate build times.

- Apply consistent tagging and versioning for images.

- Implement logging and monitoring solutions.

- Adopt container orchestration tools for managing and scaling containers.

Can Docker be used with no-code platforms like AppMaster?

Yes, Docker can be used with no-code platforms like AppMaster for deploying scalable and efficient backend and web applications. AppMaster enables users to visually create applications and generate source code, which can be containerized using Docker for seamless development and deployment.

How does Docker help with monitoring and scaling microservices?

Docker simplifies monitoring and scaling microservices by integrating with monitoring tools, making better use of system resources, and working with container orchestration platforms that scale services up and down. Docker can also help ensure application performance and consistency by providing a standardized environment for running and managing microservices.