Debugging Intelligence: Tips and Tricks for Coding AI Tools

Master the art of debugging AI tools with expert tips and tricks for coding AI tools. Enhance the performance and accuracy of your AI applications.

Debugging is a critical component of software development, a systematic process to diagnose and fix bugs, performance bottlenecks, and other issues that prevent software from functioning correctly. When it comes to Artificial Intelligence (AI) tools, the complexity of debugging increases manifold due to the unique challenges posed by these data-driven systems.

Unlike traditional software, AI tools depend heavily on the quality and intricacies of the data they are trained on. They involve sophisticated algorithms that learn from this data and make predictions or take actions based on their learning. Consequently, debugging AI requires both a technical understanding of programming and software engineering and a grasp of the particular AI domain, be it machine learning, deep learning, natural language processing, or others.

At the heart of AI tool debugging is the pursuit of transparency and reliability. AI developers and engineers strive to demystify the 'black box' nature of AI applications to ensure they perform as expected and can be trusted with the tasks they are designed for. This involves rigorous testing of the AI models, thorough inspection of the data pipelines, validation of results, and continuous monitoring of the deployed AI applications.

To effectively debug AI tools, one has to navigate through complex layers of abstraction — ranging from raw data preprocessing, feature extraction, and model training to hyperparameter tuning and model deployment. It is essential to methodically track down the source of any unexpected behavior or outcome, which could stem from numerous factors such as algorithmic errors, data corruption, or model overfitting.

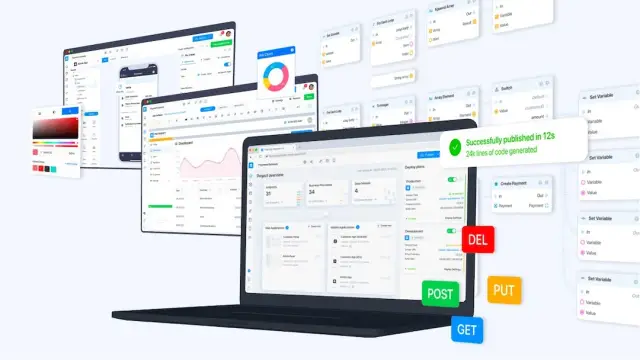

In this intricate detection and correction process, tools and practices like version control, interactive development environments, visualization tools, and modular coding play a pivotal role. Moreover, embracing a no-code platform like AppMaster can facilitate rapid development and debugging of AI tools by providing a visual development environment and automating many routine coding tasks.

As we delve deeper into the nuances of debugging AI tools, it is crucial to understand that it is an iterative and often complex endeavor, one that demands patience, skill, and a keen analytical mind. The next sections will explore the challenges particular to AI, strategies for effective debugging, the role of automation and human insight, and real-world case studies that highlight the practical applications of these techniques.

Understanding AI-Specific Debugging Challenges

AI systems possess unique characteristics that make debugging a particularly intricate affair. Unlike traditional software, where bugs typically stem from logic errors or issues in the codebase, AI systems intertwine code with data and learning algorithms. This blend introduces a complex set of challenges that require specialized debugging techniques.

The behavior of an AI model is largely determined by the data it's trained on. Thus, AI-specific debugging often begins with a thorough examination of this data. A common issue is the presence of biases in the training set, which can lead to skewed or unfair predictions. Debugging then requires identifying these biases and understanding their root causes and ramifications to rectify the problem without introducing new issues.

Another significant challenge in AI debugging is dealing with the stochastic nature of many AI algorithms. Outcomes can vary even with the same input data, due to random initializations or inherent variability in training processes like stochastic gradient descent. Consequently, replicating issues can be frustratingly difficult, and one must employ statistical methods or fix initial seeds to ensure consistent behavior for a thorough investigation.
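As a minimal sketch of seed fixing, the toy function below stands in for a training run. The name `run_training` and its update rule are illustrative assumptions, not part of any framework; the point is only that a seeded random generator makes a stochastic run replayable.

```python
import random

def run_training(seed: int, steps: int = 5) -> list[float]:
    """Toy stand-in for a training run: returns a 'loss' per step.

    A private random.Random instance keeps the run independent of
    any other code that touches the global random state.
    """
    rng = random.Random(seed)
    weight = rng.uniform(-1.0, 1.0)  # random initialization
    losses = []
    for _ in range(steps):
        noise = rng.gauss(0.0, 0.1)       # stands in for mini-batch sampling noise
        weight -= 0.5 * (weight + noise)  # noisy step toward zero
        losses.append(weight * weight)
    return losses

# Same seed -> identical runs, so a failure can be replayed exactly.
assert run_training(seed=42) == run_training(seed=42)
```

In a real project, each library that draws random numbers typically needs its own seed set as well, and some hardware-level operations may remain nondeterministic even then.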

Overfitting and underfitting are two sides of the same coin, and both complicate the debugging process. Overfitting occurs when a model is too complex and learns to memorize the training data, including noise and outliers, rather than generalizing from patterns. Conversely, underfitting results from overly simplistic models that fail to capture the underlying structure in the data. Debugging these problems involves tweaking the model's complexity, often done through cross-validation and regularization techniques.
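To make the interplay of cross-validation and regularization concrete, here is a small sketch. It uses a one-dimensional ridge regression through the origin purely as a stand-in model; the function names and the fold assignment (every k-th point) are assumptions chosen for brevity.

```python
def fit_ridge_1d(xs, ys, lam):
    """Closed-form 1-D ridge regression through the origin:
    w = sum(x*y) / (sum(x*x) + lam). Larger lam shrinks w toward zero."""
    return sum(x * y for x, y in zip(xs, ys)) / (sum(x * x for x in xs) + lam)

def mse(w, xs, ys):
    """Mean squared error of the prediction w * x."""
    return sum((w * x - y) ** 2 for x, y in zip(xs, ys)) / len(xs)

def k_fold_cv(xs, ys, lam, k=3):
    """Average validation MSE over k folds (fold i holds out every k-th point)."""
    scores = []
    for fold in range(k):
        pairs = list(enumerate(zip(xs, ys)))
        train = [p for i, p in pairs if i % k != fold]
        val = [p for i, p in pairs if i % k == fold]
        w = fit_ridge_1d([x for x, _ in train], [y for _, y in train], lam)
        scores.append(mse(w, [x for x, _ in val], [y for _, y in val]))
    return sum(scores) / k
```

Comparing `k_fold_cv` across several values of `lam` shows which regularization strength generalizes best to held-out data: too little can let the model chase noise, while too much pushes it toward underfitting.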

Model interpretability also poses a substantial challenge. Some AI models, such as deep neural networks, are often called "black boxes" because of the difficulty in understanding exactly how they make decisions. This can be a severe obstacle in debugging, as it’s crucial to understand the reason behind a model’s output to address the underlying issue. Techniques like feature importance analysis, model distillation, and visualization of network layers can sometimes shine a light on the dark corners of these complex models.

Finally, the rapidly evolving nature of AI tools and frameworks can make debugging an ever-moving target. With frequent updates and emerging best practices, developers must constantly stay on their toes to ensure that they're not facing issues that have already been resolved in newer releases, or that their debugging tools are in line with the latest advancements in the field.

Debugging AI tools requires a blend of domain expertise, methodical analysis, and often a bit of creativity. As an AI developer, understanding these AI-specific challenges and preparing to approach them methodically can greatly enhance the debugging process, leading to more reliable AI applications.

Setting up a Reliable Debugging Environment

Developing AI tools requires meticulous attention to detail during the creation phase and when problems arise. To effectively debug AI systems, developers need a systematic environment to handle the complexity of AI algorithms and datasets. The following techniques will guide you in establishing such an environment, enhancing your ability to identify, isolate, and resolve issues within AI tools.

Firstly, create a version-controlled workspace for both your code and your datasets. Version control systems like Git help track changes and enable rollback to previous states if new bugs are introduced. Additionally, tools like DVC (Data Version Control) can be used specifically for managing and versioning datasets, which is crucial as data is often at the heart of issues in AI systems.

Ensure your debugging environment includes data validation. Data quality is paramount; it should be verified for correctness, consistency, and absence of bias before being fed into the model. A reliable setup will incorporate automated scripts that check data integrity at regular intervals or before each training session. This preemptive measure can help prevent many issues related to data handling.
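An automated integrity check of this kind can be quite small. The sketch below assumes rows arrive as dictionaries and that a hypothetical `schema` maps column names to expected Python types; a real pipeline would likely add range, uniqueness, and distribution checks on top.

```python
from typing import Any

def validate_rows(rows: list[dict[str, Any]], schema: dict[str, type]) -> list[str]:
    """Return a list of human-readable problems; an empty list means the data passed."""
    problems = []
    for i, row in enumerate(rows):
        for column, expected_type in schema.items():
            value = row.get(column)
            if value is None:
                problems.append(f"row {i}: missing value for '{column}'")
            elif not isinstance(value, expected_type):
                problems.append(
                    f"row {i}: '{column}' is {type(value).__name__}, "
                    f"expected {expected_type.__name__}"
                )
    return problems
```

Running such a function before each training session, and refusing to train when the returned list is non-empty, turns data problems into early, explicit failures rather than silent model degradation.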

Another key factor is setting up experiment tracking. AI development involves many experiments with different hyperparameters, datasets, and model architectures. Tools like MLflow, Weights & Biases, or TensorBoard allow you to track, visualize, and compare different experiments. This systematic approach aids in understanding model behavior and identifying why certain changes might have led to bugs.
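The core idea behind experiment tracking can be illustrated without any particular tool. The in-memory tracker below is a hypothetical sketch, not the API of MLflow, Weights & Biases, or TensorBoard: it records parameters and metrics per run and reports which parameters changed between two runs.

```python
from dataclasses import dataclass, field

@dataclass
class Run:
    params: dict
    metrics: dict

@dataclass
class ExperimentTracker:
    runs: list = field(default_factory=list)

    def log(self, params: dict, metrics: dict) -> int:
        """Store a run and return its index as a run id."""
        self.runs.append(Run(dict(params), dict(metrics)))
        return len(self.runs) - 1

    def best(self, metric: str) -> int:
        """Run id with the highest value of `metric`."""
        return max(range(len(self.runs)), key=lambda i: self.runs[i].metrics[metric])

    def changed_params(self, a: int, b: int) -> dict:
        """Parameters whose values differ between run a and run b."""
        pa, pb = self.runs[a].params, self.runs[b].params
        keys = sorted(set(pa) | set(pb))
        return {k: (pa.get(k), pb.get(k)) for k in keys if pa.get(k) != pb.get(k)}
```

When a metric regresses between two runs, `changed_params` narrows the search to the settings that actually differ, which is often the fastest route to the cause.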

Furthermore, leverage continuous integration and testing practices. These are not just for traditional software development; they are equally important in AI. Automated testing can ensure that small pieces of the AI system work as expected after changes. Continuous integration servers can run your data validation, training scripts, and tests automatically on new commits to the codebase, alerting you to issues immediately.

Visualization tools form an integral part of debugging AI applications. For instance, using tools to visualize the computational graph of a neural network can help you see where things might be going wrong. Similarly, visualizing the data distributions, model predictions versus actual results, and training metrics can highlight discrepancies that may point to bugs.

In the end, complement the technological tools with comprehensive documentation that includes details about data sources, model architectures, assumptions, experiment results, and troubleshooting measures taken. This resource will prove invaluable for current debugging and future maintenance and updates, offering clarity and continuity in your development efforts.

In conjunction with these strategies and the no-code capabilities of AppMaster, developers can significantly reduce AI system bugs. The platform's visual tools and automated code generation simplify aspects of AI development that can otherwise be error-prone, assisting developers in creating, deploying, and maintaining high-quality AI applications with ease.

Debugging Strategies for Machine Learning Models

Machine learning (ML) models can sometimes feel like black boxes, presenting unique challenges when it comes to debugging. Unlike systematic logic errors that often plague traditional software, ML models suffer from problems rooted in data quality, model architecture, and training procedures. To effectively debug ML models, developers must employ a strategic approach addressing these unique complexities.

Start with a Solid Foundation: Data Verification

Before delving into the computational aspects of debugging, it's crucial to ensure your data is correct and well-prepared. Issues like missing values, inconsistent formatting, and outliers can significantly skew your model's performance. This first step involves rigorous data cleaning, normalization, and augmentation practices, as well as sanity checks for data distribution that could highlight potential biases or errors in the dataset.
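A quick sanity check for a single numeric column might look like the following sketch. It assumes missing entries are represented as `None` and uses the common 1.5 × IQR rule for outliers; both choices are assumptions for illustration, and other conventions are equally valid.

```python
import statistics

def summarize_column(values):
    """Count missing entries and flag outliers with the 1.5 * IQR rule."""
    present = [v for v in values if v is not None]
    q1, _, q3 = statistics.quantiles(present, n=4)  # quartile cut points
    iqr = q3 - q1
    low, high = q1 - 1.5 * iqr, q3 + 1.5 * iqr
    return {
        "missing": len(values) - len(present),
        "outliers": [v for v in present if v < low or v > high],
    }
```

A flagged value is not necessarily wrong; it is a prompt to look at the record and decide whether it reflects a data entry error, a unit mismatch, or a genuine rare case.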

Simplify to Clarify: Model Reduction

When faced with a problematic model, reduce its complexity to isolate issues. By scaling down the number of features or simplifying the architecture, you can often pinpoint where things go awry. If a reduced model still displays issues, the fault may lie within the data or the features used. Conversely, if simplification resolves the problem, the complexity of the original model could be the culprit.

Visualize to Understand: Error Analysis

Leverage visualization tools to analyze error patterns. Plotting learning curves can indicate model capacity issues such as overfitting or underfitting. Examining confusion matrices and receiver operating characteristic (ROC) curves for classification tasks helps identify classes the model struggles with, suggesting areas that may require more nuanced features or additional data to improve performance.
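The numbers behind a confusion matrix are simple to compute by hand, as this sketch shows. The function names are illustrative; plotting libraries would normally render the same counts as a heat map.

```python
from collections import Counter

def confusion_counts(y_true, y_pred):
    """Count (actual, predicted) pairs; pairs with differing labels are errors."""
    return Counter(zip(y_true, y_pred))

def per_class_recall(y_true, y_pred):
    """Fraction of each actual class that the model labeled correctly."""
    counts = confusion_counts(y_true, y_pred)
    totals = Counter(y_true)
    return {label: counts[(label, label)] / totals[label] for label in totals}
```

Classes with low recall, and the specific wrong labels they are confused with, indicate where more data or better features are likely to help.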

Ensure Reproducibility: Version Control & Experiment Tracking

For debugging to be effective, each experiment needs to be reproducible. Version control systems like Git should be used not just for code, but also for tracking changes in your datasets and model configurations. Experiment tracking tools are essential for comparing different runs, understanding the impact of modifications, and systematically approaching model improvement.

Traditional Techniques: Unit Testing & Continuous Integration

Applying software engineering best practices to ML development is often overlooked but crucial. Implement unit tests for data processing pipelines and individual model components to ensure that they function as expected. Continuous integration (CI) pipelines help in running these tests automatically, catching errors early in the development cycle.
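As an example of unit testing a data processing step, the sketch below tests a hypothetical `normalize` function with Python's built-in `unittest` module. The function and its handling of constant input are assumptions made for illustration.

```python
import unittest

def normalize(values):
    """Scale values linearly into [0, 1]; constant input maps to all zeros."""
    low, high = min(values), max(values)
    if high == low:
        return [0.0 for _ in values]
    return [(v - low) / (high - low) for v in values]

class NormalizeTests(unittest.TestCase):
    def test_output_range(self):
        result = normalize([3, 7, 5])
        self.assertEqual(min(result), 0.0)
        self.assertEqual(max(result), 1.0)

    def test_constant_input_does_not_divide_by_zero(self):
        self.assertEqual(normalize([4, 4, 4]), [0.0, 0.0, 0.0])
```

Saved in a test file, these tests can be run with `python -m unittest`, and a CI pipeline can run the same command on every commit.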

Debugging by Probing: Use of Diagnostic Tools

Diagnostic tools are valuable for digging deeper into model behavior. Techniques like feature importance analysis and partial dependence plots reveal insights into which features most significantly influence the model's predictions. Additionally, model distillation, where a simpler model is trained to approximate the predictions of a complex one, can highlight which aspects of the training data the original model is focusing on, which might lead to discovering mislabeled data or overemphasized features.
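One widely used form of feature importance analysis is permutation importance: shuffle one feature across rows and measure how much accuracy drops. The sketch below assumes the model is any callable that maps a feature tuple to a label; that interface, and the function names, are assumptions for illustration.

```python
import random

def accuracy(model, rows, labels):
    """Fraction of rows for which the model's output equals the label."""
    return sum(model(r) == y for r, y in zip(rows, labels)) / len(rows)

def permutation_importance(model, rows, labels, n_features, seed=0):
    """Drop in accuracy when one feature column is shuffled across rows.

    `rows` is a list of tuples; a larger drop means the model leans
    more heavily on that feature.
    """
    rng = random.Random(seed)
    baseline = accuracy(model, rows, labels)
    importances = []
    for j in range(n_features):
        column = [r[j] for r in rows]
        rng.shuffle(column)
        shuffled = [r[:j] + (v,) + r[j + 1:] for r, v in zip(rows, column)]
        importances.append(baseline - accuracy(model, shuffled, labels))
    return importances
```

A feature with unexpectedly high importance can point to leakage or a mislabeled column, while an importance near zero for a feature expected to matter suggests the model is not using it.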

The Power of Ensembles: Combining Models for Insight

Debugging can also come from model ensembles. By combining various models, you can evaluate their consensus and identify any individual model that significantly deviates from others, which might be symptomatic of an issue within that particular model's training or data processing.
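A simple way to spot a deviating ensemble member is to measure how often each model disagrees with the majority vote. The sketch below assumes predictions are given as a dictionary from a model name to a list of labels; ties in the vote are resolved by `Counter.most_common`, which is an arbitrary choice.

```python
from collections import Counter

def disagreement_rates(predictions):
    """For each model, the fraction of samples where it differs from the majority vote.

    `predictions` maps a model name to its list of predicted labels.
    """
    names = list(predictions)
    n_samples = len(predictions[names[0]])
    misses = Counter()
    for i in range(n_samples):
        votes = Counter(predictions[name][i] for name in names)
        majority = votes.most_common(1)[0][0]
        for name in names:
            if predictions[name][i] != majority:
                misses[name] += 1
    return {name: misses[name] / n_samples for name in names}
```

A model with a much higher rate than its peers is not necessarily wrong, but it is a good first candidate for inspecting training data and preprocessing.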

Human-Centered Debugging: Engage Domain Experts

Humans should not be out of the loop when debugging AI. Engage with domain experts who understand the data and can provide valuable insights into whether model outputs make sense. They can help identify inaccuracies that might go unnoticed by purely data-driven metrics.

Iterative Improvement Is Key

In the end, debugging ML models is an iterative process. Each loop gives you a deeper understanding of your model and the data it learns from. These strategies provide guidelines, but they must be adapted to the context of your specific project and the type of ML model you are developing. Remember to use no-code platforms like AppMaster, which offer tools to help streamline the initial stages of model development and provide a foundation for further debugging and refinement.

Common Pitfalls and How to Avoid Them

As AI grows in complexity and diversity, developers frequently confront unique challenges that can derail the debugging process. Recognizing and circumventing these pitfalls is crucial for creating and maintaining effective AI tools. Here, we delve into some of the most common pitfalls in AI tool debugging and present strategies to avoid them.

Ignoring Model Generalization

One of the most prevalent issues in AI development is creating a model that performs exceptionally well on your training data but fails to generalize to new, unseen data — overfitting. To avoid this, it’s essential to:

- Utilize a comprehensive dataset that reflects the real-world situations the model will encounter.

- Employ techniques like cross-validation and regularization.

- Constantly test your models on validation and test datasets.

Overlooking Data Quality

Garbage in, garbage out; this truism is especially relevant in AI. Poor data quality can completely skew your model's performance. To side-step this pitfall:

- Ensure thorough data cleaning and preprocessing.

- Implement anomaly detection to catch outliers and incorrect values.

- Focus on collecting diverse and representative data to avoid biases.

Lack of Version Control

Without meticulous version control for your AI models and datasets, reproducing results and tracking changes becomes burdensome. Embrace tools for versioning and experiment management to:

- Keep a detailed log of data revisions, model parameters, and code changes.

- Organize your development process to maintain consistency across debugging sessions.

Underestimating the Complexity of the Model

Complex models are not always superior — sometimes, they’re just more difficult to debug. Start simple and gradually increase complexity only if needed. Relying on simpler models can often lead to more transparent and interpretable results, making the debugging process more manageable.

Neglecting Debugging Tools

Forgoing the use of specialized debugging tools can lead to significantly longer troubleshooting times. Utilize platforms like AppMaster to streamline development with its visual debugging tools and features that enable developers to visualize business processes and data flow without delving into code.

By anticipating these common pitfalls and implementing strategies to counteract them, developers can mitigate many of the frustrations commonly associated with debugging AI tools and pave the way for smoother, more efficient debugging sessions.

Automated Tools vs Human Intuition in AI Debugging

In AI, debugging extends beyond systematic logic errors to encompass the nuanced interpretations of data and model behaviors. The rise of automated debugging tools suggests a transformation in how developers approach problem-solving in AI. Yet, despite sophisticated advancements, human intuition remains an indispensable asset throughout the debugging process. This section delves into the dynamic interplay between automated tools and human intuition in the arena of AI debugging.

Automated tools in AI debugging serve as the first line of defense. They are adept at identifying straightforward bugs, such as syntax errors, exceptions, and runtime errors, which may otherwise consume valuable time if addressed manually. These tools, powered by AI themselves, can streamline testing by swiftly analyzing vast arrays of code to pinpoint anomalies. Technologies such as predictive analytics and anomaly detection algorithms bear the potential to discern patterns and predict issues before they fully manifest, effectively acting as a preventative measure in the troubleshooting process.

However, AI systems are defined by their complexity and uniqueness; they learn and adapt based on data. Automated tools can falter when faced with the abstract nature of AI issues, such as those related to data quality, model architecture, or the subtleties of hyperparameter tuning. Here is where human intuition and expertise step in. Human developers bring their technical knowledge, nuanced understanding of context, ability to hypothesize about unstructured problems, and creative problem-solving skills to the table.

Sometimes, the sheer unpredictability of AI requires a human's informed guesswork to root out less obvious discrepancies. For example, when an AI model generates unexpected results, automated tools can report the anomaly, but it's often the developers' intuition that guides them to the underlying cause — be it an issue with training data, model overfitting, or something more surreptitious like a subtle logic bug in the data pre-processing stage.

Moreover, human oversight is critical when interpreting the results of automated debugging. Human judgment is needed to prioritize which bugs are worth pursuing based on their potential impact on the system. Furthermore, debugging AI systems can introduce ethical considerations — such as privacy violations or biased outcomes — that automated tools are inherently unequipped to handle. With their human empathy and ethical reasoning, developers are best positioned to navigate these sensitive areas.

It is important to recognize that automated tools aim not to replace human developers but to augment their abilities. For instance, within the AppMaster platform, automated tools simplify the no-code development and debugging process. The platform's features enable visual debugging, allowing developers to observe and interact with data flows and logic paths more intuitively. Thus, AppMaster is a testament to the synergy between automated systems and human insights, presenting a cooperative problem-solving model that harnesses both worlds' strengths.

While automated tools provide efficiency and speed in routine debugging tasks, they do not negate the need for human intuition which remains crucial for handling the intricacies of AI systems. A harmonious blend of automated software and human expertise not only speeds up the debugging process but also ensures a more reliable and performant AI tool as the end result. As AI continues to evolve, so too will the methodologies and repositories of tools aimed at demystifying its complexities — always with the human element at their core.

Case Studies: Debugging AI in Action

Even the most meticulously designed AI systems can exhibit unexpected behavior or errors once they interact with real-world data and scenarios. Through examining case studies, developers can gain insights into successful debugging strategies and thus refine their approach to creating more reliable and powerful AI tools. Let's delve into some notable case studies that shed light on the complexities of AI debugging.

- Case Study 1: Diagnosing Overfitting in a Predictive Model: A retail company developed a machine learning model to forecast future product demand based on historical sales data. However, the model's predictions weren’t aligning with actual outcomes. Developers discovered the model was overfitting during the training phase, learning noise and anomalies in the training data rather than the underlying patterns. They used techniques like cross-validation and introduced a regularization parameter to mitigate overfitting, which resulted in a model that generalizes better to unseen data.

- Case Study 2: Tackling Data Bias in Facial Recognition Software: An AI company faced public backlash when their facial recognition software exhibited bias, performing poorly with certain demographic groups. On debugging, the team realized the training data lacked diversity. By collecting more representative data and employing fairness algorithms, they improved the accuracy and reduced bias in their software, demonstrating the importance of data quality and diversity in AI model training.

- Case Study 3: Improving Natural Language Processing Accuracy: A startup developed a natural language processing (NLP) tool that wasn’t classifying customer feedback accurately. The debugging process revealed that the word embeddings used in the model were insufficient in capturing the context of certain industry-specific terms. The startup significantly enhanced the tool's accuracy in understanding customer sentiments by customizing the word embeddings and including domain-specific data in their training sets.

- Case Study 4: Debugging Autonomous Vehicle Algorithms: A company specializing in autonomous vehicles encountered critical issues where the car misinterpreted stop signs in certain weather conditions. Debugging revealed that the vision algorithms relied too heavily on color detection. By integrating more contextual cues and sensor fusion techniques, the engineers were able to make the AI’s interpretation of traffic signs much more reliable.

Each case study emphasizes a unique aspect of AI debugging, highlighting the diverse challenges developers may encounter. Whether it is dataset quality, model complexity, algorithm biases, or the adaptability of a system, recognizing and addressing these issues through strategic debugging is essential. While case studies provide valuable lessons, platforms such as AppMaster empower developers by reducing the complexity of building and debugging AI tools, enabling even those with little coding experience to learn and apply the lessons from real-world AI challenges.

Integrating Debugging Techniques with AppMaster

When developing AI tools, proper debugging is essential to ensure your applications' performance, reliability, and accuracy. By now, developers have many techniques for tackling traditional debugging scenarios. However, AI introduces complex challenges due to its data-centric nature and often opaque decision-making processes. Given its immense potential to simplify and enhance the development process, integrating AI debugging with no-code platforms like AppMaster offers a seamless and efficient pathway towards ironing out those intricate issues that might arise from AI's inherent complexity.

AppMaster is a no-code development platform that excels at streamlining the creation of web, mobile, and backend applications. By utilizing AppMaster, developers are provided a powerful set of tools that can bolster their AI debugging strategies:

- Visualization: Debugging AI often requires an understanding of data relationships and model behavior. AppMaster furnishes developers with visual tools, such as the business processes (BPs) designer, which can translate complex logic into understandable and modifiable visual components. This is particularly useful in AI applications where workflows and decision points must be visualized to assess the correctness of data processing and AI model inference.

- Automated Testing: Debugging is not a one-and-done process – it requires continuous reevaluation. The platform provides automated testing capabilities that enable developers to create and run tests efficiently after every change, ensuring that the AI's core functionality remains intact and that any potential regression is caught early.

- Modularity: AI debugging may call for iteratively overhauling certain parts of an application while leaving others untouched. AppMaster promotes modularity through its ability to segment applications into different microservices. Specific areas of an AI tool can be isolated for focused debugging without hampering the full application's operation.

- Data Integrity: The data fueling AI tools needs to be validated rigorously. Errors in data will inevitably lead to faulty AI behaviors. AppMaster incorporates mechanisms for ensuring data integrity, including built-in validation rules that can preempt typical data-related issues before they cascade into larger, more complex problems.

- Collaboration Features: Often, debugging an AI tool is a team effort. The platform's collaborative environment allows multiple developers to work on the project simultaneously, making it easier to troubleshoot issues as they surface and to validate fixes collectively.

- Deployment and Hosting: With AppMaster, developers can deploy their applications at the click of a button. This rapid deployment allows fast iteration cycles for testing and debugging AI applications in a staging or production-like environment, ensuring that real-world scenarios are accounted for.

- Analytics and Monitoring: Post-deployment, keeping tabs on an AI application's performance is vital to spot anomalies that may signify bugs. AppMaster can offer analytics and monitoring solutions to track the application's behavior, providing empirical data useful for ongoing debugging efforts.

Furthermore, the no-code nature of AppMaster expedites the debugging process by abstracting the intricacies of code, enabling developers to focus on the logic and performance of their AI tools rather than being bogged down by syntax or structural errors. The platform's capability to auto-generate documentation and Open API specifications also aids in the debugging process by ensuring that the entire team is always on the same page regarding the tool's current build and behavior.

In the journey of AI tool development, incorporating platforms like AppMaster stands as a testament to the harmonious blend of no-code agility and sophisticated AI debugging approaches. It illustrates the future of software development, where the intricacies of debugging are not a bottleneck but rather an orchestrated part of the developmental symphony.

Tips for Efficient Debugging Workflows

Streamline Your Debugging Tools

One of the first steps towards an efficient debugging workflow is to equip yourself with the right tools. You’ll need programs that can handle complex data sets and algorithms, such as interactive debuggers, visual data analysis tools, and automated testing suites. An integrated development environment (IDE) that offers AI-specific plugins and extensions can save time and enhance productivity. When tools are consolidated into a seamless workflow, they enable more rapid identification and resolution of bugs.

Embrace Version Control

Version control is not just about keeping track of code changes; it is also essential for managing the datasets your AI operates on. Tools like Git can be used to manage both code and data (large datasets are usually better served by a dedicated data versioning tool such as DVC), allowing for better tracking of changes and the ability to roll back to a previous state when something goes wrong. Additionally, you can branch out experimental features or data transformations, so they don’t interfere with the main development line.

Prioritize Bugs Effectively

Not all bugs are created equal. Some affect functionality significantly while others have a minimal impact on the AI application. Evaluating and prioritizing bugs based on their severity and impact on the software’s performance is crucial to maintaining an efficient workflow. The Eisenhower matrix, which sorts tasks into four quadrants by urgency and importance, can help categorize and prioritize issues.

Automate Repetitive Tasks with Scripts

Debugging often involves repetitive tests and checks. By writing scripts to automate these tasks, you can save valuable time and reduce human error. Scripts can run through predefined debugging procedures, allowing you to focus your attention on the more intricate and unique debugging challenges. Continuous integration tools can help you automatically trigger such scripts based on code or data commits.

Document Everything Rigorously

Documentation is often treated as an afterthought, but having detailed records of what each part of your AI system should do, alongside notes on past bugs and fixes, can be a lifesaver during debugging. This practice enables any developer to quickly understand decisions made earlier in the development cycle. Moreover, documenting debugging sessions can help identify recurring issues and understand your AI tools' long-term behavior.

Establish Clear Communication Channels

In a team environment, effective communication is paramount. Clear channels for reporting, discussing, and resolving bugs must be established. This includes regular meetings, concise reporting formats, and shared dashboards. In addition, creating a culture that encourages open discussion about bugs can promote a more collaborative and efficient approach to resolving them.

Leverage Automated Logging and Monitoring

Implementing automated logging and monitoring tools can provide consistent and objective insights into your AI's behavior. These tools can detect anomalies and performance issues in real-time, which is essential for both immediate troubleshooting and long-term maintenance. Moreover, advanced monitoring can help identify patterns that lead to bugs, providing valuable data to prevent them in future development cycles.
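A lightweight starting point is to log every prediction and escalate the suspicious ones. The sketch below uses Python's standard `logging` module; the logger name, the `log_prediction` helper, and the 0.6 confidence threshold are assumptions for illustration.

```python
import logging

logger = logging.getLogger("model_monitor")

def log_prediction(features, label, confidence, min_confidence=0.6):
    """Log every prediction; escalate low-confidence ones to WARNING.

    Returns the logging level used so callers can react to it.
    """
    level = logging.WARNING if confidence < min_confidence else logging.INFO
    logger.log(
        level, "prediction=%s confidence=%.2f features=%s", label, confidence, features
    )
    return level
```

With handlers configured through `logging.basicConfig` or a log shipper, the WARNING entries become a ready-made queue of cases worth reviewing by hand.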

Use Feature Flags for Safer Deployment

Introducing feature flags allows you to roll out new functionalities gradually and control who gets access to them. This practice can contain the impact of undetected bugs by exposing them to a small user base initially. Furthermore, if a bug is identified post-release, feature flags enable you to roll back easily without affecting other aspects of the AI application.
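One common way to implement a gradual rollout is to hash each user into a stable bucket. The following is a minimal sketch under that assumption; the function name `is_enabled` and the flag and user identifiers are hypothetical.

```python
import hashlib

def is_enabled(flag: str, user_id: str, rollout_percent: int) -> bool:
    """Deterministically place each user in a bucket from 0 to 99 and
    enable the flag for buckets below `rollout_percent`."""
    digest = hashlib.sha256(f"{flag}:{user_id}".encode("utf-8")).hexdigest()
    bucket = int(digest, 16) % 100
    return bucket < rollout_percent
```

Because the bucket depends only on the flag name and user id, a user who sees the new model at 10% keeps seeing it at 50%, and setting the percentage to 0 acts as an immediate rollback.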

Continuous Learning and Adaptation

Debugging is not just about fixing what’s broken. It’s about learning from the mistakes and improving processes. An efficient debugger will keep abreast of new tools, techniques, and platforms that can simplify and enhance their workflow. For instance, platforms like AppMaster offer a no-code solution for rapidly developing and deploying AI tools with integrated capabilities for debugging and monitoring, significantly reducing the resources spent on these tasks.

Maintaining a Healthy Debugging Mindset

Finally, maintain a positive and inquisitive mindset. Seeing bugs as challenges rather than problems can be motivating. Remember that each bug is an opportunity to understand the AI system better and improve its robustness. Maintain patience, and always approach debugging methodically yet creatively.

By implementing these tips and continuously refining your debugging processes, you can ensure a smoother and more efficient workflow, ultimately leading to the development of reliable and high-performing AI applications.

Maintaining AI Tools Post-Debugging

After successfully navigating the labyrinth of debugging your AI tools, the journey doesn't terminate there. Like a finely crafted timepiece, AI tools require continuous maintenance and oversight to ensure they operate at peak performance. Here, we delve into a sensible approach for maintaining AI tools after the rigorous debugging process, offering you strategies to future-proof your investment and ensure persistent, consistent results.

Continuous Monitoring for Model Performance

AI models are susceptible to 'model drift': their accuracy degrades as live data and real-world circumstances diverge from what the model was trained on. Implementing a mechanism for continuous performance monitoring is imperative. Such systems alert developers to decreases in accuracy or effectiveness, allowing for timely adjustments. This vigilance keeps models relevant and delivering valuable insights.
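
A minimal sketch of such a mechanism, assuming ground-truth labels eventually arrive for live predictions, is to compare rolling accuracy against a baseline measured at release. The `AccuracyDriftMonitor` class and its tolerance value are illustrative assumptions.

```python
from collections import deque


class AccuracyDriftMonitor:
    """Compares rolling accuracy on recent labelled predictions to a fixed baseline."""

    def __init__(self, baseline_accuracy, window=100, tolerance=0.05):
        self.baseline = baseline_accuracy    # accuracy measured at release time
        self.tolerance = tolerance           # assumed acceptable drop before alerting
        self.outcomes = deque(maxlen=window)

    def update(self, prediction, label):
        """Record whether one live prediction matched its eventual label."""
        self.outcomes.append(prediction == label)

    def rolling_accuracy(self):
        """Return accuracy over the window, or None if nothing has been recorded."""
        if not self.outcomes:
            return None
        return sum(self.outcomes) / len(self.outcomes)

    def drift_detected(self):
        """Alert only once the window is full, to avoid noisy early readings."""
        if len(self.outcomes) < self.outcomes.maxlen:
            return False
        return self.rolling_accuracy() < self.baseline - self.tolerance
```

When labels are delayed or unavailable, teams often monitor input feature distributions instead. This sketch covers only the labelled case.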

Regular Updates to Models and Data Sets

AI's appetite for data is insatiable, and its performance is intrinsically linked to the quality and volume of the information it ingests. Hence, feeding it fresh, high-quality data and revisiting and refining models are crucial. As new patterns emerge and old ones fade, your AI tools should evolve to stay attuned to these shifts. Periodic retraining with up-to-date data isn't just recommended; it's required to stay competitive and effective.

Ongoing Testing and Validation

While you've ironed out the kinks during the debug phase, there's no reason to put down the testing toolkit. Like a vigilant sentinel, you need to continually test and validate your AI models against new scenarios, edge cases, and datasets. Automated testing pipelines can greatly assist here, becoming your relentless allies in maintaining the resilience and reliability of your AI tools.
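
To make the idea of an automated testing pipeline concrete, the sketch below runs a model against named test suites and reports any suite that falls below its accuracy threshold. The `toy_model` function stands in for a real model, and the suite names and thresholds are assumptions for illustration.

```python
def evaluate(predict, cases):
    """Return the fraction of (input, expected) cases the model gets right."""
    correct = sum(1 for features, expected in cases if predict(features) == expected)
    return correct / len(cases)


def validation_gate(predict, suites, min_accuracy):
    """Return the names of suites whose accuracy is below the required threshold."""
    return [
        name
        for name, cases in suites.items()
        if evaluate(predict, cases) < min_accuracy[name]
    ]


def toy_model(score):
    """Hypothetical stand-in for a trained classifier."""
    return "positive" if score >= 0.5 else "negative"


SUITES = {
    "regression": [(0.9, "positive"), (0.1, "negative"), (0.7, "positive")],
    "edge_cases": [(0.5, "positive"), (0.49, "negative")],
}
THRESHOLDS = {"regression": 1.0, "edge_cases": 1.0}

failures = validation_gate(toy_model, SUITES, THRESHOLDS)
```

In a CI job, a non-empty `failures` list would typically fail the build, so a retrained model cannot ship if it regresses on known scenarios or edge cases.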

Documentation and Version Tracking

Maintaining meticulous documentation isn't simply an exercise in due diligence; it's a beacon for anyone who will interact with your system in the future. Clear records of changes, decisions, and architecture alterations create a roadmap that guides maintenance teams, reduces onboarding time for new developers, and helps considerably when unexpected issues arise.
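
One lightweight way to keep such records machine-readable is to log, for every released model, its version, a fingerprint of the training data, and the hyperparameters used. The `ModelRecord` fields and the sample values below are hypothetical. Dedicated experiment-tracking tools offer far more than this sketch.

```python
import hashlib
import json
from dataclasses import asdict, dataclass


def fingerprint(data):
    """Return a short content hash so a record pins the exact bytes of a dataset."""
    return hashlib.sha256(data).hexdigest()[:12]


@dataclass(frozen=True)
class ModelRecord:
    model_version: str
    data_fingerprint: str
    hyperparameters: dict
    notes: str


def to_changelog_line(record):
    """Serialize a record as one JSON line, suitable for an append-only log file."""
    return json.dumps(asdict(record), sort_keys=True)


# Hypothetical example values.
record = ModelRecord(
    model_version="1.4.0",
    data_fingerprint=fingerprint(b"feature_a,feature_b,label\n1,2,0\n"),
    hyperparameters={"learning_rate": 0.01, "epochs": 20},
    notes="Retrained after adding validation for missing values.",
)
line = to_changelog_line(record)
```

Keeping the data fingerprint next to the model version makes it possible to tell later whether an unexpected behavior change came from code, data, or configuration.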

Adapting to User Feedback and Market Changes

An AI tool, however technically proficient, must ultimately serve the needs and solve the problems of its users. Obtaining and acting on user feedback is paramount in ensuring that the AI remains relevant and user-centric. Similarly, the market it operates in is dynamic, and staying ahead necessitates that your AI adapts to regulatory changes, competitive pressures, and technological advancements.

Implementing Ethics and Bias Checks

AI's impact extends into ethical territory, and models can reproduce or amplify biases present in their training data. Ongoing audits and checks to correct data imbalances, remove prejudices, and ensure fair and ethical outputs are non-negotiable responsibilities for AI tool custodians.
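
As one example of a recurring bias check, the sketch below computes the demographic parity gap: the difference between the highest and lowest rate of positive predictions across groups. This is only one of several fairness metrics, chosen here for simplicity, and the function names and sample data are assumptions for illustration.

```python
from collections import defaultdict


def positive_rates(predictions, groups):
    """Return the share of positive (1) predictions for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += int(pred == 1)
    return {group: positives[group] / totals[group] for group in totals}


def demographic_parity_gap(predictions, groups):
    """Return the spread between the most and least favored groups."""
    rates = positive_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())


# Hypothetical audit sample: binary predictions with a group label per row.
sample_predictions = [1, 1, 0, 1, 0, 0, 1, 0]
sample_groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
gap = demographic_parity_gap(sample_predictions, sample_groups)
```

A large gap does not prove unfair treatment on its own, but it is a useful signal that the data or model deserves a closer audit.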

The Role of AppMaster

Platforms like AppMaster can serve as valuable assets when maintaining AI tools post-debugging by providing a foundation for rapid, no-code application development and easy iteration. Seamlessly integrating AI functionalities with traditional app components helps balance innovation and stability. With its automated code generation, AppMaster helps minimize maintenance overhead, allowing teams to focus on value-adding activities such as model enhancement and performance optimization.

By heeding these strategies, you can foster a consistent level of performance for your AI tools while staying agile and prepared for the continuous evolution of the AI field. This proactive maintenance plan is not only about preserving functionality but is also about advancing and elevating your AI tools to meet and exceed the demands of tomorrow.

Conclusion: A Roadmap to Flawless AI Applications

In developing AI tools, debugging is an indispensable part of crafting intelligent and reliable applications. It's a process that demands both meticulous attention to technical detail and a broad understanding of complex systems. As we've delved into the sophisticated world of AI debugging, we've seen that it's not just about fixing errors. Rather, it's an opportunity to optimize performance, enhance accuracy, and ultimately build trust in the AI solutions we create.

The roadmap to flawless AI applications is clear but requires dedication and strategic execution. Begin with an understanding of the unique challenges presented by AI systems. Embrace the intricacies of data-driven behaviors, keep a vigilant eye on model performance, and use debugging as a lens to refine and comprehend the nuances of your AI. The tactics shared in this article, from setting up a reliable environment to selecting the right debugging strategies, guide you toward an efficient workflow.

AI tools can be highly complex, and no two debugging journeys will be the same. Therefore, maintaining a sense of flexibility and continuous learning is essential. Keep up to date with the latest trends, tools, and methodologies in AI debugging. And remember, the human element, your intuition and expertise, will always be a valuable asset when combined with the intelligence and automation provided by platforms like AppMaster. Here, you'll find a balance between the advanced capabilities of automated tools and the discernment of a seasoned developer.

Finally, the maintenance of AI applications post-debugging shouldn't be underestimated. You've worked hard to achieve a state of minimal bugs and smooth operations, and it's crucial to preserve this by monitoring, updating, and testing your systems consistently.

The roadmap to mastering AI tool debugging is not a linear path but a continual improvement cycle. It demands a synergy of cutting-edge technology, such as the no-code solutions provided by AppMaster, and the irreplaceable creative problem-solving skills of developers. By following this roadmap, developers can ensure that their AI applications aren't just functioning but are performing at the apex of their potential, providing value and innovation in a technologically dynamic world.

FAQ

Debugging AI tools involves the intricacies of data-driven behaviors, which means you must consider the quality of data, model architecture, and training processes while debugging.

Common challenges in debugging AI tools include overfitting, underfitting, data bias, and dealing with non-deterministic outputs.

A reliable AI debugging environment includes version control for code and data, thorough data validation, and tools for experiment tracking and visualization.

Effective debugging strategies include using a modular approach, simplifying the model, visualizing model behavior, and employing techniques like unit testing and continuous integration.

While automated tools can greatly aid the debugging process, human intuition plays a key role in understanding context, interpreting data, and making strategic decisions.

AppMaster provides a no-code platform that simplifies the development and debugging of AI applications with visual debugging tools and automated code generation.

You can keep debugging efficient by focusing on reproducibility, staying organized with documentation, and prioritizing the most impactful bugs.

Post-debugging maintenance should involve continuous monitoring, regular updates to the model and dataset, and ongoing testing to prevent new issues.

AI techniques can themselves be applied to automate certain aspects of the debugging process, such as anomaly detection and predictive maintenance.

Analyzing real-world case studies can provide insights into effective strategies and common errors, equipping you with practical knowledge for debugging AI tools.

Common pitfalls in debugging AI tools include neglecting data quality, ignoring model bias, over-complicating the model, and failing to validate outcomes against real-world scenarios.