Data Modeling in RDBMS: From Conceptual Design to Implementation

Master the process of data modeling in relational databases from concept to implementation. Understand the steps, techniques, design methodologies, and tools essential for efficient development.

Data modeling is a vital process in software development and database design. It involves creating a visual representation of an organization's data and the relationships between different entities. By effectively mapping out the structure, storage, and access methods for data elements, developers and analysts can ensure efficient organization and retrieval of data within a system.

Relational database management systems (RDBMS) rely heavily on data modeling to build well-structured and efficient databases. Data models in RDBMS help define the database structure, specifying the entities, attributes, relationships and constraints. A properly designed data model can improve data consistency, reduce redundancy, and optimize the performance of database operations.

This article provides an overview of the data modeling process in RDBMS, the different types of data models, and the techniques necessary for efficient and effective data modeling.

The Data Modeling Process

The process of data modeling involves several steps, which can roughly be grouped into the following stages:

- Requirements Analysis: The first step in data modeling is to gather and analyze business requirements. This involves understanding the purpose and objectives of the database, the data elements to be stored, and the relationships between them. It also includes identifying constraints, assumptions, and business rules that govern the use of data within the system.

- Design: Based on the requirements analysis, the data model is designed to fulfill the organization's specific needs. This involves choosing appropriate data structures, defining the entities, attributes, and relationships, and specifying constraints and other rules. Depending on the level of abstraction required, a data model can be designed at the conceptual, logical, or physical level.

- Validation: Once the data model is designed, it needs to be validated to ensure that it accurately represents the business requirements and meets desired performance and usability standards. Validation involves checking the model for errors, inconsistencies, and redundancies and confirming that it adheres to data modeling best practices.

- Implementation: After the data model is validated, it is used to guide the actual implementation of the database in a specific RDBMS. This includes creating the tables, establishing relationships, defining primary and foreign keys, and implementing constraints, triggers, and other database objects. Depending on the choice of RDBMS, certain tweaks and optimizations may be required to fine-tune the model for optimal performance.

- Maintenance: Once the database is implemented, the data model and associated documentation should be updated and maintained as the system evolves. This includes modifying the model to reflect changes in requirements, correcting errors, and applying optimizations for improved performance.

Types of Data Models

Three main types of data models are used in RDBMS: conceptual, logical, and physical. Each type of data model serves a different purpose and represents a different level of abstraction.

Conceptual Data Models

A conceptual data model is a high-level, abstract representation of the organization's data. It focuses on capturing the entities, their attributes, and relationships without specifying any implementation details. The main goal of conceptual data modeling is to clearly understand the business requirements and facilitate communication between stakeholders, such as business analysts, developers, and end-users.

Logical Data Models

A logical data model is a refinement of the conceptual data model, where entities, attributes, and relationships are further detailed and organized. Additional constraints and rules are defined in this stage, and data elements are organized into tables and columns. The logical data model is the basis for the physical data model, which focuses on the actual implementation details in a specific RDBMS.

Physical Data Models

A physical data model is the final step in the data modeling process and represents the actual implementation details in a specific RDBMS. It includes the technical specifications necessary for creating the database structure, such as table and column names, storage requirements, and index types. Physical data modeling focuses on optimizing the performance of database operations based on the specific features and characteristics of the chosen RDBMS.

Conceptual Data Modeling

Conceptual data modeling represents the first step in the data modeling process, focusing on the high-level, abstract view of an organization's data requirements. It involves identifying the key data entities, their attributes, and the relationships between them without going into specific details about data types or storage. The main goal of conceptual data modeling is to clearly understand the business requirements and form a solid foundation for the next stages of data modeling (logical and physical modeling).

Components of Conceptual Data Modeling

The primary components of conceptual data modeling are:

- Entities: Represent the key objects or concepts in the domain, such as customers, products, orders, or employees.

- Attributes: Define the properties of the entities, such as customer name, product price, order date, or employee ID.

- Relationships: Represent the associations between entities, such as a customer placing multiple orders, a product belonging to a category, or an employee working in a specific department.

Creating a Conceptual Data Model

Creating a conceptual data model involves several steps:

- Identify Entities: List the key entities in your domain that will be included in the database. Think about what objects are of primary importance and require storage and retrieval.

- Define Attributes: Determine the attributes of each entity relevant to your data model's scope. Focus on the main properties of each entity without diving into specifics such as data types or constraints.

- Establish Relationships: Analyze the connections between entities and define the existing relationships, ensuring that the proposed relationships make sense from a business perspective.

- Review and Refine: Review the initial conceptual model, looking for inconsistencies, redundancies, and missing information. Update the model as necessary to improve its accuracy and completeness.
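The steps above can be sketched in code to make the "Review and Refine" step concrete. This is a minimal illustration, not a standard tool. The `Entity` and `Relationship` classes, the `review` function, and the sample customer/order/product model are all hypothetical. The checks flag duplicate entities, relationships that point at entities nobody has defined, and entities with no attributes.

```python
from dataclasses import dataclass


@dataclass
class Entity:
    name: str
    attributes: tuple = ()  # high-level properties only, no data types yet


@dataclass
class Relationship:
    name: str
    source: str
    target: str
    cardinality: str  # informal notation, e.g. "1:N" or "N:M"


def review(entities, relationships):
    """Return a list of problems found in a (hypothetical) conceptual model."""
    problems = []
    names = [e.name for e in entities]
    # Duplicate entity names usually signal redundancy.
    for name in sorted(set(names)):
        if names.count(name) > 1:
            problems.append(f"duplicate entity: {name}")
    known = set(names)
    # Every relationship must connect entities that exist in the model.
    for rel in relationships:
        for end in (rel.source, rel.target):
            if end not in known:
                problems.append(f"{rel.name} references unknown entity: {end}")
    # An entity with no attributes is usually missing information.
    for e in entities:
        if not e.attributes:
            problems.append(f"entity without attributes: {e.name}")
    return problems


entities = [
    Entity("Customer", ("name", "email")),
    Entity("Order", ("order date",)),
    Entity("Product", ("name", "price")),
]
relationships = [
    Relationship("places", "Customer", "Order", "1:N"),
    Relationship("contains", "Order", "Product", "N:M"),
    Relationship("belongs to", "Product", "Category", "N:1"),
]

for problem in review(entities, relationships):
    print(problem)
```

Here the review reports that "belongs to" references a `Category` entity that was never defined. Adding `Entity("Category", ("name",))` resolves the problem, which is exactly the kind of refinement this step is meant to trigger.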

At the end of the conceptual data modeling process, you will have a clear, high-level representation of your data, which serves as the foundation for the next stage of the process: logical data modeling.

Logical Data Modeling

Logical data modeling refines and extends the conceptual data model by adding more details about attributes, data types, and relationships. It is a more detailed representation of the data model that is independent of a specific database management system (DBMS) or technology. The primary goal of logical data modeling is to accurately define the structure and relationships between entities, while still maintaining a degree of abstraction from the actual implementation.

Components of Logical Data Modeling

The critical components of logical data modeling are:

- Entities, Attributes, and Relationships: These components retain their original meaning and purpose from the conceptual data model.

- Data Types: Assign specific data types to each attribute, defining the kind of information it can store, such as integers, strings, or dates.

- Constraints: Define rules or restrictions that must be met by the data stored in the attributes, such as uniqueness, referential integrity, or domain constraints.
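To illustrate how data types and constraints add precision to a logical model while staying independent of any DBMS, the sketch below describes an order entity with Python types and checks sample records against it. The `Attribute` class, the `validate` function, and the order attributes are hypothetical. Referential integrity is left out here because it is typically enforced later, at the physical level.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Attribute:
    name: str
    dtype: type           # logical type, e.g. int, str, date
    nullable: bool = False
    unique: bool = False
    allowed: tuple = ()   # optional domain constraint (permitted values)


def validate(rows, attributes):
    """Check dict records against the logical attribute definitions."""
    errors = []
    seen = {a.name: set() for a in attributes if a.unique}
    for i, row in enumerate(rows):
        for a in attributes:
            value = row.get(a.name)
            if value is None:
                if not a.nullable:
                    errors.append(f"row {i}: {a.name} is required")
                continue
            if not isinstance(value, a.dtype):
                errors.append(f"row {i}: {a.name} must be {a.dtype.__name__}")
                continue
            if a.allowed and value not in a.allowed:
                errors.append(f"row {i}: {a.name} not in {a.allowed}")
            if a.unique:
                if value in seen[a.name]:
                    errors.append(f"row {i}: duplicate {a.name}")
                seen[a.name].add(value)
    return errors


order_attributes = [
    Attribute("order_id", int, unique=True),
    Attribute("order_date", date),
    Attribute("status", str, allowed=("new", "paid", "shipped")),
    Attribute("note", str, nullable=True),
]

rows = [
    {"order_id": 1, "order_date": date(2023, 5, 1), "status": "new"},
    {"order_id": 1, "order_date": date(2023, 5, 2), "status": "lost"},
]
print(validate(rows, order_attributes))
```

The second record breaks two constraints: it repeats a unique `order_id`, and its `status` falls outside the permitted domain.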

Creating a Logical Data Model

Creating a logical data model involves several steps:

- Refine Entities, Attributes, and Relationships: Review and update the components carried over from the conceptual data model, ensuring that they accurately represent the intended business requirements. Look for opportunities to make the model more efficient, such as identifying reusable entities or attributes.

- Define Data Types and Constraints: Assign appropriate data types to each attribute and specify any constraints that must be applied to ensure data consistency and integrity.

- Normalize the Logical Data Model: Apply normalization techniques to eliminate redundancy and the update anomalies it causes. Ensure that each entity and its attributes satisfy the relevant normal forms (1NF, 2NF, 3NF, etc.).

After completing the logical data modeling process, the resulting model is ready for the final stage of physical data modeling.

Physical Data Modeling

Physical data modeling is the final step in the data modeling process, where the logical data model is translated into an actual implementation using a specific database management system (DBMS) and technology. It is the most detailed representation of the data model, containing all the necessary information for creating and managing database objects, such as tables, indexes, views, and constraints.

Components of Physical Data Modeling

The key components of physical data modeling include:

- Tables: Represent the actual storage structures for the entities in the data model, with each row in the table corresponding to an entity instance.

- Columns: Correspond to the attributes in the logical data model, specifying the data type, constraints, and other database-specific properties for each attribute.

- Indexes: Define additional structures that improve the speed and efficiency of data retrieval operations on tables.

- Foreign Keys and Constraints: Represent the relationships between tables, ensuring that referential integrity is maintained at the database level.
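The following sketch shows these components together and demonstrates how a foreign key enforces referential integrity at the database level. SQLite, through Python's built-in `sqlite3` module, stands in for the chosen RDBMS. The `department` and `employee` tables and the `try_insert` helper are hypothetical. Note that SQLite enforces foreign keys only after `PRAGMA foreign_keys = ON` is issued on the connection.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# SQLite checks foreign keys only when this pragma is enabled (per connection).
conn.execute("PRAGMA foreign_keys = ON")
conn.executescript("""
CREATE TABLE department (
    department_id INTEGER PRIMARY KEY,      -- table + primary key
    name          TEXT NOT NULL UNIQUE      -- column with constraints
);
CREATE TABLE employee (
    employee_id   INTEGER PRIMARY KEY,
    full_name     TEXT NOT NULL,
    department_id INTEGER NOT NULL REFERENCES department(department_id)  -- foreign key
);
-- Index on the foreign key column to speed up joins and lookups.
CREATE INDEX idx_employee_department ON employee(department_id);
""")
conn.execute("INSERT INTO department VALUES (1, 'Engineering')")
conn.execute("INSERT INTO employee VALUES (10, 'Ada', 1)")


def try_insert(sql):
    """Run an INSERT and report whether the database accepted it."""
    try:
        conn.execute(sql)
        return "ok"
    except sqlite3.IntegrityError as exc:
        return f"rejected: {exc}"


# Department 99 does not exist, so the foreign key rejects this row.
result = try_insert("INSERT INTO employee VALUES (11, 'Bob', 99)")
print(result)
```

Because the rule lives in the schema, every application that writes to the database gets the same protection, rather than relying on each client to check the department first.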

Creating a Physical Data Model

Creating a physical data model involves multiple steps:

- Choose a DBMS: Select a specific database management system (such as PostgreSQL, MySQL, or SQL Server) on which the physical data model will be implemented. This choice will determine the model's available features, data types, and constraints.

- Map Logical Entities to Tables: Create a table in the chosen DBMS for each entity in the logical data model, with the entity's attributes as the table's columns.

- Define Indexes and Constraints: Create any necessary indexes to optimize query performance and define foreign key constraints to enforce referential integrity between related tables.

- Generate Database Objects: Use a data modeling tool or manually write SQL scripts to create the actual database objects, such as tables, indexes, and constraints, based on the physical data model.
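The mapping and generation steps above can be approximated with a small script that turns a logical model into DDL for one target DBMS. This is a hypothetical sketch, not how any particular modeling tool works. The dictionary layout of `logical_model`, the `TYPE_MAP` from logical to SQLite types, and the rule "index every foreign key column" are illustrative assumptions. The table is named `orders` because `ORDER` is a reserved word in SQL.

```python
import sqlite3

# Hypothetical mapping from logical data types to SQLite column types.
TYPE_MAP = {"integer": "INTEGER", "string": "TEXT", "decimal": "NUMERIC", "date": "TEXT"}

logical_model = {
    "customer": {
        "primary_key": "customer_id",
        "attributes": {"customer_id": "integer", "name": "string", "email": "string"},
        "unique": ["email"],
        "references": {},
    },
    "orders": {
        "primary_key": "order_id",
        "attributes": {"order_id": "integer", "customer_id": "integer", "order_date": "date"},
        "unique": [],
        "references": {"customer_id": "customer"},  # column -> referenced table
    },
}


def generate_ddl(model):
    """Map each logical entity to a CREATE TABLE statement plus FK indexes."""
    statements = []
    for table, spec in model.items():
        columns = []
        for name, logical_type in spec["attributes"].items():
            col = f"{name} {TYPE_MAP[logical_type]}"
            col += " PRIMARY KEY" if name == spec["primary_key"] else " NOT NULL"
            if name in spec["unique"]:
                col += " UNIQUE"
            if name in spec["references"]:
                target = spec["references"][name]
                col += f" REFERENCES {target}({model[target]['primary_key']})"
            columns.append(col)
        statements.append(f"CREATE TABLE {table} (\n  " + ",\n  ".join(columns) + "\n);")
        # Index every foreign key column to speed up joins.
        for name in spec["references"]:
            statements.append(f"CREATE INDEX idx_{table}_{name} ON {table}({name});")
    return "\n".join(statements)


ddl = generate_ddl(logical_model)
print(ddl)

# Apply the generated script to an empty database to confirm it is valid.
conn = sqlite3.connect(":memory:")
conn.executescript(ddl)
tables = [r[0] for r in conn.execute(
    "SELECT name FROM sqlite_master WHERE type = 'table' ORDER BY name")]
```

Swapping `TYPE_MAP` is where the choice of DBMS shows up. A PostgreSQL target, for example, would map `date` to a native `DATE` type instead of SQLite's `TEXT`.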

The physical data model produced in this final stage is not only a crucial document for the development and maintenance of the database but also serves as an important reference for other stakeholders, including business analysts, developers, and system administrators.

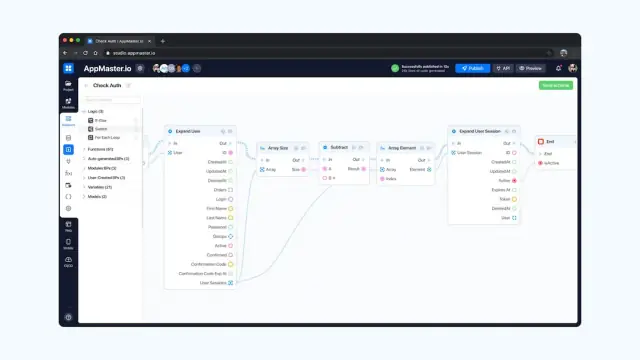

AppMaster, a powerful no-code platform, facilitates the transition from data modeling to implementation. Users visually create data models for backend applications, design database schemas, build business logic with visually designed Business Processes, and define REST API and WSS Endpoints. AppMaster generates source code for the applications, allowing for quick implementation, seamless integration, and easy maintenance of your data models. Harness the power of AppMaster to simplify data modeling and turn your concepts into fully functional applications.

Normalization Techniques

Normalization is a systematic approach used in relational database design to organize data, reduce redundancy, and ensure data consistency. By storing each fact in one place, it prevents insertion, update, and deletion anomalies and makes the schema easier to maintain. The process involves decomposing a table into smaller, related tables while establishing proper relationships between them. Several normal forms (1NF, 2NF, 3NF, BCNF, 4NF, 5NF) serve as guidelines for achieving progressively stricter levels of normalization.

First Normal Form (1NF)

The first step in normalization is achieving the First Normal Form (1NF), which enforces the following rules:

- Each table cell should contain a single value.

- All entries in a column must be of the same data type.

- Columns must have unique names.

- The order in which data is stored does not matter.

By adhering to 1NF, the database eliminates repeating groups and simplifies the table's structure.
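A small example makes the "single value per cell" rule concrete. In the hypothetical data below, a `phones` cell holds a comma-separated list, which violates 1NF. The sketch moves each phone number into its own row of a separate table keyed by `customer_id`. The table names and the comma-separated format are assumptions for illustration.

```python
# Not in 1NF: the "phones" cell holds several values in one string.
unnormalized = [
    {"customer_id": 1, "name": "Alice", "phones": "555-0100, 555-0101"},
    {"customer_id": 2, "name": "Bob", "phones": "555-0200"},
]


def to_first_normal_form(rows):
    """Split the multi-valued phones cell into one row per phone number."""
    customers = []
    customer_phones = []
    for row in rows:
        customers.append({"customer_id": row["customer_id"], "name": row["name"]})
        for phone in row["phones"].split(","):
            customer_phones.append({"customer_id": row["customer_id"], "phone": phone.strip()})
    return customers, customer_phones


customers, customer_phones = to_first_normal_form(unnormalized)
for row in customer_phones:
    print(row)
```

After the split, each cell holds exactly one value, and finding "all customers with phone 555-0101" becomes a simple equality match instead of substring parsing.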

Second Normal Form (2NF)

Second Normal Form (2NF) aims to remove partial dependencies. A table is in 2NF if:

- It is in 1NF.

- All non-key attributes are fully functionally dependent on the entire primary key, not on just part of a composite key.

By achieving 2NF, the database ensures that every non-key attribute in a table describes the entire primary key, thus removing partial dependencies and reducing redundancy.
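As an illustration, consider a hypothetical `order_items` table whose primary key is the composite `(order_id, product_id)`. The `product_name` column depends on `product_id` alone, which is a partial dependency. The sketch includes a helper, `fd_holds`, that checks whether sample rows contradict a dependency, and then performs the 2NF decomposition. Keep in mind that sample data can only refute a dependency. Real dependencies come from business rules.

```python
# Hypothetical order_items table with composite key (order_id, product_id).
# Columns: order_id, product_id, product_name, quantity
order_items = [
    (100, "P1", "Keyboard", 2),
    (100, "P2", "Mouse", 1),
    (101, "P1", "Keyboard", 5),
]


def fd_holds(rows, lhs, rhs):
    """Return False if the rows violate lhs -> rhs (given as column positions).

    True only means the sample does not contradict the dependency.
    """
    seen = {}
    for row in rows:
        key = tuple(row[i] for i in lhs)
        value = tuple(row[i] for i in rhs)
        if seen.setdefault(key, value) != value:
            return False
    return True


# product_name depends on product_id alone: a partial dependency on the key.
partial = fd_holds(order_items, lhs=[1], rhs=[2])

# 2NF decomposition: product facts move to their own table,
# so "Keyboard" is stored once instead of once per order.
products = sorted({(pid, name) for _, pid, name, _ in order_items})
order_lines = [(oid, pid, qty) for oid, pid, _, qty in order_items]
print(products)
print(order_lines)
```

After the split, renaming a product means updating a single row in `products` rather than every order line that mentions it.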

Third Normal Form (3NF)

Third Normal Form (3NF) eliminates transitive dependencies. A table is in 3NF if:

- It is in 2NF.

- No non-key attribute depends on another non-key attribute; that is, no non-key attribute is transitively dependent on the primary key.

By adhering to 3NF, the database design eliminates transitive dependencies and further reduces redundancy and inconsistencies.
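The following sketch uses SQLite to show a 3NF decomposition. In the hypothetical `employee_flat` table, `employee_id` determines `department_id`, which in turn determines `department_name`, so the department name depends on the key only transitively. Moving department facts to their own table removes the duplication, and a join reconstructs the original rows. `CREATE TABLE ... AS SELECT` does not copy constraints, so a real schema would also declare the primary and foreign keys explicitly.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
-- In 2NF but not 3NF: department_name depends on department_id,
-- not directly on the key employee_id.
CREATE TABLE employee_flat (
    employee_id     INTEGER PRIMARY KEY,
    full_name       TEXT NOT NULL,
    department_id   INTEGER NOT NULL,
    department_name TEXT NOT NULL
);
INSERT INTO employee_flat VALUES
    (1, 'Ada',   10, 'Engineering'),
    (2, 'Grace', 10, 'Engineering'),
    (3, 'Linus', 20, 'Operations');

-- 3NF decomposition: the transitively dependent attribute gets its own table.
CREATE TABLE department AS
    SELECT DISTINCT department_id, department_name FROM employee_flat;
CREATE TABLE employee AS
    SELECT employee_id, full_name, department_id FROM employee_flat;
""")

department_rows = conn.execute("SELECT COUNT(*) FROM department").fetchone()[0]

# Joining the decomposed tables reproduces the original data exactly.
rejoined = conn.execute("""
    SELECT e.employee_id, e.full_name, e.department_id, d.department_name
    FROM employee e JOIN department d USING (department_id)
    ORDER BY e.employee_id
""").fetchall()
original = conn.execute("SELECT * FROM employee_flat ORDER BY employee_id").fetchall()
print(department_rows, rejoined == original)
```

"Engineering" is now stored once, so renaming the department can no longer leave some employees with the old name.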

Boyce-Codd Normal Form (BCNF)

Boyce-Codd Normal Form (BCNF) is a stronger version of 3NF that addresses certain anomalies that may not be covered by 3NF. A table is in BCNF if:

- It is in 3NF.

- For every non-trivial functional dependency, the determinant is a superkey.

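BCNF further refines the data model by requiring every determinant to be a superkey. This removes the redundancy and anomalies that 3NF still permits when a set of attributes that is not a superkey determines part of a candidate key.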
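The BCNF condition can be checked mechanically by computing attribute closures. If the closure of a determinant does not cover every attribute, that determinant is not a superkey. The sketch below uses the textbook student/course/instructor schema, with assumed rules: a student takes each course from one instructor, and each instructor teaches a single course. That schema is in 3NF, because `course` belongs to the candidate key `{student, course}`, but it violates BCNF. The `closure` and `bcnf_violations` functions are illustrative helpers.

```python
def closure(attrs, fds):
    """Compute the set of attributes functionally determined by attrs."""
    result = set(attrs)
    changed = True
    while changed:
        changed = False
        for lhs, rhs in fds:
            # If the determinant is covered, everything it determines is too.
            if lhs <= result and not rhs <= result:
                result |= rhs
                changed = True
    return result


def bcnf_violations(schema, fds):
    """Return the non-trivial FDs whose determinant is not a superkey."""
    violations = []
    for lhs, rhs in fds:
        if rhs <= lhs:
            continue  # trivial dependency, always allowed
        if not closure(lhs, fds) >= schema:
            violations.append((lhs, rhs))
    return violations


schema = {"student", "course", "instructor"}
fds = [
    (frozenset({"student", "course"}), frozenset({"instructor"})),
    (frozenset({"instructor"}), frozenset({"course"})),
]
violations = bcnf_violations(schema, fds)
print(violations)
```

The check flags `instructor -> course`. The usual BCNF fix is to decompose into `(instructor, course)` and `(student, instructor)`, so each instructor's course is recorded only once.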

Fourth Normal Form (4NF)

Fourth Normal Form (4NF) deals with multi-valued dependencies. A table is in 4NF if:

- It is in BCNF.

- There are no non-trivial multi-valued dependencies other than those whose determinant is a superkey.

By adhering to 4NF, the database design eliminates redundant information resulting from multi-valued dependencies, thus improving the efficiency of the database.
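A multi-valued dependency appears when two independent facts about the same entity share one table. In the hypothetical example below, an employee's skills and spoken languages are unrelated, yet storing them together forces every skill to be paired with every language. The sketch decomposes the table into two projections and verifies that a natural join on the employee reconstructs the original rows.

```python
# Skills and languages are independent facts about an employee
# (employee ->> skill and employee ->> language).
ada_skills = ["SQL", "Go", "Python"]
ada_languages = ["English", "French"]

# The combined table needs every skill/language combination: 3 x 2 = 6 rows.
rows = {("Ada", skill, lang) for skill in ada_skills for lang in ada_languages}

# 4NF decomposition: one table per independent multi-valued fact.
skills = {(emp, skill) for emp, skill, _ in rows}
languages = {(emp, lang) for emp, _, lang in rows}

# Natural join on employee reconstructs the original table exactly.
rejoined = {
    (emp, skill, lang)
    for emp, skill in skills
    for emp2, lang in languages
    if emp == emp2
}
print(len(rows), len(skills) + len(languages), rejoined == rows)
```

The decomposed form stores 5 rows instead of 6, and the gap widens as lists grow. More importantly, adding a new language now takes one insert instead of one per skill.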

Fifth Normal Form (5NF)

Fifth Normal Form (5NF) deals with join dependencies. A table is in 5NF if:

- It is in 4NF.

- Every non-trivial join dependency in the table is implied by its candidate keys.

By achieving 5NF, the database design eliminates redundancy caused by join dependencies, and any decomposition of a table into smaller tables can be reversed by joining them without loss of information.
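A join dependency is easiest to see with the classic supplier/part/project example. The sketch assumes a hypothetical business rule: if a supplier supplies a part, the supplier serves a project, and the project uses that part, then the supplier supplies that part to that project. Under this rule, the three-column table can be replaced by its three two-column projections. Joining only two of them produces a spurious row, while joining all three recovers the original table exactly. The data is illustrative, and a dependency like this must come from the business rule, not from one snapshot of data.

```python
# (supplier, part, project) rows that satisfy the assumed cyclic business rule.
spj = {("S1", "P1", "J2"), ("S1", "P2", "J1"), ("S2", "P1", "J1"), ("S1", "P1", "J1")}

# 5NF decomposition: the three binary projections.
sp = {(s, p) for s, p, _ in spj}   # supplier supplies part
pj = {(p, j) for _, p, j in spj}   # project uses part
js = {(j, s) for s, _, j in spj}   # supplier serves project

# Joining only two projections invents a row that was never in spj.
two_way = {(s, p, j) for s, p in sp for p2, j in pj if p == p2}

# Joining the third projection filters the spurious row out again.
three_way = {(s, p, j) for s, p, j in two_way if (j, s) in js}

print(two_way - spj)      # the spurious tuple
print(three_way == spj)   # lossless three-way join
```

This is why 5NF can only be judged against the business rules: without the assumed rule, splitting the table three ways would lose information instead of removing redundancy.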

Reverse Engineering of Data Models

Reverse engineering is the process of analyzing the existing structure of a database and generating the corresponding data models, typically for documentation or migration purposes. Reverse engineering can help to:

- Automatically generate data models for legacy systems, where original documentation may be lacking or outdated.

- Discover the hidden relationships and dependencies among various data elements in a database.

- Facilitate migration or integration of databases.

- Support documentation and understanding of complex systems.

Various data modeling tools offer reverse engineering capabilities, allowing you to connect to a database, extract the schema, and generate corresponding ER diagrams or other data models. Sometimes, you might need to manually refine the generated data models to accurately represent the underlying business requirements and simplify the database structure.
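As a small-scale sketch of what such tools do under the hood, the script below reads a database's schema back out of its catalog. It uses SQLite's `sqlite_master` table and its `PRAGMA table_info` and `PRAGMA foreign_key_list` statements. Other RDBMSs expose similar metadata through their own catalogs, such as `information_schema`. The `extract_schema` function and the "legacy" `customer`/`invoice` tables are hypothetical. Table names are interpolated into the PRAGMA text, which is acceptable here only because they come from the catalog itself.

```python
import sqlite3


def extract_schema(conn):
    """Return {table: {"columns": [...], "foreign_keys": [...]}} for a SQLite database."""
    schema = {}
    tables = [r[0] for r in conn.execute(
        "SELECT name FROM sqlite_master "
        "WHERE type = 'table' AND name NOT LIKE 'sqlite_%' ORDER BY name")]
    for table in tables:
        # table_info rows: (cid, name, type, notnull, dflt_value, pk)
        columns = [
            {"name": name, "type": col_type, "not_null": bool(notnull), "pk": bool(pk)}
            for _, name, col_type, notnull, _, pk in conn.execute(f"PRAGMA table_info({table})")
        ]
        # foreign_key_list rows: (id, seq, table, from, to, on_update, on_delete, match)
        foreign_keys = [
            {"column": row[3], "references": f"{row[2]}.{row[4]}"}
            for row in conn.execute(f"PRAGMA foreign_key_list({table})")
        ]
        schema[table] = {"columns": columns, "foreign_keys": foreign_keys}
    return schema


# A hypothetical "legacy" database with no documentation.
legacy = sqlite3.connect(":memory:")
legacy.executescript("""
CREATE TABLE customer (id INTEGER PRIMARY KEY, name TEXT NOT NULL);
CREATE TABLE invoice (
    id          INTEGER PRIMARY KEY,
    customer_id INTEGER REFERENCES customer(id),
    total       NUMERIC
);
""")

schema = extract_schema(legacy)
for table, info in schema.items():
    for fk in info["foreign_keys"]:
        print(f"{table}.{fk['column']} -> {fk['references']}")
```

The extracted structure is raw material for an ER diagram. It only recovers relationships that were declared as foreign keys, so implicit relationships, such as a column that is joined on but never constrained, still need the manual refinement described above.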

Data Modeling Tools

Data modeling tools offer a visual approach to designing database schemas and help ensure that data is organized and accessed efficiently. Typical features include visual modeling, code generation, version control, and support for multiple database management systems. Some popular data modeling tools include:

ER/Studio

ER/Studio is a data modeling and architecture tool that provides powerful features for designing, documenting, and managing your data structures. It supports several databases, including Oracle, SQL Server, MySQL, and PostgreSQL. Key features include:

- Visual data modeling for conceptual, logical, and physical models.

- Support for team collaboration and version control.

- Forward and reverse engineering capabilities.

- Automated code generation for various programming languages.

PowerDesigner

PowerDesigner is a comprehensive data modeling and enterprise architecture solution for designing and managing data structures across multiple platforms. Key features include:

- Support for multiple databases and modeling techniques, including entity-relationship, UML, XML, and BPMN.

- Forward and reverse engineering capabilities.

- Data movement modeling for tracking and optimizing data flows.

- Impact analysis and change management to manage changes across multiple layers of the IT architecture.

ERwin Data Modeler

ERwin Data Modeler is another widely used data modeling tool, providing features to create, maintain, and manage complex data structures. Key features include:

- Support for different types of databases, such as SQL Server, Oracle, MySQL, and more.

- Visual data modeling for conceptual, logical, and physical data models.

- Automated generation of SQL DDL scripts and other code.

- Forward and reverse engineering capabilities.

- Centralized model management for collaboration, version control, and security.

Choosing the right data modeling tool depends on the specific needs of your project, such as the size and complexity of your data structures, the database management systems you use, and the level of collaboration you require. Be sure to consider these factors when evaluating different tools to make the best decision for your organization.

Implementing Data Models with AppMaster

AppMaster, a powerful no-code platform, simplifies the process of implementing data models for your backend, web, and mobile applications. It allows you to design database schemas, create business logic using visually designed Business Processes, and generate REST API and WSS Endpoints. By leveraging AppMaster for your data modeling needs, you can streamline your application development process and minimize the time and effort required to bring your ideas to life.

Visual Data Modeling

With AppMaster's visual data modeling tools, you can design your data models by dragging and dropping elements onto the canvas. Define entities with their respective attributes, and specify the relationships and constraints between them. AppMaster supports a wide range of data types, allowing you to create complex and sophisticated data models easily.

Backend Applications and Business Processes

Once your data model is in place, AppMaster can generate backend applications in the Go (golang) programming language. These applications are highly efficient and can handle enterprise-scale, high-load use cases. AppMaster's Business Process Designer lets you visually create the business logic associated with your data model. By defining workflows, rules, and actions using the drag-and-drop interface, you can quickly develop your application's core functionality without the need for manual coding.

REST API and WSS Endpoints

AppMaster automatically generates REST API and WSS Endpoints for your data models, enabling smooth communication between your applications and the database. These endpoints follow the OpenAPI specification, ensuring compatibility with various front-end frameworks and third-party applications. The platform also generates Swagger documentation to help you explore, test, and manage the API efficiently.

Source Code Generation and Deployment

AppMaster generates the source code for your applications, providing you with a solid foundation to build upon. With the Enterprise subscription, you can access the complete source code of your applications and deploy them on-premises. The generated applications use the Vue3 framework for web applications, Kotlin with Jetpack Compose for Android, and SwiftUI for iOS, ensuring high performance and compatibility.

Eliminating Technical Debt

One of the unique advantages of using AppMaster is the elimination of technical debt. AppMaster regenerates applications from scratch every time changes are made to the blueprints. This approach ensures that your applications are always up-to-date with the latest design principles and best practices, significantly reducing the complexity and cost of maintaining your applications in the long run.

Conclusion

Data modeling in RDBMS is a crucial component of the application development process. Understanding the different types of data models and the techniques and methodologies involved in their creation and implementation can lead to a more efficient and effective database design process. With AppMaster's intuitive no-code platform, you can visually design and deploy data models, backend, web, and mobile applications, enabling rapid application development, reduced maintenance costs, and the elimination of technical debt. By leveraging the power of AppMaster, developers and businesses can bring their ideas to life more quickly and efficiently, resulting in a competitive advantage in today's tech industry.

FAQ

Data modeling is the process of creating a visual representation of an organization's data and how it is structured, stored and accessed. It helps in understanding the relationships between different data entities and the rules governing their use.

There are three main types of data models: conceptual, logical, and physical. The conceptual model represents the high-level view of the entities, their attributes, and their relationships. The logical model refines the conceptual model by adding data types, constraints, and a normalized structure. The physical model specifies the actual implementation details in a specific database management system (DBMS).

Conceptual data modeling is the process of creating a high-level, abstract representation of an organization's data. It involves identifying the key data entities, their attributes, and the relationships between them. This model serves as a foundation for logical and physical data modeling, ensures a clear understanding of the business requirements, and guides the database design process.

Normalization techniques are used in database design to reduce data redundancy and improve the efficiency and consistency of data storage. The process involves organizing data into tables, eliminating duplicate information, and establishing relationships between the tables. Several normal forms (1NF, 2NF, 3NF, etc.) are used as guidelines to achieve different levels of normalization.

Some popular data modeling tools include ER/Studio, PowerDesigner, and ERwin Data Modeler. These tools offer features like visual data modeling, code generation, and support for a wide range of database management systems.

AppMaster, a powerful no-code platform, allows users to visually create data models for backend applications. Users can design database schemas, build business logic with visually designed Business Processes, and define REST API and WSS Endpoints. AppMaster generates source code for applications, enabling quick implementation and easy maintenance of data models.