ChatGPT's Rise Spurs Debates on Plagiarism, Hallucination, and Transparency in AI

As OpenAI's ChatGPT gains traction, new tools such as GPTZero and Got It AI's truth-checker are being developed to counteract plagiarism and hallucination.

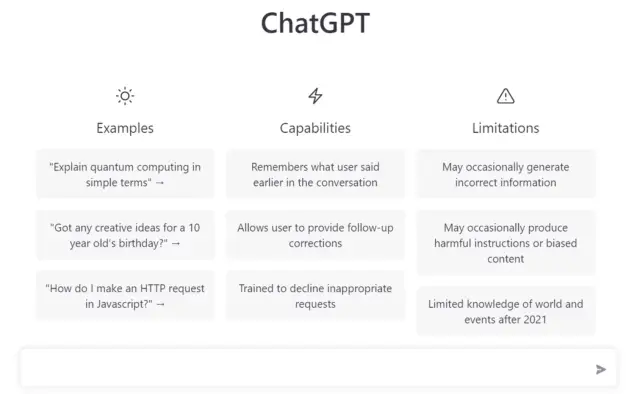

In just seven weeks, OpenAI's ChatGPT has risen into the AI spotlight, intensifying debate over the technology's potential to enable plagiarism, its tendency to hallucinate, and the consequences for academia. An AI language model adept at generating highly persuasive text, ChatGPT can rap, rhyme, solve complex math problems, and even write computer code.

Stephen Marche's article, "The College Essay Is Dead," underscores how unprepared academia is for the way AI will transform education. Even so, public school districts in Seattle and New York City have already taken initial steps against the plagiarism threat posed by ChatGPT, and new tools are making it possible to detect the use of generative AI.

Over the New Year, Princeton University computer science major Edward Tian developed GPTZero, an app designed to detect whether a given piece of text was written by ChatGPT or by a human. GPTZero analyzes two characteristics of text: perplexity and burstiness. Tian found that ChatGPT generates text that is less complex and more uniform in length than human writing.
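To make the two measures concrete, here is a minimal Python sketch. It is not GPTZero's implementation, which the article does not describe. It assumes perplexity is computed from per-token probabilities supplied by some language model, and it uses the spread of sentence lengths as a simple stand-in for burstiness. The function names and the sentence-splitting rule are illustrative assumptions.

```python
import math
import re
import statistics


def perplexity(token_probs):
    """Perplexity from the probability a language model gave each token.

    Assumption: token_probs comes from some external model; lower
    perplexity means the text was more predictable to that model.
    """
    if not token_probs:
        raise ValueError("need at least one token probability")
    avg_neg_log = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(avg_neg_log)


def sentence_lengths(text):
    """Word count of each sentence, splitting naively on ., ! and ?."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    return [len(s.split()) for s in sentences]


def burstiness(text):
    """Illustrative proxy: population standard deviation of sentence lengths.

    Uniform sentence lengths give a value near 0; varied lengths give
    a larger value.
    """
    lengths = sentence_lengths(text)
    if len(lengths) < 2:
        return 0.0
    return statistics.pstdev(lengths)


if __name__ == "__main__":
    uniform = "One two three. Four five six."
    varied = "Hi. This is a much longer sentence here."
    print(burstiness(uniform), burstiness(varied))
```

Under these assumptions, text with low perplexity and low burstiness would look more machine-like, which matches the pattern Tian reported. A real detector would also need a calibrated threshold.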

While not perfect, GPTZero shows promising results. Tian is in discussions with school boards and scholarship funds to supply GPTZeroX to 300,000 schools and funding bodies. Alongside GPTZero, developers are creating tools to combat hallucinations, another issue arising from ChatGPT's prominence.

Peter Relan of Got It AI, a company specializing in custom conversational AI solutions, notes that large language models like ChatGPT will inevitably produce hallucinations. ChatGPT's hallucination rate currently stands at 15% to 20%, but detecting when the model is hallucinating makes it possible to deliver more accurate responses.

Last week, Got It AI announced a private preview of a new truth-checking component for Autonomous Articlebot, which uses a large language model trained to identify untruths told by ChatGPT and other models. The truth-checker currently has an accuracy rate of 90%, which effectively raises ChatGPT's overall accuracy rate to 98%.
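The article does not spell out how the 98% figure is derived. One reading that reproduces it: the truth-checker catches 90% of hallucinated responses, so only the remaining 10% get through. The sketch below works through that arithmetic. It assumes every caught hallucination is corrected or withheld and ignores false positives. The function name is hypothetical.

```python
def combined_accuracy(hallucination_rate, detection_rate):
    """Overall accuracy once a checker filters out detected hallucinations.

    Assumptions (not stated in the article): detected hallucinations are
    corrected or withheld, and correct answers are never wrongly flagged.
    """
    undetected = hallucination_rate * (1 - detection_rate)
    return 1 - undetected


if __name__ == "__main__":
    # Upper and lower ends of the 15% to 20% hallucination range.
    for rate in (0.20, 0.15):
        print(f"{rate:.0%} hallucination -> {combined_accuracy(rate, 0.90):.1%} accurate")
```

At a 20% hallucination rate this gives 1 - 0.20 × 0.10 = 98%, and at 15% it gives 98.5%, so the quoted figure corresponds to the upper end of the range under this reading.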

While eliminating hallucinations from conversational AI systems entirely is unrealistic, reducing how often they occur is feasible. OpenAI, the maker of ChatGPT, has not yet released an API for the large language model. However, the underlying model, GPT-3, has an API available. Got It AI's truth-checker can be used with the latest GPT-3 release, davinci-003.

Relan believes OpenAI will address the core platform's tendency to hallucinate, and a notable reduction in ChatGPT's error rate has already been seen. The overarching challenge for OpenAI is to keep lowering the model's hallucination rate over time.

With tools like GPTZero and Got It AI's truth-checker, the risks of AI-enabled plagiarism and hallucination may be mitigated. As AI transparency and accuracy improve, platforms such as AppMaster continue to help accelerate the development of backend, web, and mobile applications for a wide range of users, from small businesses to enterprises.