Database Design Basics

Learn about data modeling, normalization, relationships, and more to create efficient databases.

Database design is the process of creating a structured plan for organizing, storing, and managing data to ensure data integrity, consistency, and efficiency. A well-designed database reduces data redundancy, promotes reusability, and simplifies data management. Designing a good database involves using best practices and techniques, such as data modeling, normalization, and entity relationship modeling.

High-quality database design is crucial for businesses and organizations that want to utilize their data effectively, improve decision-making, reduce costs, and increase efficiency. Following this article's principles and best practices, you will be better equipped to create well-organized, and efficient databases.

The Data Modeling Process

Data modeling is creating a graphical representation of the database's structure, defining entities, attributes, and relationships to represent real-world scenarios accurately. The data model serves as a blueprint for the database's physical and logical design. The process typically involves the following steps:

- Requirement Analysis: Identifying and gathering stakeholder requirements and understanding the system's purpose and goals.

- Conceptual Data Model: A high-level model that represents the main entities, attributes, and relationships without addressing the details of the database structure. This technology-agnostic model focuses on the structure of the data to be stored.

- Logical Data Model: A detailed model that further expands on the conceptual data model, specifying all required entities, attributes, relationships, and constraints in a structured format. This model paves the way for the database's physical design.

- Implementing the Physical Data Model: Using the logical data model as a guide, the database is created and populated with data by defining tables, indexes, and other database objects.

By following these steps, you can create a solid foundation for your database and ensure that it accurately reflects the needs and requirements of your organization.

Types of Database Models

There are several database models, each with advantages and disadvantages. Understanding the different models can help you select the best-suited architecture for your database. Here are some of the most widely used database models:

Relational Database Model

Developed in the early 1970s, the relational database model is the most commonly used model today. In this model, data is stored in tables with rows and columns, representing records and attributes respectively. The tables are related to each other through primary and foreign keys, which establish relationships between records in different tables. The relational model's primary advantages are its flexibility, ease of use, and straightforward implementation. Standard Query Language (SQL) is typically used to manage, maintain, and query relational databases, making it easy to learn and versatile. Examples of relational database management systems (RDBMS) include Oracle, MySQL, MS SQL Server, and PostgreSQL.

Hierarchical Database Model

The hierarchical database model represents data in a tree-like structure, with nodes establishing parent-child relationships. In this model, each child node has only one parent, while parent nodes can have multiple children. The model is typically used for simple database designs where data has a clear hierarchical relationship. Still, the hierarchical model can become cumbersome and inflexible when complex relationships exist between entities, making it unsuitable for databases with multiple many-to-many relationships. Examples of hierarchical database management systems include IBM's Information Management System (IMS) and Windows Registry.

Network Database Model

The network database model was created in response to the hierarchical model's limitations, allowing more complex relationships between records than the hierarchical model. In this model, records (called nodes) are connected to other nodes via pointers, establishing relationships using a set-oriented approach. This model's flexibility allows it to represent complex relationships and multiple record types, making it suitable for various applications. But the network model requires more complex database management and may pose a steeper learning curve. An example of a network database management system is Integrated Data Store (IDS).

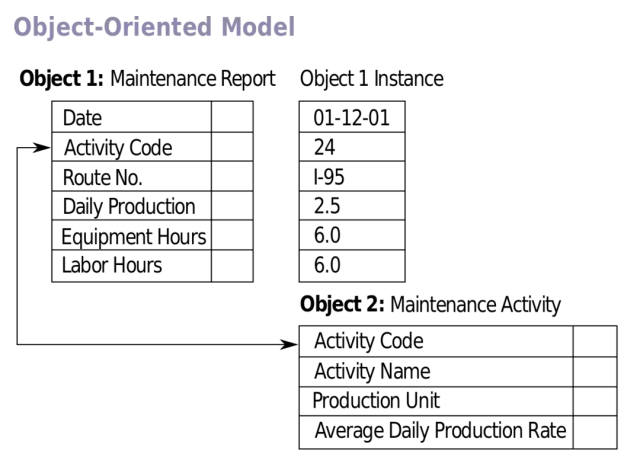

Object-Oriented Database Model

The object-oriented database model is a relatively newer model that stores data in the form of objects, which can have attributes and methods, similar to object-oriented programming. In this model, data can be represented as complex data types, such as images and multimedia, making it suitable for modern applications. The primary advantage of the object-oriented model is its compatibility with object-oriented programming languages, allowing developers to work more seamlessly with databases. Still, the model's complexity and the need for specialized object-oriented database management systems can be challenging.

Examples of object-oriented database management systems include ObjectStore and ObjectDB.

Image Source: Wikipedia

Understanding the various database models available is essential to selecting the appropriate model for your specific use case and requirements. The relational database model remains the most popular choice due to its flexibility and widespread support, but alternative models may be better for specific requirements. It is crucial to assess your database needs and consider the trade-offs associated with each model before deciding.

Normalization: Getting Rid of Redundancies

Normalization is a crucial step in the process of database design. Its primary goal is to organize the data efficiently and eliminate redundancies by distributing data across multiple tables. This process ensures that the relationships between these tables are properly defined, and the database maintains consistency and integrity throughout its lifetime. Normalization follows a series of progressive normal forms (1NF, 2NF, 3NF, BCNF, 4NF, and 5NF), which serve as guidelines for organizing data in a relational database. Each normal form builds upon the previous, adding new requirements and advancing the database's organization.

First Normal Form (1NF)

The first normal form requires that each attribute (column) of a table contains only atomic values, and each entry (row) is unique. In other words, a table should have no repeating groups or multi-valued fields. By ensuring the atomicity of values, you eliminate duplicate data and ensure each attribute represents a single fact about the entity.

Second Normal Form (2NF)

A table is considered to be in the second normal form once it meets the criteria for 1NF and each non-primary key attribute is fully functionally dependent on the primary key. In this stage, partial dependencies are removed by decomposing tables into multiple related tables. This ensures that each non-primary attribute depends only on the primary key and not on any other non-key attribute.

Third Normal Form (3NF)

For a table to be in the third normal form, it must first satisfy 2NF. In addition, 3NF requires that there are no transitive dependencies for non-primary key attributes. This means that non-key attributes must not depend on other non-key attributes, either directly or indirectly. To achieve 3NF, you may need to further decompose your tables to remove these dependencies.

Boyce-Codd Normal Form (BCNF)

Boyce-Codd Normal Form is a stronger version of the Third Normal Form. A table is considered to be in BCNF if, for every functional dependency X → Y, X is a superkey. In simpler terms, BCNF states that every determinant must be a candidate key. While 3NF can still allow some anomalies, BCNF further strengthens the requirements to ensure more data integrity.

Fourth Normal Form (4NF) and Fifth Normal Form (5NF)

These two advanced normal forms are seldom used in practice; nevertheless, they exist to address specific multi-valued dependencies and join dependencies that may still exist in the database schema. They help further refine the data organization, but their implementation is quite complex and may not be necessary for most database systems.

Remember that normalization is not always about achieving the highest normal form possible. In some cases, due to performance considerations or specific application requirements, some denormalization may be beneficial. Always balance the benefits of normalization with the potential drawbacks, such as increased complexity and join operations.

Entity Relationship Modeling

Entity Relationship Modeling (ERM) is a vital technique in database design. It involves creating a graphical representation of the entities, their attributes, and the relationships between them to represent real-world scenarios accurately. An Entity Relationship Diagram (ERD) is a visual model of the database, which illustrates the structure and connections between the entities and their relationships. Entities represent objects or concepts that have relevance to the system being developed, while attributes are characteristics that describe those entities. Relationships show how entities are interconnected and interact with one another. There are three main components of ERDs:

- Entities: Rectangles represent entities, which are objects or concepts of importance to the system.

- Attributes: Ovals represent attributes, which describe the properties of entities.

- Relationships: Diamonds represent relationships between entities, indicating how they are connected and interact with one another.

To create an Entity Relationship Diagram, you should follow these steps:

- Identify the entities relevant to the system, such as person, product, or order.

- Determine the attributes that describe each entity, such as name, age, or price.

- Define primary keys for each entity to uniquely identify its instances.

- Establish relationships between entities, such as one-to-many, many-to-many, or one-to-one.

- Specify cardinality and optionality constraints for each relationship, indicating the minimum and maximum number of occurrences for each entity in the relationship.

Performing Entity Relationship Modeling helps developers better understand the database structure, which aids in the subsequent processes of database design, such as normalization, table creation, and index management.

Creating Tables: Defining Data Types and Constraints

Once you have modeled your database using Entity Relationship Diagrams and achieved the desired level of normalization, the next step is to transform the ER model into an actual database schema by creating tables, defining data types, and setting constraints.

Creating Tables

For each entity in the ERD, create a table. Then, for each attribute of the entity, create a corresponding column in the table. Ensure you define primary, foreign, and data types for each column to accurately represent the data.

Defining Data Types

Assign a data type to each column based on the type of data it represents. Appropriate data types ensure that the data is stored and managed accurately. Some common data types include:

- Integer: Whole numbers, such as age, quantity, or ID.

- Decimal or Float: Decimal numbers, such as price or weight.

- Char or Varchar: Strings and text, such as names, addresses, or descriptions.

- Date or Time: Date and time values, such as birthdate or order timestamp.

- Boolean: True or false values, representing binary states, such as activated/deactivated.

Setting Constraints

Constraints are rules enforced on columns to maintain data integrity. They ensure that only valid data is entered into the database and prevent situations that could lead to inconsistencies. Some common constraints include:

- Primary Key: Uniquely identifies each row in a table. Cannot contain NULL values, and must be unique across all rows.

- Foreign Key: Refers to the primary key of another table, ensuring referential integrity between related tables.

- Unique: Ensures that each value in the column is unique across all rows, such as usernames or email addresses.

- Check: Validates that the data entered into a column adheres to a specific rule or condition, such as a minimum or maximum range.

- Not Null: Ensures that the column cannot contain NULL values and must have a value for each row.

Considering the complexities of creating and managing database tables, constraints, and data types, utilizing a no-code platform like AppMaster can significantly simplify this process. With AppMaster's visual tools, you can design data models and define database schema more efficiently while maintaining data integrity and consistency.

Database Indexes for Improved Performance

Database indexes are essential for improving the performance of data retrieval operations in a database. By providing a fast access path to the desired data, indexes can significantly reduce the time it takes to query the database. This section will help you understand the concept of database indexes and how to create and maintain them effectively.

What Are Database Indexes?

A database index is a data structure that maintains a sorted list of the values for specific columns in a table. This structure enables the database management system to locate records more efficiently, as it avoids doing full table scans, which can be time-consuming, especially for large datasets. In short, a database index can be compared to the index in a book, which helps you find a specific topic faster without scanning all the pages. But indexes come with trade-offs. While they can considerably improve read operations, they may hurt write operations like insertions, deletions, and updates. This is because the database needs to maintain the index structure every time a change occurs in the indexed columns.

Types of Database Indexes

There are several types of indexes available to optimize database performance. The most common ones include:

- Single-column index: An index created on a single column.

- Composite index: An index built on multiple columns, also known as a concatenated or multi-column index.

- Clustered Index: An index that determines the physical order of data storage in a table. In this case, the table records and the index structure are stored together.

- Non-clustered Index: An index that doesn't affect the physical order of data storage. Instead, it creates a separate data structure that holds a pointer to the actual data row.

To decide which index type suits your specific use-case, consider query performance, disk space, and maintenance factors.

Creating and Optimizing Indexes

To create an index, you must first identify the frequently used columns in queries and analyze the existing query patterns. This helps you define the appropriate indexes to optimize the performance of the database. When creating indexes, consider the following best practices:

- Limit the number of indexes per table to avoid performance issues during write operations.

- Use a composite index on columns frequently used together in a query.

- Choose the appropriate index type based on the specific requirements of your database.

- Regularly monitor and maintain the indexes to ensure optimal performance.

Designing for Scalability and Performance

Database scalability and performance are key aspects to consider during the design process. Scalability refers to the ability of a database system to manage an increased workload and adapt to growing data storage requirements while maintaining optimal performance. This section'll explore various strategies and techniques to design scalable and high-performing databases.

Vertical and Horizontal Scaling

There are two main approaches to scaling a database: vertical scaling and horizontal scaling.

- Vertical scaling: Also known as scaling up, vertical scaling involves increasing the capacity of a single server by adding resources, such as more CPU, memory, or storage. This approach can provide immediate improvement in performance, but it has limitations in terms of maximum server capacity, costs, and potential single points of failure.

- Horizontal scaling: Also known as scaling out, horizontal scaling distributes the workload across multiple servers or partitions, which can work independently or together. This approach enables more exceptional scalability, flexibility and can provide improved fault tolerance.

In general, combining both vertical and horizontal scaling strategies can help you balance performance, scalability, and cost.

Database Sharding

Database sharding is a technique used in horizontal scaling by partitioning the data across multiple servers. Sharding involves splitting a large dataset into smaller subsets called shards, which are distributed across the servers while still maintaining the integrity of the data. There are several sharding strategies, such as range-based sharding, hash-based sharding, and list-based sharding. Choose an appropriate sharding strategy based on the data distribution, consistency requirements, and the type of queries in your application.

Database Caching

Database caching can significantly reduce latency and improve performance by storing frequently accessed data in memory or external caching systems. This way, when a client requests the data, it can be retrieved more quickly from the cache without the need for querying the database. Popular caching solutions include in-memory databases like Redis and distributed caching systems like Memcached. Implementing caching properly can help you improve response times and reduce the load on the backend database.

Monitoring and Optimizing Performance

Regularly monitoring and analyzing the performance of your database is essential for ensuring scalability and optimal performance. Collect performance metrics, identify bottlenecks, and apply optimizations, such as query optimization, adding or removing indexes, adjusting configurations, and updating hardware resources as needed.

Database Security: Ensuring Safe Data Storage

With the growing importance of data protection, database security has become a critical aspect of database design. Ensuring your database is safe from unauthorized access, data breaches, and other security threats is essential. This section'll discuss some best practices to secure your database and protect sensitive data.

Access Control

Implementing proper access control is the first line of defense against unauthorized access to your database. Create user accounts with appropriate permissions and restrict access based on the principle of least privilege, which means granting only the minimum access necessary for each user to perform their tasks. Ensure you have strong password policies and use multi-factor authentication to prevent unauthorized access through compromised credentials.

Data Encryption

Data encryption is a crucial technique for protecting sensitive data, both when stored in the database (at rest) and while being transmitted over the network (in transit). Use strong encryption methods, such as AES, and manage encryption keys securely.

Monitoring and Auditing

Regularly monitoring and auditing database activity helps you detect unauthorized access attempts, policy violations, and potential threats. Implement a logging system to record database events for later review and analysis. Investigate any suspicious activity and take appropriate action to prevent data breaches.

Software Updates

Keeping your database management system (DBMS) and other related software up-to-date is critical for addressing security vulnerabilities and maintaining a secure environment. Regularly apply patches and updates, and follow the recommendations provided by the software vendors.

Backup and Disaster Recovery

Regularly back up your database and have a disaster recovery plan in place to mitigate the risks associated with hardware failures, data corruption, or other catastrophic events. Test your backup and recovery procedures to ensure you can restore the database quickly in an emergency. When designing a database, keeping security at the forefront of your decision-making process is essential to protect sensitive data and maintain the trust of your users.

By implementing the best practices mentioned above, you can create a secure database that can withstand security threats and vulnerabilities.

Understanding the basics of database design, including data modeling, normalization, entity relationship modeling, and creating tables, is essential for creating efficient and effective databases. By focusing on scalability, performance, and security, you can design databases that meet the needs of your application, users, and organization. No-code platforms like AppMaster simplify the database design process by providing an intuitive interface to create data models and define database schemas, empowering developers to focus on solving business problems while ensuring the quality and performance of the underlying database infrastructure.

Conclusion: Database Design Best Practices

Proper database design is crucial for creating efficient, maintainable, and scalable systems that store and manage data effectively. By following best practices in database design, you can ensure that your database will be well-structured, responsive, and secure. Here's a summary of key best practices for database design:

- Clear data modeling: Develop a clear understanding of your data and its relationships by creating a data model that accurately represents the real-world scenario. Use appropriate database modeling techniques such as ER diagrams to visualize and organize the data.

- Choose the right database model: Select the one that best aligns with your application's requirements and optimizes its performance. Relational databases are the most commonly used, but other types like hierarchical, network, or object-oriented databases may be more suitable for specific use cases.

- Normalize your database: Apply normalization principles to eliminate redundancies, reduce anomalies, and maintain data integrity. Normalize your database up to the appropriate normal form based on the specific needs of your application.

- Create meaningful and consistent naming conventions: Employ clear and consistent naming conventions for entities, attributes, and relationships to facilitate better understanding and maintainability of the database.

- Define data types and constraints: Select the appropriate attribute data types and apply necessary constraints to ensure data integrity and consistency.

- Optimize database indexes: Use indexes wisely to speed up data retrieval operations without compromising insert and update performance. Index frequently queried columns or those used in WHERE and JOIN clauses.

- Design for scalability and performance: Plan for future growth and increased workload by creating a database design that supports horizontal and vertical scalability. Optimize the database structure, queries, and indexing strategies for high performance.

- Ensure database security: Safeguard your database by implementing proper access control, encryption, auditing, and monitoring measures. Keep your software up-to-date to protect against known vulnerabilities.

- Leverage no-code and low-code tools: Utilize no-code and low-code platforms like AppMaster to streamline and simplify the database design process. These tools can help you create data models, define database schema, and even generate the necessary code for efficient database operations.

By adhering to these database design best practices and applying the knowledge gained from this article, you can create efficient and secure databases that effectively store and manage your valuable data, contributing to the success of your applications and business projects.

FAQ

Database design is the process of creating a structured plan for organizing, storing, and managing data in a way that ensures data integrity, consistency, and efficiency.

Data modeling is the process of creating a graphical representation of the database structure, defining entities, attributes, and relationships to accurately represent real-world scenarios.

The primary types of database models are relational, hierarchical, network, and object-oriented database models, with relational models being the most commonly used.

Normalization is the process of organizing data in a database to reduce redundancy, eliminate anomalies, and ensure data integrity, following a series of progressive normal forms.

An entity relationship diagram (ERD) is a visual representation of the entities, attributes, and relationships in a database, helping developers understand the structure and connections in the database.

Database indexes are data structures that improve the speed of data retrieval operations by providing a fast access path to the desired data in the database.

Best practices for ensuring database security include proper access control, applying encryption, regular monitoring and auditing, and keeping software up-to-date.

Database scalability refers to the ability of a database system to manage increased workload and adapt to growing data storage requirements while maintaining optimal performance.

No-code platforms like AppMaster simplify database design by allowing users to create data models and define database schema visually, generating the necessary code for efficient and effective database operations.