Working with Databases in Go

Master the essentials of working with databases in Go, including popular libraries, techniques, and best practices for efficient and secure database management.

Introduction to Go and Databases

Go, also known as Golang, is an open-source programming language developed by Google that emphasizes simplicity, efficiency, and strong support for concurrent programming. Go's design philosophy and powerful standard library make it an excellent choice for developing modern web applications, APIs, and microservices that need to interact with databases.

Working with databases in Go presents numerous benefits, such as improved performance, secure connections, and a straightforward approach to managing connections and executing queries. In this article, we will start by covering how to connect Go with PostgreSQL, followed by an overview of the 'database/sql' package, which is Go's standard package for working with databases.

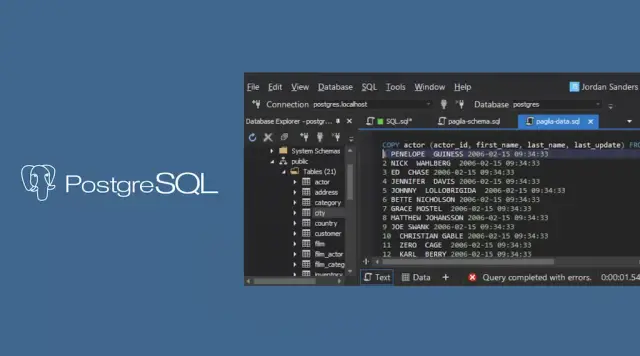

Connecting Go with PostgreSQL

PostgreSQL is a powerful, open-source object-relational database system that offers a wide range of advanced features, such as ACID compliance, support for concurrent transactions, and a highly extensible architecture. Go can connect to PostgreSQL databases easily with the help of a few libraries.

To connect Go with PostgreSQL, you will need to use the built-in 'database/sql' package along with a PostgreSQL driver that handles communication between Go and the database. The 'database/sql' package provides a generic, database-agnostic interface for working with databases, and the PostgreSQL driver handles the specific implementation details required for PostgreSQL.

One popular PostgreSQL driver for Go is 'pq'. To get started, you will need to install the 'pq' driver:

```sh
go get github.com/lib/pq
```

Next, import the 'database/sql' and 'pq' packages into your Go application:

```go
import (
	"database/sql"

	_ "github.com/lib/pq"
)
```

Note that we use the blank identifier (_) to import the 'pq' package to avoid an "unused import" error since we only need it for its side effects (registering the driver with 'database/sql').

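Now you can create a database handle by calling the 'sql.Open' function with the driver name and an appropriate connection string:

```go
db, err := sql.Open("postgres", "user=username password=password host=localhost dbname=mydb sslmode=disable")
if err != nil {
	log.Fatalf("Error: Unable to open database: %v", err)
}
defer db.Close()

// sql.Open does not necessarily connect; Ping checks that the database is reachable.
if err := db.Ping(); err != nil {
	log.Fatalf("Error: Unable to connect to database: %v", err)
}
```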

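In the example above, we pass 'sql.Open' the driver name ("postgres") and a connection string containing the information needed to reach the PostgreSQL server. Replace 'username', 'password', and 'mydb' with the appropriate values for your database. Keep in mind that 'sql.Open' may only validate its arguments and set up a connection pool without contacting the server. An unreachable or misconfigured database is therefore typically first detected by 'db.Ping' or by the first query. The returned '*sql.DB' is safe for concurrent use and is meant to be opened once and shared, not opened per request. The 'sslmode=disable' parameter disables SSL for the connection, which is acceptable only for local development. In production, you should enable SSL/TLS so that traffic between your application and the database is encrypted.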

Using the 'database/sql' Package

'database/sql' is the standard package in Go for working with SQL databases. The package provides a simple, abstract interface to interact with databases, making it easy to write portable and maintainable code. Here's a brief overview of how to perform various tasks using the 'database/sql' package.

Executing Queries

Once you have a database handle, you can execute SQL queries using the 'db.Query' and 'db.QueryRow' methods. The 'db.Query' method returns a '*sql.Rows' value that you iterate over with 'rows.Next' to retrieve the query results:

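```go
rows, err := db.Query("SELECT id, name FROM users")
if err != nil {
	log.Fatalf("Error: Unable to execute query: %v", err)
}
defer rows.Close()

for rows.Next() {
	var id int64
	var name string
	// Scan copies the current row's columns into the variables, in order.
	if err := rows.Scan(&id, &name); err != nil {
		log.Fatalf("Error: Unable to scan row: %v", err)
	}
	fmt.Printf("User ID: %d, Name: %s\n", id, name)
}
// rows.Err reports any error that ended the iteration early.
if err := rows.Err(); err != nil {
	log.Fatalf("Error: Unable to iterate rows: %v", err)
}
```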

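If you only expect a single row in the result, you can use the 'db.QueryRow' method. It returns a '*sql.Row' value that you can scan directly. Any query error is deferred until you call 'Scan', and 'Scan' returns 'sql.ErrNoRows' when no row matched. The '$1' placeholder is PostgreSQL's syntax for a query parameter. Passing values as arguments, rather than concatenating them into the SQL string, protects against SQL injection: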

```go
var id int64
var name string

row := db.QueryRow("SELECT id, name FROM users WHERE id = $1", userID)
if err := row.Scan(&id, &name); errors.Is(err, sql.ErrNoRows) {
	fmt.Println("User not found")
} else if err != nil {
	log.Fatalf("Error: Unable to execute query: %v", err)
} else {
	fmt.Printf("User ID: %d, Name: %s\n", id, name)
}
```

Executing Updates and Inserts

To execute update or insert statements, you can use the 'db.Exec' method. 'db.Exec' takes an SQL statement and its arguments, and returns a 'sql.Result' value and an error:

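```go
result, err := db.Exec("UPDATE users SET email = $1 WHERE id = $2", email, userID)
if err != nil {
	log.Fatalf("Error: Unable to execute update: %v", err)
}

// RowsAffected also returns an error, for example if the driver does not support it.
affectedRows, err := result.RowsAffected()
if err != nil {
	log.Fatalf("Error: Unable to read affected rows: %v", err)
}
fmt.Printf("Updated %d rows\n", affectedRows)
```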

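The 'sql.Result' value provides additional information about the operation. It reports the number of affected rows ('RowsAffected') and, for drivers that support it, the last inserted ID ('LastInsertId'). The 'pq' driver does not support 'LastInsertId'. With PostgreSQL, add a 'RETURNING' clause to the 'INSERT' statement instead and read the generated ID with 'QueryRow'.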

The 'database/sql' package offers a wide range of functionalities for working with databases in Go, including prepared statements, transactions, and more. While it may not provide the most expressive query capabilities or advanced features offered by some third-party libraries, it remains a foundational package for working with databases in Go.

Utilizing Third-Party Libraries

While the standard 'database/sql' package provides essential functionality for interacting with databases, third-party libraries can offer additional features, improved performance, and more expressive query capabilities. In this section, we'll discuss some popular third-party libraries for working with databases in Go and their benefits:

- GORM: GORM is an ORM (object-relational mapping) library for Go that offers a simple and convenient way to interact with databases. GORM supports various SQL databases, including PostgreSQL, MySQL, SQLite, and Microsoft SQL Server. With GORM, you can build queries with a chainable API, automate schema migrations ('AutoMigrate'), and manage database transactions. GORM also supports preloading, querying, and updating associated records in other tables, with relationships declared through struct fields and tags.

- sqlx: sqlx is a set of extensions to the 'database/sql' package that keeps the standard interfaces while removing much of the boilerplate. sqlx can scan query results directly into Go structs and slices of structs ('Get', 'Select', 'StructScan'). It can bind named parameters from structs or maps ('NamedExec', 'NamedQuery') and expand slice arguments for 'IN' clauses ('sqlx.In'). Its wrapped transaction and prepared-statement types offer the same helpers. Unlike an ORM or a query builder, sqlx still has you write the SQL yourself.

- pgx: pgx is a pure-Go PostgreSQL driver and toolkit that aims to offer high performance and full support for PostgreSQL features. It can be used through 'database/sql' (via its 'stdlib' package) or through its own native interface. The native interface generally offers better performance and more control over connection settings than 'database/sql' with 'pq'. With pgx, you can take advantage of PostgreSQL-specific features such as JSON data types, LISTEN/NOTIFY, the binary format for column values, and large object storage.

It's important to consider your project requirements, performance goals, and complexity when selecting a third-party library for interacting with databases in Go. Each library comes with its unique strengths and trade-offs, so make sure to evaluate the documentation, community support, and real-world use cases to make an informed decision.

Concurrency and Connection Pooling

One of the strengths of Go is its concurrency model, built on top of goroutines and channels. This model allows for efficient handling of multiple concurrent tasks, making it an ideal choice for web applications, APIs, and microservices handling multiple database connections. To benefit from Go's concurrency capabilities, proper connection pooling and concurrency management are essential.

Connection pooling is the process of managing a pool of open database connections that are shared and reused among multiple concurrent clients. By using connection pooling, you can improve the performance and scalability of your applications, as opening and closing connections with every request is both time-consuming and resource-intensive.

The 'database/sql' package provides connection pooling out of the box. You can configure pool properties to optimize performance and minimize resource usage, such as the maximum number of open connections, the maximum number of idle connections, and the maximum connection lifetime:

```go
db, err := sql.Open("postgres", "user=pqtest dbname=pqtest sslmode=verify-full")
if err != nil {
	log.Fatal(err)
}

// Set the maximum number of open connections
db.SetMaxOpenConns(100)

// Set the maximum number of idle connections
db.SetMaxIdleConns(25)

// Set the connection expiration time
db.SetConnMaxLifetime(5 * time.Minute)
```

Tune these settings to the specific requirements of your application. Take into account server resources, the database server's own connection limit (PostgreSQL's 'max_connections' setting), and expected request load. For reference, 'database/sql' by default places no limit on open connections and keeps at most two idle connections.

Managing Transactions

Transactions are a crucial concept in database management systems, allowing developers to maintain data integrity and consistency by grouping together a series of operations. Transactions follow the ACID properties (Atomicity, Consistency, Isolation, and Durability), ensuring that the operations either succeed or fail as a whole.

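In Go, you can use the 'database/sql' package to manage transactions. To start a new transaction, call the 'Begin' method on your database handle. Alternatively, use 'BeginTx', which also accepts a context and transaction options such as the isolation level: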

```go
tx, err := db.Begin()
if err != nil {
	log.Fatal(err)
}
```

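Once you have a transaction object ('*sql.Tx'), you can call its 'Exec' or 'Query' methods, just as you would on the database handle, to perform operations within the transaction. Make sure these statements go through 'tx'; anything executed through 'db' runs outside the transaction: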

```go
_, err = tx.Exec("UPDATE users SET balance = balance - 100 WHERE id = 1")
if err != nil {
	// Rollback the transaction if an error occurs
	tx.Rollback()
	log.Fatal(err)
}

_, err = tx.Exec("INSERT INTO transactions (user_id, amount) VALUES (1, -100)")
if err != nil {
	// Rollback the transaction if an error occurs
	tx.Rollback()
	log.Fatal(err)
}
```

To commit the transaction and persist changes to the database, call the 'Commit' method:

```go
err = tx.Commit()
if err != nil {
	log.Fatal(err)
}
```

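If an error occurs before 'Commit', use the 'Rollback' method to cancel the transaction and revert any changes made within it. Calling 'Rollback' after a successful 'Commit' is harmless (it returns 'sql.ErrTxDone'), so deferring 'tx.Rollback()' right after 'Begin' is a common safety net. To roll back explicitly after an error: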

```go
err = tx.Rollback()
if err != nil {
	log.Fatal(err)
}
```

Managing transactions is crucial in scenarios where data consistency and integrity need to be maintained, such as financial systems or multi-step processes. Carefully consider when to use transactions in your Go applications and follow best practices to ensure your application's stability and data reliability.

Finally, it's worth mentioning that platforms like AppMaster provide built-in support for transactions and concurrency in their generated applications, ensuring that your applications benefit from strong performance and reliable data management.

Handling Errors and Monitoring Performance

Managing errors and monitoring performance are crucial aspects of working with databases in Go. In this section, we'll explore error handling mechanisms and some performance monitoring tools to optimize your Go database code and identify potential issues.

Error Handling in Go

Go uses a simple error handling pattern based on the error interface. Functions that can produce an error return a value of the error type as their last return value. To handle errors, you can use the idiomatic if err != nil pattern.

When working with databases, various errors could occur, such as connection issues, invalid queries, or conflicts during transactions. It's essential to handle these errors gracefully and provide appropriate feedback or perform necessary actions depending on the error type.

```go
rows, err := db.Query("SELECT id, name, email FROM users")
if err != nil {
	log.Printf("Error querying users: %v", err)
	return
}
defer rows.Close()

for rows.Next() {
	var user User
	// The column list in the query must match the Scan targets in number and order.
	if err := rows.Scan(&user.ID, &user.Name, &user.Email); err != nil {
		log.Printf("Error scanning user: %v", err)
		continue
	}
	fmt.Printf("User: %v\n", user)
}

if err := rows.Err(); err != nil {
	log.Printf("Error iterating users: %v", err)
}
```

In the example above, we handle errors at various stages: querying the database, scanning row data, and iterating through rows. Proper error handling is crucial for ensuring the reliability and stability of your Go database code.

Performance Monitoring Tools

Optimizing performance and identifying potential bottlenecks in your Go database code can provide significant benefits, especially when dealing with large-scale applications. Some of the common tools for performance monitoring in Go include:

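- pprof: Go's standard library includes profiling support in the 'runtime/pprof' and 'net/http/pprof' packages. Together with the 'go tool pprof' command, they offer CPU profiling, memory profiling, and several visualization options to help identify bottlenecks and optimize your database interactions. Importing 'net/http/pprof' for its side effects registers profiling endpoints under /debug/pprof/:

```go
package main

import (
	"log"
	"net/http"
	_ "net/http/pprof" // registers /debug/pprof/ handlers on http.DefaultServeMux
)

func main() {
	// Serve the profiling endpoints on localhost only, in the background.
	go func() {
		log.Println(http.ListenAndServe("localhost:6060", nil))
	}()

	// Your database code here
}
```

While the program runs, you can collect a 30-second CPU profile with 'go tool pprof http://localhost:6060/debug/pprof/profile?seconds=30'.

- Go Benchmarks: You can write benchmark tests with the built-in 'testing' package to measure the performance of your database operations, and run them with 'go test -bench=.'. These benchmarks can help you evaluate the efficiency of different query strategies or database libraries.

```go
func BenchmarkQuery(b *testing.B) {
	db := setupDatabase() // your own helper (not shown) that opens a test database
	defer db.Close()

	b.ResetTimer()
	for i := 0; i < b.N; i++ {
		rows, err := db.Query("SELECT * FROM users")
		if err != nil {
			b.Fatal(err)
		}
		for rows.Next() {
			// Discard each row; only the query and transfer time are measured.
		}
		if err := rows.Err(); err != nil {
			b.Fatal(err)
		}
		// Close the rows every iteration; an unclosed *sql.Rows keeps its connection busy.
		rows.Close()
	}
}
```

- Third-party tools: Monitoring platforms such as Datadog and Prometheus, used through their Go client libraries, offer more advanced monitoring for Go applications. They provide broader insights and integration options to help you track your database code's performance and resource usage.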

Securing Database Connections

Securing your database connections is an essential step in any Go application development process. Proper security practices ensure that sensitive data is protected from unauthorized access or malicious activities. In this section, we'll explore some methods to secure your database connections in Go.

Encrypt Connections with SSL/TLS

Using encrypted connections with SSL/TLS is critical for keeping your data secure during transmission between your Go application and your database. When connecting to a PostgreSQL database, you can configure SSL/TLS settings using the pq driver:

```go
db, err := sql.Open("postgres", "user=admin password=mysecretpassword dbname=mydb sslmode=require")
if err != nil {
	log.Fatal("Failed to open a database connection: ", err)
}
defer db.Close()
```

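In the example above, we set sslmode to require, which forces an encrypted connection but, on its own, does not verify the server's certificate. Depending on your security requirements, use verify-ca to check that the server certificate is signed by a trusted certificate authority. Use verify-full to additionally check that the server host name matches the certificate. The pq documentation lists disable, require, verify-ca, and verify-full as its supported sslmode values.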

Use Authentication Mechanisms

Implementing proper authentication mechanisms, such as secure password storage and hashing, helps ensure that only authorized users have access to your database. When working with Go and databases, consider the following best practices:

- Never store plaintext passwords in your database. Instead, use a strong cryptographic hash function like bcrypt or scrypt to hash passwords before storing them.

- Use role-based access control to grant the least amount of privileges necessary for users to perform their tasks.

- Don't hard-code credentials or sensitive information in your application code. Use environment variables or configuration files to store database credentials securely.

Manage Access Control and Permissions

Limiting the scope of access and permissions for your database users is crucial for maintaining security. Ensure that:

- Each user has the minimum required permissions to perform their tasks.

- You periodically review user permissions and update them as necessary.

- Access is promptly revoked for users who no longer need it.

Regularly reviewing access control and permissions can reduce the risk of unauthorized users accessing or modifying sensitive information in your database.

In conclusion, handling errors and monitoring performance are essential aspects of working with databases in Go. Proper error handling, utilizing performance monitoring tools, and securing database connections efficiently can contribute to a more reliable, secure, and performant Go application.

The techniques and best practices discussed in this article can be easily implemented in applications built on AppMaster.io, a powerful no-code platform for creating backend, web, and mobile applications that utilize Go for backend data processing.

FAQ

What is Go?

Go, also known as Golang, is an open-source programming language created by Google that emphasizes simplicity, efficiency, and strong support for concurrent programming.

Why is Go a good choice for working with databases?

Go's simplicity, efficient performance, easy concurrency, and strong standard library make it an excellent choice for developing web applications, APIs, and microservices that interact with databases.

How do I connect Go with PostgreSQL?

To connect Go with PostgreSQL, you can use the standard 'database/sql' package in combination with a PostgreSQL driver, such as 'pq', to handle interactions with the database.

What third-party libraries are available for working with databases in Go?

There are several third-party libraries for working with databases in Go, including GORM, sqlx, and pgx, which offer advanced features, improved performance, and more expressive query capabilities.

What is connection pooling?

Connection pooling is the technique of managing a pool of open database connections, allowing applications to reuse connections efficiently. It's essential for improving the performance and scalability of database-heavy applications.

How do I manage transactions in Go?

To manage transactions in Go, use the 'Begin' method provided by the 'database/sql' package. Use 'Commit' to save the transaction and 'Rollback' to undo changes if there's an error.

How can I secure my database connections in Go?

To secure your database connections in Go, use encrypted connections with SSL/TLS, authentication mechanisms like password hashing, and proper access control and permission management.

How do I handle errors and monitor performance in Go database code?

Use Go's error handling mechanisms, such as 'if err != nil', and performance monitoring tools like pprof or third-party libraries to track database performance and identify potential bottlenecks or issues in your code.