How Containerization Is Reshaping Software Architecture

Explore how containerization drives the adoption of microservices, offering flexibility, scalability, and maintainability.

The Emergence of Containerization

Containerization has revolutionized the way software is designed, developed, and deployed. It emerged as a solution to the challenges faced by software developers and operations teams, addressing the inefficiencies caused by inconsistent environments and configurations.

Traditional application deployment involves manually configuring the target system and installing dependencies, which often leads to dependency conflicts, scalability limitations, and unpredictable behavior. The concept of containerization can be traced back to the early 2000s, with technologies such as FreeBSD Jails, Solaris Zones, and IBM Workload Partitions. But it wasn't until the launch of Docker in 2013 that containerization became widely popular.

Docker simplified the process of bundling applications and their dependencies into portable containers, making it easier for developers to manage and deploy applications consistently across different systems. As containerization gained traction, it propelled the shift towards microservices architecture, promoting greater flexibility and scalability in application development. This paradigm shift has profoundly impacted software architecture, encouraging modular designs and simplifying the management of complex applications with multiple components.

Understanding Containers and Their Benefits

Containers are lightweight, portable, and self-contained units that package an application and its dependencies, such as libraries, binaries, and configuration files. Containers provide a consistent environment, so an application behaves the same way on any host with a compatible container runtime. They achieve this consistency by isolating application processes from the rest of the host operating system, which reduces conflicts and inconsistencies between environments. The benefits of containerization are numerous, including:

- Deployment Speed: Containers can be launched in seconds, offering rapid startup and application scaling. This is particularly important in cloud and microservices-based architectures where elasticity and responsiveness are critical.

- Portability: A container includes everything the application needs above the operating system kernel, making the application easy to move between environments, whether during development, testing, or production.

- Resource Efficiency: Containers share the host operating system kernel, rather than requiring a full guest operating system like virtual machines. This results in lower resource usage, reducing the overhead of running multiple instances of an application.

- Process Isolation: Containers create isolated processes that execute in their own namespace and filesystem, preventing interference with other containers or the host OS. This enhances security and stability, especially in multi-tenant and shared environments.

- Compatibility and Maintainability: By encapsulating dependencies, containers reduce the complexity of dealing with software versioning and compatibility issues, making it easier to update or roll back application components.

Containers Versus Virtual Machines

It's important to distinguish between containers and virtual machines, as they serve different purposes and have unique advantages and disadvantages. Both aim to provide isolation and consistency for applications, but they achieve it through different means.

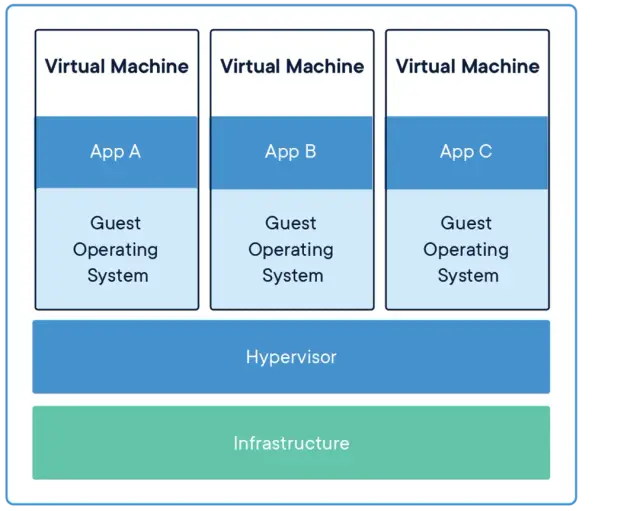

Virtual machines (VMs) are essentially emulated hardware environments, where an application, its dependencies, and a complete guest operating system are executed on virtualized resources provided by a hypervisor. A hypervisor is a software layer managing virtual machines on the host system. VMs offer strong isolation but consume significantly more resources due to the overhead of running multiple full guest operating systems.

Image Source: Docker

Containers, on the other hand, are lightweight and efficient. They share the host OS kernel and isolate the application processes within their namespace and filesystem, without needing an entire guest operating system. This results in faster startup times, lower resource usage, and higher density on the host system. The specific requirements of your applications and infrastructure should guide the choice between containers and VMs.
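To make the density argument concrete, here is a back-of-the-envelope calculation. Every figure in it (host memory, application footprint, per-VM and per-container overhead) is a hypothetical number chosen for illustration, not a measurement, and the model ignores CPU, storage, and hypervisor overhead.

```python
# All figures below are hypothetical, chosen only to illustrate the arithmetic.
HOST_MEMORY_MB = 65_536        # a 64 GiB host
APP_MEMORY_MB = 512            # memory the application itself needs
GUEST_OS_OVERHEAD_MB = 1_024   # assumed per-VM cost of a full guest OS
CONTAINER_OVERHEAD_MB = 16     # assumed per-container runtime overhead


def max_instances(host_mb: int, app_mb: int, overhead_mb: int) -> int:
    """Return how many instances fit in host memory, given a per-instance overhead."""
    return host_mb // (app_mb + overhead_mb)


vm_count = max_instances(HOST_MEMORY_MB, APP_MEMORY_MB, GUEST_OS_OVERHEAD_MB)
container_count = max_instances(HOST_MEMORY_MB, APP_MEMORY_MB, CONTAINER_OVERHEAD_MB)

print(f"VMs per host:        {vm_count}")
print(f"Containers per host: {container_count}")
```

Under these assumed numbers the same host fits roughly three times as many containers as VMs. The real ratio depends entirely on the workload and the guest operating system.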

While VMs might be suitable for scenarios where strong isolation and entirely separate environments are necessary, containers offer more agility and resource efficiency in situations where rapid deployment and scaling are crucial. When used together, containers and virtual machines can complement each other within a larger infrastructure, delivering the best combination of isolation, flexibility, and resource efficiency where needed. For example, VMs can serve as the base layer providing security and runtime isolation, while containers enable rapid deployment and scaling of applications on top of these VMs.

The Shift to Microservices Architectures

Containerization has paved the way for the rise of microservices as a predominant software architectural pattern. Microservices entail breaking down applications into small, discrete services that communicate with one another via APIs. This approach improves modularity, maintainability, and scalability, as individual services can be developed, tested, and deployed independently.

The nature of containers allows for the encapsulation of each service in its own container, providing process and resource isolation, which perfectly matches the concepts underlying microservices architecture. As a result, containerization enables rapid provisioning, efficient resource utilization, and increased flexibility in managing complex microservices-based applications.

By combining containers with microservices, software developers can achieve continuous delivery, enabling them to rapidly and reliably adapt their applications to the ever-changing requirements of modern businesses. A key advantage of containerization in microservices is the ability to scale each microservice independently. This allows for a more granular approach to resource allocation, ensuring that each service has the resources it needs to function efficiently without overprovisioning. When demand for a particular microservice increases, it can be automatically scaled without affecting other services in the application.
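Independent scaling can be sketched with a simple proportional rule: scale each service's replica count by the ratio of its observed load to its target load, rounding up. The Kubernetes Horizontal Pod Autoscaler documentation describes a rule of this shape, but real autoscalers add tolerances and stabilization windows that are omitted here. The service names and CPU figures are hypothetical, and the floor of one replica is an assumption of this sketch.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float) -> int:
    """Proportional scaling rule: ceil(current_replicas * current_metric / target_metric)."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    # Never scale below one replica (an assumption of this sketch).
    return max(1, math.ceil(current_replicas * current_metric / target_metric))


# Hypothetical services: (current replicas, observed CPU %, target CPU %)
services = {
    "checkout": (3, 90.0, 60.0),
    "catalog": (4, 30.0, 60.0),
    "search": (2, 60.0, 60.0),
}

# Each service is evaluated on its own; none of them affects the others.
plan = {name: desired_replicas(*state) for name, state in services.items()}
print(plan)
```

Here the overloaded checkout service grows, the underused catalog service shrinks, and search stays where it is, all without touching one another.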

Impact of Containerization on Software Development

Containerization has a significant impact on software development in many areas, such as:

- Accelerated Deployment and Testing: Containers can be launched quickly due to their lightweight nature, streamlining the development and testing process. With containerization, developers can create and destroy entire environments within minutes, which makes it easier to test various configurations and scenarios.

- Improved Portability and Consistency: Containers bundle the application code and its dependencies, creating an isolated and consistent environment regardless of the underlying infrastructure. This enables developers to run their applications on any system that supports containers, without worrying about compatibility issues between different operating systems or runtime environments.

- Simplified Application Management: Containers simplify the management of complex, multi-component applications by allowing each component to be packaged, configured, and deployed independently. This reduces dependencies between components, minimizes configuration drift, and makes it easier to update individual components without affecting the entire system.

- Enhanced Scalability: Containers make it easier to scale applications, as deploying additional instances is quick and consumes fewer resources than virtual machines. This allows for dynamic scaling of applications based on demand, ensuring optimal resource utilization and reduced operational costs.

- Support for DevOps and Continuous Integration/Delivery: Containerization fosters collaboration between development and operations teams, supporting DevOps methodologies. With containers, teams can build, test, and deploy applications rapidly, making the continuous integration/delivery (CI/CD) pipeline more efficient and effective.
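One common way to keep a single container image consistent across development, testing, and production is to read configuration from environment variables rather than baking it into the image. The sketch below assumes hypothetical variable names (`DATABASE_URL`, `LOG_LEVEL`, `PORT`) and hypothetical defaults. It illustrates the idea and is not a prescribed layout.

```python
import os
from dataclasses import dataclass


@dataclass(frozen=True)
class Settings:
    database_url: str
    log_level: str
    port: int


def load_settings(env=None) -> Settings:
    """Build settings from environment variables, falling back to development defaults."""
    env = os.environ if env is None else env
    return Settings(
        database_url=env.get("DATABASE_URL", "sqlite:///dev.db"),
        log_level=env.get("LOG_LEVEL", "DEBUG"),
        port=int(env.get("PORT", "8080")),
    )


# The same code (and so the same image) behaves differently only through its environment.
dev = load_settings({})
prod = load_settings(
    {"DATABASE_URL": "postgres://db.internal/app", "LOG_LEVEL": "WARNING", "PORT": "80"}
)
print(dev)
print(prod)
```

Because the image itself never changes between environments, the only thing left to drift is the small set of variables passed to it at startup.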

Adapting Software Design Patterns

Containerization has also prompted the evolution of software design patterns to accommodate its characteristics and benefits. These new patterns exploit the isolation, portability, and scalability containers provide. Some notable software design patterns influenced by containerization are:

- Sidecar Pattern: In the sidecar pattern, a container is deployed alongside the primary container, providing additional functionality that supports the main application. The sidecar container can handle monitoring, logging, and configuration management tasks, allowing the primary container to focus on executing its core function. This pattern promotes separation of concerns and simplifies the main application's design.

- Ambassador Pattern: The ambassador pattern involves deploying a container that acts as a proxy between the main application container and external services. This pattern enables the abstraction of communication details, such as service discovery, load balancing, and protocol translations, making it easier for developers to reason about the main application's behavior and dependencies.

- Adapter Pattern: The adapter pattern employs a container that modifies the main application's output or input to conform to the expectations of other services or systems. This pattern provides a way to manage inconsistencies between different service interfaces without modifying the main application or service, enhancing the design's adaptability and maintainability.
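As a minimal sketch of the adapter pattern, suppose the main application emits `key=value` log lines while a downstream system expects JSON. Both formats and the function name `adapt_line` are assumptions made for this example. In a real deployment this logic would run in its own container and read the main container's output, which is not shown here.

```python
import json


def adapt_line(line: str) -> str:
    """Convert one 'key=value key=value' line into a JSON object string."""
    record = {}
    for token in line.split():
        key, sep, value = token.partition("=")
        if sep:  # skip tokens that are not key=value pairs
            record[key] = value
    return json.dumps(record, sort_keys=True)


# Hypothetical output from the main application container.
legacy_output = "level=info service=billing latency_ms=42"
print(adapt_line(legacy_output))
```

The main application keeps writing its original format, and only the adapter needs to change if the downstream system's expectations change.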

Containerization has reshaped software architecture by enabling a shift towards microservices, offering increased flexibility, scalability, and maintainability. The impact of containerization on software development is evident in areas like accelerated deployment, improved portability, simplified application management, enhanced scalability, and support for DevOps.

As a result, new software design patterns have emerged to accommodate these changes and leverage the benefits provided by containerization. Containerization continues to drive innovation in software development, allowing both developers and organizations to create and manage applications more efficiently and effectively.

Container Orchestration and Deployment Tools

Container orchestration is the process of automating the deployment, scaling, and management of containers. The growing use of containerization has fueled the development of several orchestration and deployment tools to streamline containerized application management. Let's dive into some popular container orchestration and deployment tools that have shaped the way modern applications are built and run.
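Before looking at specific tools, it helps to see the idea most orchestrators share: compare the desired state with what is actually running, then act on the difference. The sketch below is a simplified illustration of that loop, not any particular tool's implementation. The service names and the `reconcile` function are hypothetical.

```python
from collections import Counter


def reconcile(desired: dict[str, int], running: list[str]) -> list[tuple[str, str]]:
    """Return the (action, service) steps needed to make `running` match `desired`."""
    actual = Counter(running)
    actions = []
    for service in sorted(set(desired) | set(actual)):
        diff = desired.get(service, 0) - actual.get(service, 0)
        if diff > 0:
            actions.extend([("start", service)] * diff)
        elif diff < 0:
            actions.extend([("stop", service)] * -diff)
    return actions


# Hypothetical cluster: one web replica is missing, one worker is extra,
# and a cache container is running that nobody asked for.
desired_state = {"web": 3, "worker": 2}
running_containers = ["web", "web", "worker", "worker", "worker", "cache"]
for action, service in reconcile(desired_state, running_containers):
    print(action, service)
```

Running a loop like this repeatedly is what lets an orchestrator replace a crashed container or apply a scaling change without manual intervention.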

Kubernetes

Kubernetes is an open-source container orchestration platform, originally designed by Google, that automates container deployment, scaling, and management. It runs containerized applications across a cluster of nodes, providing high availability and fault tolerance. Key features include self-healing, horizontal scaling, rolling updates, storage orchestration, and load balancing. Notable characteristics of Kubernetes include:

- Efficient resource utilization: Kubernetes optimizes resource usage by packing containers onto host nodes based on resource requirements.

- Flexibility and extensibility: Kubernetes supports a wide range of container runtimes, storage drivers, and network providers, ensuring maximum flexibility in container infrastructure.

- Strong developer community: Kubernetes has a large, active community, which contributes to the platform's powerful ecosystem of plugins, integrations, and innovative solutions.
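The idea of packing containers onto nodes by resource requirements can be illustrated with a classic first-fit decreasing heuristic. This is a deliberately simple stand-in and not how the Kubernetes scheduler actually works, since the real scheduler filters and scores nodes on many criteria. The container names, CPU requests, and node capacity are hypothetical.

```python
def pack_first_fit(requests: dict[str, int], node_capacity: int) -> list[list[str]]:
    """Place containers on nodes with first-fit decreasing; returns container names per node."""
    nodes: list[list[str]] = []
    free: list[int] = []
    # Largest requests first, so big containers are not stranded at the end.
    for name, cpu in sorted(requests.items(), key=lambda item: item[1], reverse=True):
        if cpu > node_capacity:
            raise ValueError(f"{name} requests more than a node can offer")
        for i, remaining in enumerate(free):
            if cpu <= remaining:
                nodes[i].append(name)
                free[i] -= cpu
                break
        else:
            # No existing node has room: open a new one.
            nodes.append([name])
            free.append(node_capacity - cpu)
    return nodes


# Hypothetical CPU requests in millicores; each node offers 1000.
cpu_requests = {"api": 600, "db": 700, "cache": 300, "worker": 400, "cron": 100}
placement = pack_first_fit(cpu_requests, node_capacity=1000)
print(placement)
```

With these requests the five containers fit on three nodes, two of which are completely full.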

Docker Swarm

Docker Swarm is a native container orchestration tool for the popular Docker container platform. It can be used to form a swarm, a group of Docker nodes that can run distributed applications using container service definitions. Docker Swarm provides ease of management, service discovery, and load balancing functionalities, making it a great choice for managing Docker containers. Some of the advantages of Docker Swarm include:

- Simplicity: Docker Swarm is designed to be simple and easy to use, requiring minimal setup and configuration.

- Integration with Docker tools: Docker Swarm works with other Docker tools like Docker Compose and Docker Machine (the latter is now deprecated), making it convenient for those already familiar with the Docker ecosystem.

- Platform Agnostic: Docker Swarm can run on any operating system and infrastructure that supports Docker.
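Service discovery and load balancing boil down to two steps: resolve a service name to its replicas, then spread requests across them. The sketch below shows that idea with a simple round-robin registry. Swarm handles this internally, and its actual mechanism is not reproduced here. The `ServiceRegistry` class and the addresses are hypothetical.

```python
import itertools


class ServiceRegistry:
    """Maps a service name to its replica addresses and hands them out in turn."""

    def __init__(self) -> None:
        self._cycles = {}

    def register(self, service: str, addresses: list[str]) -> None:
        """Record the replicas that currently back `service`."""
        self._cycles[service] = itertools.cycle(list(addresses))

    def resolve(self, service: str) -> str:
        """Return the next replica address for `service` (round-robin)."""
        if service not in self._cycles:
            raise KeyError(f"unknown service: {service}")
        return next(self._cycles[service])


registry = ServiceRegistry()
registry.register("web", ["10.0.0.2:80", "10.0.0.3:80", "10.0.0.4:80"])
picks = [registry.resolve("web") for _ in range(4)]
print(picks)
```

Callers only ever use the name "web", so replicas can be added or replaced without changing client code.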

Apache Mesos

Apache Mesos is an open-source cluster management platform that can manage resources and schedule tasks across distributed computing environments. It supports both container orchestration (using tools like Marathon and Kubernetes) and native application scheduling. The key selling point of Apache Mesos is its ability to manage resources at scale, as it can handle tens of thousands of nodes in a single cluster. Critical features of Apache Mesos are:

- Scalability: Mesos is designed for large-scale systems, capable of handling massive amounts of resources and tasks.

- Two-level scheduling: Mesos offers available resources to frameworks, and each framework's own scheduler decides which offers to accept. This lets containerized and non-containerized workloads share one cluster, simplifying resource management across different application types.

- Plug-in architecture: Mesos supports pluggable scheduling modules, allowing users to customize the platform according to their needs.
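Mesos allocates resources through offers: the master offers a node's free resources to a framework, and the framework's scheduler decides whether to use them. The sketch below is a heavily simplified model of that exchange. The `Offer` and `Framework` classes, the framework names, and the rule that an accepted offer is consumed whole are all assumptions of this example, not the Mesos API.

```python
from dataclasses import dataclass


@dataclass
class Offer:
    node: str
    cpus: float
    mem_mb: int


class Framework:
    """A hypothetical framework scheduler that accepts offers big enough for its tasks."""

    def __init__(self, name: str, task_cpus: float, task_mem_mb: int, tasks_wanted: int):
        self.name = name
        self.task_cpus = task_cpus
        self.task_mem_mb = task_mem_mb
        self.tasks_wanted = tasks_wanted

    def consider(self, offer: Offer) -> bool:
        """Second level: the framework decides whether to use the offered resources."""
        fits = offer.cpus >= self.task_cpus and offer.mem_mb >= self.task_mem_mb
        if fits and self.tasks_wanted > 0:
            self.tasks_wanted -= 1
            return True
        return False


def allocate(offers: list[Offer], frameworks: list[Framework]) -> list[tuple[str, str]]:
    """First level: offer each node's resources to the frameworks in turn."""
    launched = []
    for offer in offers:
        for framework in frameworks:
            if framework.consider(offer):
                launched.append((framework.name, offer.node))
                break  # in this simplified model an accepted offer is consumed whole
    return launched


offers = [Offer("node-1", 4.0, 8192), Offer("node-2", 1.0, 1024), Offer("node-3", 2.0, 4096)]
frameworks = [
    Framework("batch", 2.0, 4096, tasks_wanted=1),
    Framework("web", 0.5, 512, tasks_wanted=2),
]
assignments = allocate(offers, frameworks)
print(assignments)
```

The point of the split is that the cluster manager does not need to understand each workload; it only hands out resources, and each framework applies its own placement logic.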

Integration with Low-Code and No-Code Platforms

Low-code and no-code platforms have gained significant traction in recent years, enabling efficient application development without writing extensive amounts of code. Containerization can improve the performance, scalability, and maintainability of the applications these platforms produce. One such example is AppMaster.io, a powerful no-code platform that empowers users to build backend, web, and mobile applications visually.

When a user presses the 'Publish' button, AppMaster generates source code, compiles the applications, packages them into Docker containers, and deploys them to the cloud. This streamlined approach enables faster and more cost-effective application development while eliminating technical debt by regenerating applications from scratch whenever requirements are modified. By integrating containerization with low-code and no-code platforms, developers of all skill levels can benefit from a more efficient, scalable, and accessible application development process. Containerization can also enhance these platforms' capabilities by:

- Simplifying deployment: Containers package applications and their dependencies together, ensuring a consistent deployment experience across development and production environments.

- Enhancing scalability: With containerized applications, scaling out specific components independently becomes easy, allowing low-code and no-code platforms to offer more fine-grained control over application scaling.

- Reducing infrastructure complexity: Containers abstract the underlying infrastructure, making it easier for low-code and no-code platforms to manage underlying resources and integrate with various cloud providers.

The synergy between containerization and low-code or no-code platforms paves the way for a more efficient and accessible application development experience. By combining the benefits of containerization and the simplicity of these platforms, businesses can stay innovative and competitive in an ever-evolving technology industry.

FAQ

What is containerization?

Containerization is the process of bundling an application and its dependencies into a portable container. Containers allow for consistent deployment and operation across different environments, enhancing productivity and efficiency.

How do containers differ from virtual machines?

Containers share the host OS kernel and isolate application processes, making them lightweight and faster to launch. Virtual machines, on the other hand, include a full guest OS for each VM, consuming more resources.

How does containerization affect software architecture?

Containerization enables the shift toward microservices architecture, providing greater flexibility, scalability, and maintainability. It encourages modular design, simplifies deployment, and reduces the complexity of managing multi-component applications.

What are the benefits of containers?

Containers offer numerous benefits, such as increased deployment speed, process isolation, resource efficiency, and ease of management. They also enable application portability, ensuring consistency across different environments.

What are some popular container orchestration tools?

Some popular container orchestration tools include Kubernetes, Docker Swarm, and Apache Mesos. These tools automate container deployment, scaling, and management, simplifying the process of managing complex containerized applications.

How does containerization work with low-code and no-code platforms?

Containerization integrates well with low-code and no-code platforms, such as AppMaster.io, allowing developers to design, deploy, and scale applications more efficiently. It streamlines the application development process, making it faster and more cost-effective.

What are common use cases for containerization?

Common use cases for containerization include deploying and scaling web applications, creating development and testing environments, running batch jobs or background tasks, and supporting microservices-based application architectures.

Can existing applications be migrated to containers?

Yes. Existing applications can be packaged into containers and migrated to a containerized environment. However, this may require adjustments to the application architecture, such as breaking a monolithic application into microservices.