Understanding Load Balancing in Microservices with NGINX: An In-Depth Guide

Learn how NGINX facilitates load balancing in a microservices architecture. This in-depth guide covers the basics, methods, advantages, and practical examples.

Introduction to Load Balancing with NGINX

In software development, a key challenge is ensuring high availability and performance across network services. This is especially important in a distributed system such as a microservices architecture, where multiple independent services interact continuously. NGINX, a widely used open-source web server, addresses this challenge through load balancing.

Load balancing, as the term suggests, distributes network or application traffic across a group of servers or endpoints. The aim is to keep any single server from being overwhelmed so that every request can be handled promptly. NGINX can act as a load balancer in addition to its other roles as an HTTP server, reverse proxy, mail proxy, and cache server. It uses an event-driven architecture that provides low memory usage and high concurrency, which makes it well suited to handling tens of thousands of simultaneous connections.

The primary goal of load balancing with NGINX is to make web applications more resilient and efficient. It directs client requests across multiple servers to improve resource utilization, application performance, and uptime.

Microservices Architecture: A Brief Overview

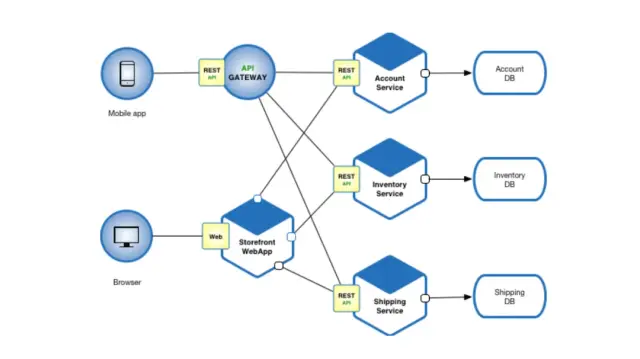

Microservices architecture has been a game-changer for modern application development. Unlike monolithic architecture, where an application is built as a single unit, microservices architecture breaks an application down into small, loosely coupled services. Each microservice is standalone and implements a separate business function.

Microservices, as single-function modules with well-defined interfaces, offer numerous advantages, from independent development cycles to flexible deployment and scaling. Managing communication between services, however, can be challenging. This is where NGINX comes in: it provides an efficient way of routing requests between these services using load balancing and reverse proxying.

Load balancing is a core element of a microservices architecture. However independently microservices are designed, some of them eventually receive more traffic or are more performance-critical than others. Load balancing ensures that such services do not get overloaded and stall the entire application.

Implementing Load Balancing in Microservices with NGINX

When utilizing NGINX in a microservices configuration, the software plays the role of a reverse proxy server and load balancer. The terms reverse proxy and load balancer are often used interchangeably but serve slightly different purposes. A reverse proxy sends client requests to the appropriate backend server, and a load balancer distributes network traffic to multiple servers to ensure no single server becomes a bottleneck.

NGINX's load-balancing methods empower developers to distribute client requests across service instances more efficiently in a microservices setup. NGINX offers several load-balancing methods:

- Round Robin: This straightforward method is NGINX's default. It rotates client requests evenly across all servers and is ideal when the servers are roughly identical in terms of resources.

- Least Connections: This method is more dynamic, routing each new request to the server with the fewest active connections. It is well suited to cases where request durations or server capacities differ, because a slower or busier server accumulates open connections and therefore receives fewer new requests.

- IP Hash: With this method, the client's IP address is used as a key to determine which server handles the request, so all of a client's requests are served by the same server as long as that server is available. It suits applications that require 'sticky sessions.'
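The three methods above boil down to three different selection rules. The following Python sketch illustrates those rules only; it is not NGINX's implementation, the server addresses are hypothetical, and the SHA-256 hash is an assumption chosen for illustration (NGINX uses its own hash function).

```python
import hashlib
from itertools import cycle

# Hypothetical backend addresses, used only for illustration.
SERVERS = ["10.0.0.1:8080", "10.0.0.2:8080", "10.0.0.3:8080"]


def round_robin(servers):
    """Return a picker that hands out servers in rotation."""
    rotation = cycle(servers)
    return lambda: next(rotation)


def least_connections(active):
    """Pick the server with the fewest active connections.

    `active` maps server -> current connection count; on a tie the
    server listed first wins.
    """
    return min(active, key=active.get)


def ip_hash(servers, client_ip):
    """Map a client IP to a fixed server so repeat requests stick."""
    digest = hashlib.sha256(client_ip.encode("utf-8")).digest()
    return servers[int.from_bytes(digest[:8], "big") % len(servers)]


if __name__ == "__main__":
    pick = round_robin(SERVERS)
    print([pick() for _ in range(4)])  # fourth pick wraps to the first server
    print(least_connections({SERVERS[0]: 12, SERVERS[1]: 3, SERVERS[2]: 7}))
    # The same client IP always maps to the same server.
    print(ip_hash(SERVERS, "203.0.113.7") == ip_hash(SERVERS, "203.0.113.7"))
```

Note that in this sketch the IP-hash mapping changes for many clients whenever the server list changes length, which is one reason sticky sessions based on hashing are best treated as a convenience rather than a guarantee.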

In addition to these methods, NGINX supports SSL/TLS termination, health checks, and more. These features make load balancing more robust, and developers can choose the strategy that best fits their use case.

Implementing load balancing in a microservices architecture with NGINX involves setting up an NGINX instance as a reverse proxy server. This server handles incoming client requests and forwards them to the appropriate microservice instances based on the chosen load-balancing method. To implement this, developers usually define an upstream block in the NGINX configuration file. This block lists the backend servers and names the load-balancing method to use.
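To make the two roles concrete, here is a minimal Python model of the flow just described: a routing step picks a named group of backends (the reverse proxy role), and a selection step picks one instance inside that group (the load balancer role). The `Upstream` class, the path prefixes, and the addresses are all hypothetical names for illustration; this models the idea, not NGINX's configuration syntax or internals.

```python
from dataclasses import dataclass, field
from itertools import cycle


@dataclass
class Upstream:
    """A named group of backend instances, served in round-robin order."""
    name: str
    servers: list
    _rotation: object = field(init=False, repr=False)

    def __post_init__(self):
        self._rotation = cycle(self.servers)

    def next_server(self):
        return next(self._rotation)


# Hypothetical services and instance addresses.
ROUTES = {
    "/orders/": Upstream("orders", ["10.0.1.1:8080", "10.0.1.2:8080"]),
    "/users/": Upstream("users", ["10.0.2.1:8080"]),
}


def route(path):
    """Return (service name, chosen instance) for a request path.

    Step 1 (reverse proxy): match the path prefix to an upstream group.
    Step 2 (load balancer): pick the next instance within that group.
    Returns None when no prefix matches.
    """
    for prefix, upstream in ROUTES.items():
        if path.startswith(prefix):
            return upstream.name, upstream.next_server()
    return None
```

Calling `route("/orders/42")` repeatedly alternates between the two hypothetical orders instances, while every `/users/` request lands on the single users instance.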

All this might sound complex on paper. Visual platforms like the AppMaster no-code platform simplify the process by allowing developers to generate microservices-based applications, including ready-to-use NGINX configurations, starting from blueprints. It is akin to building a Lego tower.

Whether done manually or with code-generating platforms, implementing load balancing in microservices with NGINX helps applications remain responsive and resilient to traffic spikes.

Benefits of NGINX Load Balancing

Load balancing is critical to the availability and reliability of applications deployed in a microservices architecture. NGINX offers several substantial benefits in this role:

- Scalability: NGINX effectively scales applications by balancing load across multiple instances of your application. This helps in accommodating more traffic and growing your infrastructure as needed.

- Improved Performance: By distributing requests across all available servers, NGINX keeps any single server from being overwhelmed. This improves response times and application performance.

- Highly Configurable: NGINX offers various load-balancing algorithms to suit different needs. Users can choose between round robin, least connections, and IP hash (or a hash on another key), while the commercial NGINX Plus adds a least-time (lowest latency) method and session persistence.

- Fault Tolerance: NGINX helps achieve fault tolerance through its health checks. It tracks the health of backend servers and takes unresponsive servers out of rotation, so requests are not routed to failed instances.
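The fault-tolerance behavior described above can be sketched as a pool that counts consecutive failures per server and skips any server that reaches a threshold. This is a simplified illustration, not NGINX's implementation: the `Pool` class and its `max_fails` parameter are hypothetical, and a server here recovers only when a success is reported for it.

```python
class Pool:
    """Round-robin pool that skips servers marked as failed."""

    def __init__(self, servers, max_fails=3):
        self.servers = list(servers)
        self.max_fails = max_fails  # consecutive failures before removal
        self.fails = {s: 0 for s in self.servers}
        self._next = 0

    def report(self, server, ok):
        """Record the outcome of a request or probe for one server."""
        self.fails[server] = 0 if ok else self.fails[server] + 1

    def healthy(self):
        """Servers that have not reached the failure threshold."""
        return [s for s in self.servers if self.fails[s] < self.max_fails]

    def pick(self):
        """Return the next healthy server, or None if none is left."""
        candidates = self.healthy()
        if not candidates:
            return None
        server = candidates[self._next % len(candidates)]
        self._next += 1
        return server
```

For example, after two failed reports for one server in a pool created with `max_fails=2`, `pick()` rotates only among the remaining servers until a successful report resets that server's counter.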

Tips for Optimizing NGINX for Microservices

While NGINX is an excellent tool for load balancing in a microservices environment, a few tips can enhance its performance:

- Use Connection Pooling: Connection pooling keeps connections to backend servers open and reuses them for multiple client requests (in NGINX, through the keepalive directive in the upstream block), reducing connection latency and saving system resources.

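- Health Checks: Check server health continuously so that failures are detected early and requests are not sent to failing servers. Active health checks, which probe servers periodically, are a feature of NGINX Plus; open-source NGINX relies on passive checks configured with the max_fails and fail_timeout server parameters.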

- Monitor NGINX Performance: Monitor key indicators like CPU usage, memory usage, and the number of active connections. Regular monitoring helps you detect potential issues before they escalate.

- Optimize Configurations: Adjust the NGINX buffer and timeout settings according to your server’s capacity to avoid server overload.
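To see why the connection pooling tip above saves work, consider this small Python sketch. It uses a fake connection class that only counts how many connections are opened; the class names and the `max_idle` limit are hypothetical and stand in for whatever a real proxy would manage.

```python
class FakeConnection:
    """Stand-in for a TCP connection; counts how many were opened."""
    opened = 0

    def __init__(self, server):
        FakeConnection.opened += 1
        self.server = server


class ConnectionPool:
    """Keeps up to `max_idle` idle connections per backend for reuse."""

    def __init__(self, max_idle=2):
        self.max_idle = max_idle
        self.idle = {}  # server -> list of idle connections

    def acquire(self, server):
        """Reuse an idle connection if one exists, else open a new one."""
        idle = self.idle.get(server)
        if idle:
            return idle.pop()
        return FakeConnection(server)

    def release(self, conn):
        """Return a connection to the pool; drop it if the pool is full."""
        idle = self.idle.setdefault(conn.server, [])
        if len(idle) < self.max_idle:
            idle.append(conn)
```

With this sketch, five sequential acquire-and-release cycles against the same backend open only one connection, whereas without the pool each request would pay the connection setup cost again.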

AppMaster and NGINX: A Powerful Combination

The capabilities of NGINX as a load balancer and server become more potent when combined with the AppMaster no-code platform. Here’s why it’s a compelling combination:

- Efficiency: By utilizing AppMaster, you can quickly generate applications with built-in support for microservices and load balancing. This capability saves considerable time and resources that would otherwise go into manual coding and setup.

- Flexibility: As users press the ‘Publish’ button, AppMaster produces source code for the applications, compiles applications, runs tests, and deploys to the cloud. This process allows users to host applications on-premises or in the cloud.

- Reduced Technical Debt: By regenerating applications from scratch whenever requirements are modified, AppMaster eliminates technical debt, improving application maintainability and future project health.

- Integration: AppMaster supports integrating any PostgreSQL-compatible database as a primary database, enhancing its compatibility with numerous applications.

For a small business or a large-scale enterprise alike, the combination of AppMaster and NGINX can provide a scalable and efficient solution for managing microservices and load balancing.

Wrapping Up

Having walked through load balancing in microservices with NGINX, it is clear that load balancing is a crucial strategy for keeping a distributed architecture available and performant. Irrespective of your organization's scale or the size of your user base, implementing efficient load balancing with NGINX can significantly enhance your applications' performance, stability, and scalability. For applications built as distributed, microservice-based systems, NGINX's rich feature set, efficiency, and reliability make it a leading choice among developers.

NGINX's ability to spread requests across servers improves resource usage, prevents server overloads, and helps handle high traffic volumes, keeping applications responsive and available. Even so, setting up and managing load-balancing configurations can still pose challenges, especially for those new to these concepts or when dealing with complex systems. That is where the AppMaster platform can help. AppMaster is a no-code tool for designing and managing web, mobile, and backend applications.

AppMaster supports building applications based on microservices architecture and simplifies the process of setting up NGINX configurations. Starting from blueprints, it reduces the complexity and technical know-how involved in configuring and deploying load-balancing strategies through NGINX. More importantly, AppMaster is designed to eliminate technical debt by regenerating applications from scratch whenever the blueprints or requirements are modified. This approach keeps your applications aligned with the latest requirements, keeps them maintainable, and avoids the build-up of obsolete code.

Combining microservices architecture and NGINX load balancing with the simplicity and time savings of the AppMaster no-code platform makes it easier to build highly scalable, performant applications. The result is faster delivery of value to your customers and more effective development workflows.

As the tech industry continues to evolve, so does the need for scalable and highly performant applications. By pairing load balancing in microservices using NGINX with the efficiency of the AppMaster platform, organizations can speed up their application development and deployment processes to meet modern business demands. The journey might seem complex, but the beauty of technology is in its potential to simplify.

FAQ

What is load balancing in microservices?

Load balancing in microservices is the act of distributing network or application traffic across a cluster of servers, thereby improving application responsiveness and availability.

What is NGINX?

NGINX is a popular open-source web server used for reverse proxying, load balancing, and more. It's known for its high performance, stability, and rich feature set.

How does NGINX load balancing work?

NGINX load balancing works by distributing incoming requests across the available servers according to the configured method, resulting in better resource usage and preventing server overload.

Why is load balancing important in a microservices architecture?

Load balancing is fundamental in microservices architecture as it ensures that all the services can efficiently process requests without any single service becoming a performance bottleneck.

What are the benefits of load balancing with NGINX?

Benefits include efficient distribution of network or application traffic, robust handling of high traffic volumes, prevention of server overload, and improved application responsiveness and availability.

How does AppMaster eliminate technical debt?

AppMaster eliminates technical debt by regenerating applications from scratch whenever requirements are modified. This means there is no pile-up of outdated and unnecessary code, which improves maintainability and long-term project health.