OpenAI Reveals GPT-4: The Multimodal AI That Transforms Text & Image Understanding

OpenAI has introduced GPT-4, an advanced multimodal AI model that processes both text and images, opening up numerous use cases. The model is now available to ChatGPT Plus subscribers, with API access planned.

OpenAI has unveiled GPT-4, its text and image understanding model, describing it as the latest milestone in its effort to scale up deep learning. The model processes text and also interprets images, significantly surpassing the capabilities of its predecessor, GPT-3.5.

GPT-4 is available to ChatGPT Plus subscribers, subject to a usage cap. Through the API, it is priced at $0.03 per 1,000 prompt tokens (about 750 words) and $0.06 per 1,000 completion tokens (again, about 750 words). Developers can join a waiting list for API access.
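To make the per-token prices concrete, here is a minimal Python sketch that estimates the cost of a single request at the rates quoted above. The function name `estimate_cost` and the constant names are illustrative, not part of any OpenAI library, and the token counts are assumed to be known in advance.

```python
from decimal import Decimal

# Per-1,000-token prices quoted in the article (USD).
PROMPT_PRICE_PER_1K = Decimal("0.03")
COMPLETION_PRICE_PER_1K = Decimal("0.06")


def estimate_cost(prompt_tokens: int, completion_tokens: int) -> Decimal:
    """Estimate the USD cost of one request at the quoted rates.

    Hypothetical helper for illustration; not an official calculator.
    """
    if prompt_tokens < 0 or completion_tokens < 0:
        raise ValueError("token counts must be non-negative")
    prompt_cost = PROMPT_PRICE_PER_1K * prompt_tokens / 1000
    completion_cost = COMPLETION_PRICE_PER_1K * completion_tokens / 1000
    return prompt_cost + completion_cost


# Example: a 1,000-token prompt that yields a 1,000-token completion.
print(estimate_cost(1000, 1000))
```

Using `Decimal` rather than floats keeps the arithmetic exact for currency amounts. At these rates, completion tokens cost twice as much as prompt tokens, so long outputs dominate the bill.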

GPT-4 has also drawn attention for having been quietly integrated into various applications. It powers Microsoft’s Bing Chat, a chatbot developed in collaboration with OpenAI. Other early adopters include Stripe, which uses GPT-4 to summarize business websites for support staff; Duolingo, which has built GPT-4 into its premium language learning subscription; and Morgan Stanley, which uses GPT-4 to retrieve information from company documents and deliver it to financial analysts. GPT-4 has also been integrated into Khan Academy’s automated tutoring system.

Whereas GPT-3.5 accepted only text input, GPT-4 takes both text and image inputs, and OpenAI says it demonstrates ‘human-level’ performance on multiple academic benchmarks. On a simulated bar exam, for example, GPT-4 scored around the top 10% of test takers, while GPT-3.5 scored around the bottom 10%.

Over six months, OpenAI refined GPT-4 using lessons from an internal adversarial testing program and from ChatGPT. It also worked with Microsoft to design an Azure cloud-based supercomputer on which to train the model. As a result, OpenAI says, GPT-4 is more reliable, more creative, and better at handling nuanced instructions than its predecessor, GPT-3.5.

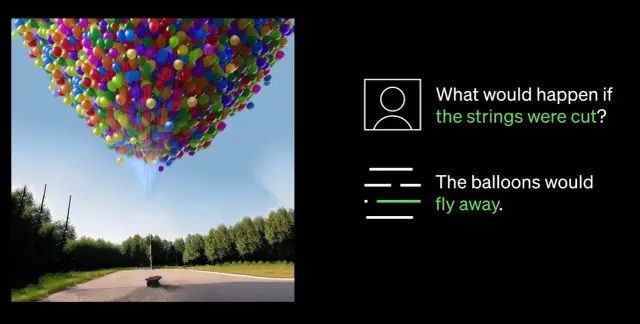

One of the most noteworthy advancements in GPT-4 is its ability to understand images as well as text. For instance, it can interpret and caption complex images, such as identifying a Lightning Cable adapter from a picture of a plugged-in iPhone. This image understanding capability is currently being tested with a single partner, Be My Eyes. Its new GPT-4-powered Virtual Volunteer feature answers users’ questions about images, offering analysis and practical recommendations based on what the image shows.

Another significant improvement in GPT-4 is its enhanced steerability. System messages, introduced with the new API, allow developers to guide the model by providing detailed instructions on style and task. These instructions establish the context and boundaries for subsequent interactions, so the model’s behavior stays within limits the developer sets.
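As a rough illustration of how a system message frames a conversation, the sketch below assembles a chat-style request body in Python without sending it anywhere. The article does not specify the request format; the `role`/`content` message fields and the `gpt-4` model name are assumptions here, and `build_chat_request` is a hypothetical helper, so check the current API documentation before relying on any of it.

```python
import json


def build_chat_request(system_instructions: str, user_message: str,
                       model: str = "gpt-4") -> dict:
    """Assemble a chat-style request body with the system message first.

    Hypothetical helper: it only builds a dictionary and makes no API call.
    """
    if not system_instructions.strip():
        raise ValueError("system_instructions must not be empty")
    return {
        "model": model,  # assumed model identifier
        "messages": [
            # The system message sets style and task boundaries up front.
            {"role": "system", "content": system_instructions},
            # The user message is the actual request to be answered.
            {"role": "user", "content": user_message},
        ],
    }


request = build_chat_request(
    "You are a Socratic tutor. Never give the answer directly; "
    "ask guiding questions instead.",
    "How do I solve 3x + 2 = 11?",
)
print(json.dumps(request, indent=2))
```

Placing the system message first means the developer's instructions are in the conversation before any user input.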

Despite these advances, OpenAI acknowledges that GPT-4 has limitations, including reasoning errors, factual mistakes, and a lack of knowledge of events after September 2021. GPT-4 may also inadvertently introduce vulnerabilities into the code it produces. Nevertheless, OpenAI says it has made GPT-4 less likely to engage with prohibited content or to respond inappropriately to sensitive requests.

As the AI field continues to evolve, OpenAI remains determined to further improve GPT-4. Companies seeking to bring AI into their workflows may consider exploring no-code platforms like AppMaster.io to integrate data-driven decision-making systems into their businesses.