Microsoft Semantic Kernel SDK Bridges the Gap between LLMs and Code

Microsoft's Semantic Kernel SDK simplifies the integration of large language models (LLMs) such as GPT-4 into code. It behaves somewhat like an operating system for LLM APIs: it manages complex prompts, orchestrates operations, and helps keep outputs focused, bridging the gap between language models and the developers who use them.

The process of integrating an AI model into your code can be quite challenging, as it involves crossing a boundary between two different ways of computing. Traditional programming methods are not sufficient for interacting with LLMs. What’s needed is a higher-level abstraction that translates between the different domains, providing a way to manage context and keep outputs grounded in the source data.

A few weeks ago, Microsoft released its first LLM wrapper, Prompt Engine. Building on that, the company has now unveiled a more powerful C# tool, Semantic Kernel, for working with the Azure OpenAI and OpenAI APIs. The open-source project is available on GitHub along with several sample applications.

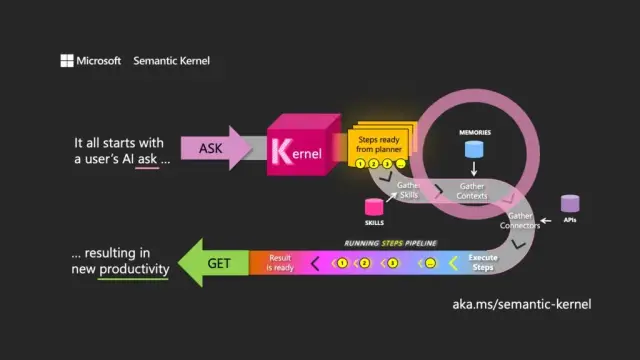

The name reflects an understanding of what an LLM is primarily for: working with meaning expressed in natural language. Semantic Kernel takes the user's initial request (the ask) and uses it to direct the model, orchestrating passes through associated resources to fulfill the request and return a response (the get).

Semantic Kernel functions like an operating system for LLM APIs, taking inputs, processing them by working with the language model, and returning outputs. The kernel's orchestration role is essential in managing not only prompts and their associated tokens but also memories, connectors to other information services, and predefined skills that mix prompts and conventional code.
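To make the ask-to-get flow concrete, here is a minimal sketch of an orchestrator in that spirit. It is not Semantic Kernel's real API: the `MiniKernel` class, its `remember` and `ask` methods, the word-overlap recall rule, and the word-count budget (a stand-in for a real token limit) are all hypothetical, and the model call is a stub you would replace with an Azure OpenAI or OpenAI client.

```python
from typing import Callable


class MiniKernel:
    """Hypothetical orchestrator: not Semantic Kernel's real API, just the ask -> get flow."""

    def __init__(self, complete: Callable[[str], str], max_prompt_words: int = 200):
        self.complete = complete              # model call (stubbed in the example below)
        self.max_prompt_words = max_prompt_words
        self.memories: list[str] = []         # naive memory: plain strings

    def remember(self, fact: str) -> None:
        self.memories.append(fact)

    def ask(self, request: str) -> str:
        # 1. Recall memories sharing at least one word with the request (a crude relevance test).
        words = set(request.lower().split())
        context = [m for m in self.memories if words & set(m.lower().split())]
        # 2. Assemble the prompt and keep only its last N words (stand-in for a token limit).
        prompt = "\n".join(context + [request])
        prompt = " ".join(prompt.split()[-self.max_prompt_words:])
        # 3. Send the prompt to the model and return its reply (the "get").
        return self.complete(prompt)


# Example with an echo "model" so the assembled prompt is visible.
kernel = MiniKernel(complete=lambda prompt: prompt)
kernel.remember("The office opens at nine")
kernel.remember("Parking is free")
print(kernel.ask("when does the office open"))
```

The echo stub makes it easy to see that only the memory related to the request reaches the prompt; a real orchestrator would use embeddings rather than shared words to decide relevance.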

Semantic Kernel manages context through the concept of memories, which can be backed by files or key-value storage. A third option, semantic memory, represents content as embeddings: vectors (arrays of numbers) that a model uses to represent the meaning of a text. Retrieving content by embedding similarity helps keep the model's output relevant and coherent and reduces the likelihood of random output.
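A minimal sketch of how a semantic memory can work, assuming an in-memory store and cosine similarity as the relevance measure. The `SemanticMemory` class and its `save`/`search` methods are hypothetical illustrations, not Semantic Kernel's memory API, and the toy three-dimensional vectors stand in for embeddings that a real application would obtain from an embedding model.

```python
import math
from dataclasses import dataclass, field


@dataclass
class SemanticMemory:
    """Hypothetical in-memory store; Semantic Kernel's own memory API differs."""
    records: dict[str, tuple[list[float], str]] = field(default_factory=dict)

    def save(self, key: str, embedding: list[float], text: str) -> None:
        # Store a text alongside its embedding vector.
        self.records[key] = (embedding, text)

    def search(self, query: list[float], limit: int = 1) -> list[tuple[str, float]]:
        # Rank stored texts by cosine similarity to the query vector, best first.
        scored = [(text, cosine_similarity(query, emb)) for emb, text in self.records.values()]
        scored.sort(key=lambda pair: pair[1], reverse=True)
        return scored[:limit]


def cosine_similarity(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


memory = SemanticMemory()
# Toy vectors stand in for real model-generated embeddings.
memory.save("billing", [0.9, 0.1, 0.0], "Invoices are sent on the first of each month.")
memory.save("leave", [0.0, 0.2, 0.9], "Leave requests go to your manager.")
print(memory.search([0.8, 0.2, 0.1]))  # the billing record ranks first
```

Cosine similarity compares vector direction rather than length, which is a common choice for comparing embeddings.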

By using embeddings, developers can split large inputs into blocks of text and include only the most relevant blocks, producing focused prompts that do not exhaust the model's token limit (for example, the base GPT-4 model has a context window of 8,192 tokens, shared between the prompt and the completion).
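The chunk-and-select idea can be sketched as follows, under two loud assumptions: tokens are approximated by whitespace-separated words (real tokenizers such as GPT-4's count differently), and a bag-of-words vector stands in for a real embedding model. All function names here are hypothetical.

```python
import math
from collections import Counter


def count_tokens(text: str) -> int:
    # Rough stand-in: real tokenizers split text differently.
    return len(text.split())


def chunk_text(text: str, max_tokens: int) -> list[str]:
    # Split text into consecutive blocks of at most max_tokens words.
    words = text.split()
    return [" ".join(words[i:i + max_tokens]) for i in range(0, len(words), max_tokens)]


def embed(text: str) -> Counter:
    # Toy bag-of-words "embedding"; a real app would call an embedding model.
    return Counter(word.lower().strip(".,") for word in text.split())


def similarity(a: Counter, b: Counter) -> float:
    dot = sum(a[w] * b[w] for w in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def build_prompt(question: str, document: str, budget: int, chunk_size: int = 50) -> str:
    # Add the chunks most similar to the question until the token budget is spent.
    q = embed(question)
    chunks = sorted(chunk_text(document, chunk_size),
                    key=lambda c: similarity(q, embed(c)), reverse=True)
    selected, used = [], count_tokens(question)
    for chunk in chunks:
        cost = count_tokens(chunk)
        if used + cost > budget:
            continue  # skip chunks that would overflow; a smaller one may still fit
        selected.append(chunk)
        used += cost
    return "\n\n".join(selected + [question])
```

Ranking before packing means that when the budget runs out, it is the least relevant blocks that get dropped.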

Connectors play an important role in Semantic Kernel, allowing integration of existing APIs with LLMs. For instance, a Microsoft Graph connector can send the output of a request in an email or build a description of relationships in the organization chart. Connectors also provide a form of role-based access control to ensure that outputs are tailored to the user, based on their data.
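As an illustration of a connector that tailors results to the user, here is a minimal sketch. The `Connector` protocol, `OrgChartConnector`, and the per-entry role requirement are hypothetical; this is a toy stand-in for a directory service, not the Microsoft Graph API or Semantic Kernel's connector interface.

```python
from dataclasses import dataclass
from typing import Protocol


@dataclass(frozen=True)
class User:
    name: str
    roles: frozenset[str]


class Connector(Protocol):
    # Hypothetical interface: fetch context from an external service for a given user.
    def fetch(self, user: User, query: str) -> list[str]: ...


@dataclass
class OrgChartConnector:
    """Toy directory connector; each entry is (text, role required to read it)."""
    entries: list[tuple[str, str]]

    def fetch(self, user: User, query: str) -> list[str]:
        # Return only entries the user's roles permit and that mention the query term.
        return [
            text for text, required_role in self.entries
            if required_role in user.roles and query.lower() in text.lower()
        ]


def gather_context(connector: Connector, user: User, query: str) -> str:
    # Prompt context built this way can only contain data the user may see.
    return "\n".join(connector.fetch(user, query))


org = OrgChartConnector(entries=[("Alice reports to Carol", "employee"),
                                 ("Alice salary band: 5", "hr")])
print(gather_context(org, User("Bob", frozenset({"employee"})), "alice"))
```

Filtering at the connector, before anything reaches the prompt, means the model never sees data the requesting user is not entitled to.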

The third main component of Semantic Kernel is skills, which are containers of functions that mix LLM prompts and conventional code, similar to Azure Functions. They can be used to chain together specialized prompts and create LLM-powered applications.
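A skill can be pictured as a named container holding both prompt-template functions and plain code. The sketch below assumes a hypothetical `Skill` class, uses Python's `string.Template` with a `$input` placeholder (not Semantic Kernel's template syntax), and replaces the model with a stub completion function.

```python
from string import Template
from typing import Callable

# Hypothetical completion backend; a real app would call Azure OpenAI or OpenAI here.
CompletionFn = Callable[[str], str]


class Skill:
    """A named container of functions: some are prompt templates, some are plain Python."""

    def __init__(self, name: str, complete: CompletionFn):
        self.name = name
        self._complete = complete
        self.functions: dict[str, Callable[[str], str]] = {}

    def add_native(self, name: str, fn: Callable[[str], str]) -> None:
        # Conventional code: registered as-is.
        self.functions[name] = fn

    def add_semantic(self, name: str, template: str) -> None:
        # LLM function: render the template with the input, then send it to the model.
        tmpl = Template(template)
        self.functions[name] = lambda text: self._complete(tmpl.substitute(input=text))

    def __getitem__(self, name: str) -> Callable[[str], str]:
        return self.functions[name]


def echo_llm(prompt: str) -> str:
    # Stub model: returns the prompt's last line in upper case, for demonstration only.
    return prompt.splitlines()[-1].upper()


writer = Skill("Writer", echo_llm)
writer.add_native("trim", str.strip)
writer.add_semantic("shout", "Rewrite the following text emphatically:\n$input")
print(writer["shout"](writer["trim"]("  ship it  ")))  # SHIP IT
```

Both kinds of function share the same string-in, string-out shape, which is what lets callers treat them interchangeably.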

The output of one function can be passed to another, so developers can build pipelines that mix native processing and LLM operations. This way, they can build flexible skills that can be selected and used as needed.
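Chaining can be sketched as simple left-to-right function composition. In this minimal example the `pipeline` helper and all step functions are hypothetical, and `fake_summarize` is a stub standing in for an LLM call.

```python
from typing import Callable

Step = Callable[[str], str]


def pipeline(*steps: Step) -> Step:
    # Compose steps left to right: each step's output becomes the next step's input.
    def run(text: str) -> str:
        for step in steps:
            text = step(text)
        return text
    return run


def fake_summarize(text: str) -> str:
    # Hypothetical stand-in for an LLM call: keeps only the first sentence.
    return text.split(".")[0] + "."


def normalize_whitespace(text: str) -> str:
    # Native step: collapse runs of whitespace.
    return " ".join(text.split())


def add_signature(text: str) -> str:
    # Native step: append a marker line.
    return text + "\n-- generated summary"


summarize_and_sign = pipeline(normalize_whitespace, fake_summarize, add_signature)
print(summarize_and_sign("The build   passed.  All tests are green."))
```

Because every step takes and returns a string, native and model-backed steps can be reordered or swapped without changing the pipeline code.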

Semantic Kernel is a powerful tool, but building effective applications with it still requires careful thought and planning. Used strategically alongside native code, the SDK can help developers harness LLMs more efficiently. To help developers get started, Microsoft provides a list of best practices learned from building LLM applications within its own business.

Microsoft positions the Semantic Kernel SDK as a key enabler for integrating large language models into a wide range of applications. It could also benefit tools such as AppMaster's no-code platform and website builders, giving their users more flexible and efficient options.