Rate limiting for public APIs: practical quotas and lockout flows

Rate limiting for public APIs that stops abuse without blocking real users: practical limits, per-key quotas, lockouts, and rollout tips.

What problem rate limiting is actually solving

Rate limiting for public APIs isn't about punishing users. It's a safety valve that keeps your service available when traffic gets weird, whether that "weird" is malicious or just a client bug.

"Abuse" often looks normal at first: a scraper that walks every endpoint to copy data, brute-force login attempts, token stuffing against auth routes, or a runaway client that retries the same request in a tight loop after a timeout. Sometimes nobody is attacking you at all. A mobile app update ships with a bad caching rule and suddenly every device polls your API every second.

The job is straightforward: protect uptime and control costs without blocking real users doing normal work. If your backend is metered (compute, database, email/SMS, AI calls), one noisy actor can turn into a real bill fast.

Rate limiting on its own also isn't enough. Without monitoring and clear error responses, you get silent failures, confused customers, and support tickets that sound like "your API is down" when it's really throttling.

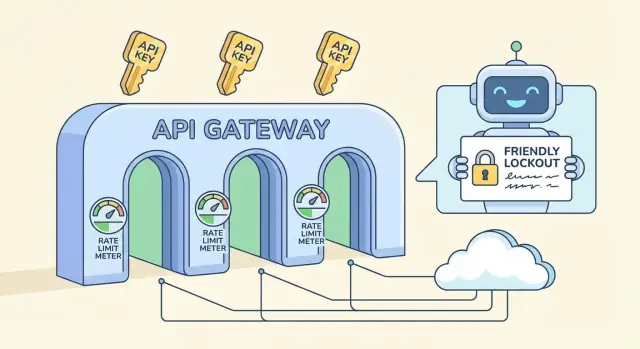

A complete guardrail usually has a few separate pieces:

- Rate limits: short-window caps (per second/minute) that stop spikes.

- Quotas: longer-window allowances (per day/month) that keep usage predictable.

- Lockouts: temporary blocks for clearly abusive patterns.

- Exceptions: allowlists for trusted integrations, internal tools, or VIP customers.

If you're building an API backend on a platform like AppMaster, these rules still matter. Even with clean, regenerated code, you'll want protective defaults so one bad client doesn't take your whole service down with it.

Key terms: rate limits, quotas, throttling, and lockouts

These terms get mixed together, but they solve different problems and feel different to users.

Rate limit, quota, and concurrency: what each one means

A rate limit is a speed limit: how many requests a client can make in a short window (per second, per minute). A quota is a budget: total usage over a longer period (per day, per month). A concurrency limit caps how many requests can be in progress at once, which is useful for expensive endpoints even when the request rate looks normal.
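To make the concurrency idea concrete, here is a minimal Python sketch that caps in-flight requests per key and rejects immediately when the cap is reached. The class name `ConcurrencyLimiter` and the limit of 2 are illustrative assumptions, and the counter lives in one process; a multi-server deployment would need shared state.

```python
import threading
from collections import defaultdict


class ConcurrencyLimiter:
    """Caps how many requests per key may be in progress at the same time."""

    def __init__(self, max_in_flight):
        self.max_in_flight = max_in_flight
        self._in_flight = defaultdict(int)  # key -> requests currently running
        self._lock = threading.Lock()

    def try_acquire(self, key):
        # Reject right away instead of queueing when the key is at its cap.
        with self._lock:
            if self._in_flight[key] >= self.max_in_flight:
                return False
            self._in_flight[key] += 1
            return True

    def release(self, key):
        with self._lock:
            if self._in_flight[key] > 0:
                self._in_flight[key] -= 1


limiter = ConcurrencyLimiter(max_in_flight=2)
if limiter.try_acquire("key_abc"):
    try:
        pass  # handle the expensive request here
    finally:
        limiter.release("key_abc")  # always free the slot, even on errors
```

Note that the request rate never appears here: a key sending one slow export per second can still be stopped once two are running.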

Where you attach the limit matters:

- Per IP: simple, but punishes shared networks (offices, schools, mobile carriers).

- Per user: great for logged-in apps, but depends on reliable identity.

- Per API key: common for public APIs, clear ownership and easy to message.

- Per endpoint: useful when one route is much heavier than others.

Throttling vs blocking, and where lockouts fit

Soft throttling slows the client down (delays, lower burst capacity) so they can recover without breaking workflows. Hard blocking rejects requests immediately, usually with HTTP 429.

A lockout is stronger than a normal 429. A 429 says "try again soon." A lockout says "stop until a condition is met," such as a cool-down period, a manual review, or a key reset. Reserve lockouts for clear abuse signals (credential stuffing, aggressive scraping, repeated invalid auth), not normal traffic spikes.

If you're building an API with a tool like AppMaster, treat these as separate controls: short-window limits for bursts, longer quotas for cost, and lockouts only for repeated bad behavior.

Picking practical limits without guessing wildly

Good limits start with one goal: protect the backend while letting normal users finish their work. You don't need perfect numbers on day one. You need a safe baseline and a way to adjust it.

A simple starting point is a per-API-key limit that matches the kind of API you run:

- Low traffic: 60-300 requests per minute per key

- Medium traffic: 600-1,500 requests per minute per key

- High traffic: 3,000-10,000 requests per minute per key

Then split limits by endpoint type. Reads are usually cheaper and can take higher limits. Writes change data, often trigger extra logic, and deserve tighter caps. A common pattern is something like 1,000/min for GET routes but 100-300/min for POST/PUT/DELETE.

Also identify expensive endpoints and treat them separately. Search, report generation, exports, file uploads, and anything that hits multiple tables or runs heavy business logic should have a smaller bucket even if the rest of the API is generous. If your backend uses visual workflows (for example, Business Process flows), each added step is real work that multiplies under load.
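One way to express that split is a small lookup that picks a per-minute budget from the HTTP method and route. The route prefixes and numbers below are hypothetical placeholders drawn from the ranges above, not recommendations for any particular API.

```python
# Hypothetical per-minute limits per API key, by endpoint class.
READ_LIMIT = 1000
WRITE_LIMIT = 200
EXPENSIVE_LIMIT = 30

# Hypothetical route prefixes known to be costly (search, reports, exports, uploads).
EXPENSIVE_PREFIXES = ("/search", "/reports", "/exports", "/uploads")

WRITE_METHODS = {"POST", "PUT", "PATCH", "DELETE"}


def per_minute_limit(method, path):
    """Return the per-key request budget per minute for a route."""
    if path.startswith(EXPENSIVE_PREFIXES):
        return EXPENSIVE_LIMIT  # heavy work gets its own, smaller bucket
    if method.upper() in WRITE_METHODS:
        return WRITE_LIMIT  # writes change data and often trigger extra logic
    return READ_LIMIT  # cheap reads can be generous
```

In practice the limiter would count against a bucket like `(api_key, endpoint_class)`, so burning the export budget doesn't also use up the read budget.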

Plan for bursts with two windows: a short window to absorb quick spikes, plus a longer window that keeps sustained usage in check. A common combo is 10 seconds plus 10 minutes. It helps real users who click fast, without giving scrapers unlimited speed.
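A minimal sketch of the two-window idea, assuming in-memory fixed-window counters. A request passes only if both the short and the long window still have room, and a rejected request doesn't consume budget in either. The injectable `clock` is there for testing; old window entries are never evicted in this sketch, and a real deployment would keep counters in a shared store with expiry.

```python
import time
from collections import defaultdict


class TwoWindowLimiter:
    """Fixed-window counters for a short burst window and a longer sustained window."""

    def __init__(self, windows, clock=time.time):
        # windows: list of (window_seconds, max_requests), e.g. [(10, 20), (600, 300)]
        self.windows = windows
        self.clock = clock
        self.counts = defaultdict(int)  # (key, window_seconds, window_start) -> count

    def allow(self, key):
        now = self.clock()
        slots = []
        for seconds, max_requests in self.windows:
            start = int(now // seconds) * seconds
            slot = (key, seconds, start)
            if self.counts[slot] >= max_requests:
                return False  # one full window is enough to reject
            slots.append(slot)
        for slot in slots:
            self.counts[slot] += 1  # only count requests that were allowed
        return True


limiter = TwoWindowLimiter([(10, 20), (600, 300)])
print(limiter.allow("key_abc"))
```

Fixed windows are the simplest option but can admit up to twice the limit around a window boundary; sliding windows or token buckets smooth that out at the cost of a bit more state.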

Finally, decide what happens when the client exceeds the limit. Most public APIs return HTTP 429 with a clear retry time. If the work is safe to delay (like an export), consider queueing it instead of hard-blocking so real users still get results.

Designing per-key quotas that feel fair

Per-key quotas are usually the fairest approach because they match how customers actually use your service: one account, one API key, clear responsibility. IP-based limits are still useful, but they often punish innocent users.

Shared IPs are the big reason. A whole office may exit through one public IP, and mobile carriers can put thousands of devices behind a small pool of IPs. If you rely on per-IP caps, one noisy user can slow down everyone else. A per-key quota avoids that, and you can keep a light per-IP limit as a backstop for obvious floods.

To make quotas feel fair, tie them to customer tiers without trapping new users. A free or trial key should work for real testing, just not at a scale that hurts you. A simple pattern is generous bursts, a modest sustained rate, and a daily quota that matches the plan.

A per-key policy that stays predictable:

- Allow short bursts (page loads, batch imports), then enforce a steady rate.

- Add a daily cap per key to limit scraping and runaway loops.

- Increase limits by plan, but keep the same structure so behavior stays consistent.

- Use a lower cap for freshly created keys until they build a good history.

- Keep a small per-IP limit to catch obvious floods and misconfigured clients.
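The policy above can be written down as data so every plan shares one structure. This sketch assumes three hypothetical plans and a 7-day "new key" period during which limits are halved; the plan names, numbers, and halving rule are all placeholders.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class KeyPolicy:
    burst: int       # short-window allowance (for example, per 10 seconds)
    per_minute: int  # steady rate
    per_day: int     # daily cap against scraping and runaway loops


# Hypothetical plans: same structure, different numbers.
PLANS = {
    "free": KeyPolicy(burst=20, per_minute=60, per_day=5_000),
    "pro": KeyPolicy(burst=100, per_minute=600, per_day=100_000),
    "enterprise": KeyPolicy(burst=500, per_minute=3_000, per_day=2_000_000),
}

NEW_KEY_DAYS = 7  # assumption: younger keys get reduced limits


def effective_policy(plan, key_age_days):
    base = PLANS[plan]
    if key_age_days >= NEW_KEY_DAYS:
        return base
    # Freshly created keys start at half their plan's limits until they build history.
    return KeyPolicy(
        burst=max(1, base.burst // 2),
        per_minute=max(1, base.per_minute // 2),
        per_day=max(1, base.per_day // 2),
    )


print(effective_policy("pro", key_age_days=1))
# KeyPolicy(burst=50, per_minute=300, per_day=50000)
```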

To discourage key sharing and automated signups, you don't need heavy surveillance. Start with simple checks like unusual geo changes, too many distinct IPs per hour for one key, or many new keys created from the same source. Flag and slow down first; lock out only after repeated signals.
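As a rough sketch of one of those signals, the tracker below counts distinct client IPs per key over a sliding hour and reports when a key crosses a threshold. The threshold of 50 and the class name are assumptions to tune against your own traffic; the result is meant to trigger "flag and slow down", not an immediate lockout.

```python
import time
from collections import defaultdict


class DistinctIpTracker:
    """Flags API keys seen from unusually many distinct IPs within a sliding window."""

    def __init__(self, max_distinct_ips=50, window_seconds=3600, clock=time.time):
        self.max_distinct_ips = max_distinct_ips
        self.window_seconds = window_seconds
        self.clock = clock
        self.last_seen = defaultdict(dict)  # key -> {ip: last seen timestamp}

    def record(self, key, ip):
        """Record a request and return True if the key looks shared or automated."""
        now = self.clock()
        ips = self.last_seen[key]
        ips[ip] = now
        cutoff = now - self.window_seconds
        # Forget IPs that haven't been seen for this key within the window.
        for old_ip in [i for i, seen in ips.items() if seen <= cutoff]:
            del ips[old_ip]
        return len(ips) > self.max_distinct_ips
```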

Anonymous traffic is where stricter caps make sense. If you offer trial keys, rate-limit them tightly and require a basic verification step before raising limits. If your API powers a public form, consider a separate anonymous endpoint with its own quota so the rest of your backend stays protected.

If you build your API with a platform like AppMaster, per-key logic is easier to keep consistent because auth, business rules, and response handling live in one place.

Client-friendly responses and headers (so users can recover)

Rate limiting only works long term if clients can understand what happened and what to do next. Aim for responses that are boringly predictable: same status code, same fields, same meaning across endpoints.

When a client hits a limit, return HTTP 429 (Too Many Requests) with a clear message and a concrete wait time. The fastest win is adding Retry-After, because even simple clients can pause correctly.

A small set of headers makes limits self-explanatory. Keep names consistent and include them on both successful responses (so clients can pace themselves) and 429 responses (so clients can recover):

- Retry-After: seconds to wait before retrying

- X-RateLimit-Limit: current allowed requests per window

- X-RateLimit-Remaining: requests left in the current window

- X-RateLimit-Reset: when the window resets (epoch seconds or ISO time)

- X-RateLimit-Policy: short text like "60 requests per 60s"

Make the error body as structured as your success responses. One common pattern is an error object with a stable code, a human-friendly message, and recovery hints.

```json
{
  "error": {
    "code": "rate_limit_exceeded",
    "message": "Too many requests. Please retry after 12 seconds.",
    "retry_after_seconds": 12,
    "limit": 60,
    "remaining": 0,
    "reset_at": "2026-01-25T12:34:56Z"
  }
}
```
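Generating the headers and the error body from one place keeps them from drifting apart. This is a minimal sketch using only the Python standard library; the function names and the way window state is passed in are assumptions, and the `X-RateLimit-*` names follow the convention listed above rather than a formal standard.

```python
import json
import math
from datetime import datetime, timezone


def rate_limit_headers(limit, remaining, reset_epoch, window_seconds):
    """Headers to send on every response so clients can pace themselves."""
    return {
        "X-RateLimit-Limit": str(limit),
        "X-RateLimit-Remaining": str(max(0, remaining)),
        "X-RateLimit-Reset": str(int(reset_epoch)),  # epoch seconds
        "X-RateLimit-Policy": f"{limit} requests per {window_seconds}s",
    }


def too_many_requests(limit, reset_epoch, window_seconds, now_epoch):
    """Build headers and a JSON body for an HTTP 429 response."""
    retry_after = max(1, math.ceil(reset_epoch - now_epoch))
    headers = rate_limit_headers(limit, 0, reset_epoch, window_seconds)
    headers["Retry-After"] = str(retry_after)
    headers["Content-Type"] = "application/json"
    reset_at = datetime.fromtimestamp(reset_epoch, tz=timezone.utc)
    body = {
        "error": {
            "code": "rate_limit_exceeded",
            "message": f"Too many requests. Please retry after {retry_after} seconds.",
            "retry_after_seconds": retry_after,
            "limit": limit,
            "remaining": 0,
            "reset_at": reset_at.strftime("%Y-%m-%dT%H:%M:%SZ"),
        }
    }
    return headers, json.dumps(body)
```

The `max(1, ...)` guard avoids telling a client to retry after 0 seconds when the window is about to reset, which tends to produce an immediate second 429.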

Tell clients how to back off when they see 429s. Exponential backoff is a good default: wait 1s, then 2s, then 4s, and cap it (for example, at 30-60 seconds). Also be explicit about when to stop retrying.
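Here is a minimal client-side sketch of that advice. It assumes a caller-supplied `send()` that returns a status code and an optional Retry-After value in seconds (a hypothetical shape, not any specific HTTP library). It honors the server's Retry-After when present, otherwise uses exponential backoff with a little jitter and a cap, and stops after a fixed number of attempts.

```python
import random
import time


def call_with_backoff(send, max_attempts=6, base_delay=1.0, max_delay=60.0, sleep=time.sleep):
    """Retry on 429 using Retry-After when present, else capped exponential backoff.

    `send` is a hypothetical callable returning (status_code, retry_after_seconds_or_None).
    Returns the last status code so the caller can decide what to do after giving up.
    """
    status = None
    for attempt in range(max_attempts):
        status, retry_after = send()
        if status != 429:
            return status
        if attempt == max_attempts - 1:
            break  # stop retrying and surface the 429 to the caller
        if retry_after is not None:
            delay = float(retry_after)  # the server knows when the window resets
        else:
            # 1s, 2s, 4s, ... plus up to base_delay of jitter, capped at max_delay.
            delay = min(base_delay * (2 ** attempt) + random.uniform(0, base_delay), max_delay)
        sleep(delay)
    return status
```

The jitter matters when many clients hit the same limit at once: without it, they all retry on the same schedule and collide again.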

Avoid surprises near quotas. When a key is close to a cap (say 80-90% used), include a warning field or header so clients can slow down before they start failing. This matters even more when one script can hit multiple routes quickly and burn through budget faster than expected.
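A small sketch of that warning, assuming hypothetical header names (`X-Quota-Limit`, `X-Quota-Remaining`, `X-Quota-Warning`) and an 80% threshold. Whatever names you choose, document them and keep them stable.

```python
def quota_headers(used, quota, warn_ratio=0.8):
    """Return quota headers, adding a warning once usage crosses warn_ratio."""
    headers = {
        "X-Quota-Limit": str(quota),
        "X-Quota-Remaining": str(max(0, quota - used)),
    }
    if quota > 0 and used / quota >= warn_ratio:
        percent = int(100 * used / quota)
        headers["X-Quota-Warning"] = f"{percent}% of daily quota used"
    return headers


print(quota_headers(850, 1000)["X-Quota-Warning"])  # 85% of daily quota used
```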

Step by step: a simple rollout plan for limits and quotas

A rollout works best when you treat limits as a product behavior, not a one-off firewall rule. The goal is the same throughout: protect the backend while keeping normal customers moving.

Start with a quick inventory. List every endpoint, then mark each by cost (CPU, database work, third-party calls) and risk (login, password reset, search, file upload). That prevents you from applying one blunt limit everywhere.

A rollout order that usually avoids surprises:

- Tag endpoints by cost and risk, and decide which ones need stricter rules (login, bulk export).

- Pick identity keys in priority order: API key first, then user id, and IP only as a fallback.

- Add short-window limits (per 10 seconds or per minute) to stop bursts and scripts.

- Add longer-window quotas (per hour or per day) to cap sustained usage.

- Add allowlists for trusted systems and internal tools so ops work doesn't get blocked.
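The identity order and the allowlist can live in one small function, so every limiter counts against the strongest identity available. The `key:`/`user:`/`ip:` prefixes and the allowlisted key names are illustrative assumptions.

```python
from typing import Optional

# Hypothetical allowlist of API keys for monitors and trusted internal tools.
ALLOWLISTED_KEYS = {"key_internal_monitor", "key_ops_dashboard"}


def limiter_identity(api_key: Optional[str], user_id: Optional[str], ip: str) -> Optional[str]:
    """Return the bucket name to count against, or None if the caller is allowlisted."""
    if api_key:
        if api_key in ALLOWLISTED_KEYS:
            return None  # trusted systems skip rate limiting
        return f"key:{api_key}"
    if user_id:
        return f"user:{user_id}"
    return f"ip:{ip}"  # fallback only; shared networks make IP a weak identity
```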

Keep the first release conservative. It's easier to loosen later than to unblock angry users.

Monitor and tune, then version your policy. Track how many requests hit limits, which endpoints trigger it, and how many unique keys are affected. When you change numbers, treat it like an API change: document it, roll it out gradually, and keep old and new rules separated so you can roll back fast.

If you build your API with AppMaster, plan these rules alongside your endpoints and business logic so limits match the real cost of each workflow.

Lockout workflows that stop abuse without drama

Lockouts are the seatbelt. They should stop obvious abuse quickly, but still give normal users a clear path to recover when something goes wrong.

A calm approach is progressive penalties. Assume the client might be misconfigured, not malicious, and escalate only if the same pattern repeats.

A simple progressive ladder

Use a small set of steps that are easy to explain and easy to implement:

- Warn: tell the client they're approaching limits, and when it resets.

- Slow: add short delays or tighter per-second limits for that key.

- Temporary lockout: block for minutes (not hours) with an exact unlock time.

- Longer lockout: only after repeated bursts across multiple windows.

- Manual review: for patterns that look intentional or keep coming back.

Choosing what to lock matters. Per API key is usually fairest because it targets the caller, not everyone behind a shared network. Per account helps when users rotate keys. Per IP can help with anonymous traffic, but it causes false positives for NATs, offices, and mobile carriers. When abuse is serious, combine signals (for example, lock the key and require extra checks for that IP), but keep the blast radius small.

Make lockouts time-based with simple rules: "blocked until 14:05 UTC" and "resets after 30 minutes of good behavior." Avoid permanent bans for automated systems. A buggy client can loop and burn through limits fast, so design penalties to decay over time. A sustained low-rate period should reduce the penalty level.
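A minimal sketch of the ladder with decay, assuming one penalty level per API key, hypothetical lockout durations of 5 and 30 minutes, and a rule that each quiet 30-minute period (counted from the later of the last escalation or the end of a lockout) drops the key back one level. Manual review never decays on its own. The `clock` parameter exists so the behavior can be tested without waiting.

```python
import time
from dataclasses import dataclass

# Hypothetical ladder; the index is the penalty level.
LADDER = ["allow", "warn", "slow", "lockout", "long_lockout", "manual_review"]
LOCKOUT_SECONDS = {"lockout": 5 * 60, "long_lockout": 30 * 60}
DECAY_SECONDS = 30 * 60  # each quiet half hour lowers the level by one


@dataclass
class PenaltyState:
    level: int = 0
    last_change: float = 0.0
    locked_until: float = 0.0


class PenaltyLadder:
    def __init__(self, clock=time.time):
        self.clock = clock
        self.states = {}

    def _decayed(self, key, now):
        state = self.states.setdefault(key, PenaltyState(last_change=now))
        if 0 < state.level < len(LADDER) - 1:  # manual review needs a human
            quiet_since = max(state.last_change, state.locked_until)
            periods = int((now - quiet_since) // DECAY_SECONDS)
            if periods > 0:
                state.level = max(0, state.level - periods)
                state.last_change = quiet_since + periods * DECAY_SECONDS
        return state

    def record_violation(self, key):
        """Escalate one step after the key exceeds its limits again."""
        now = self.clock()
        state = self._decayed(key, now)
        state.level = min(state.level + 1, len(LADDER) - 1)
        state.last_change = now
        step = LADDER[state.level]
        if step in LOCKOUT_SECONDS:
            state.locked_until = now + LOCKOUT_SECONDS[step]  # exact unlock time
        return step

    def decision(self, key):
        """Return (action, unlock_time_or_None) for the next request from this key."""
        now = self.clock()
        state = self._decayed(key, now)
        if now < state.locked_until:
            return "block", state.locked_until
        if LADDER[state.level] == "manual_review":
            return "block", None  # stays blocked until someone clears it
        if LADDER[state.level] == "slow":
            return "slow", None
        return "allow", None  # "warn" is communicated via headers, not blocking
```

After a lockout expires the key keeps its level, so a repeat offense escalates further, while a buggy client that settles down drifts back to normal without anyone intervening.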

If you're building your API in AppMaster, this ladder maps well to stored counters plus a Business Process that decides allow, slow, or block and writes the unlock time for the key.

For repeat offenders, keep a manual review path. Don't argue with users. Ask for request IDs, timestamps, and the API key name, then decide based on evidence.

Common mistakes that cause false positives

False positives are when your defenses block normal users. They usually happen when the rules are too simple for the way people actually use your API.

A classic mistake is one global limit for everything. If you treat a cheap read endpoint and an expensive export the same, you either overprotect the cheap one (annoying) or underprotect the expensive one (dangerous). Split limits by endpoint cost and make the heavy paths stricter.

IP-only limiting is another common trap. Many real users share one public IP (offices, schools, mobile carriers, cloud NAT). One heavy user can get everyone blocked, and it looks like random outages. Prefer per-API-key limits first, then use IP as a secondary signal for obvious abuse.

Failures can also create false positives. If your limiter store is down, "fail closed" can take your whole API down. "Fail open" can invite a spike that takes your backend down anyway. Pick a clear fallback: keep a small emergency cap at the edge, and degrade gracefully on non-critical endpoints.
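One way to implement that fallback, sketched under assumptions: the shared limiter is reached through a hypothetical `shared_allow(key)` callable that raises `ConnectionError` when the store is unreachable (substitute whatever errors your store client actually raises). While it is down, each server applies a small in-process cap per key, so the API is neither fully open nor fully closed.

```python
import time
from collections import defaultdict


class FallbackLimiter:
    """Uses the shared limiter, but degrades to a small per-process cap if it fails."""

    def __init__(self, shared_allow, emergency_per_minute=30, clock=time.time):
        self.shared_allow = shared_allow  # hypothetical callable: key -> bool, may raise
        self.emergency_per_minute = emergency_per_minute
        self.clock = clock
        self.local_counts = defaultdict(int)  # (key, minute) -> count

    def allow(self, key):
        try:
            return self.shared_allow(key)
        except ConnectionError:
            # Limiter store is down: apply a conservative local cap per key.
            slot = (key, int(self.clock() // 60))
            if self.local_counts[slot] >= self.emergency_per_minute:
                return False
            self.local_counts[slot] += 1
            return True
```

Because the emergency cap is per process, the effective limit during an outage is roughly the cap multiplied by the number of servers; size it with that in mind.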

Client handling matters more than most teams expect. If you return a generic 429 without a clear message, users retry harder and trigger more blocks. Always send Retry-After, and keep the error text specific ("Too many requests for this key. Try again in 30 seconds.").

The most avoidable issue is secrecy. Hidden limits feel like bugs when customers hit production load for the first time. Share a simple policy and keep it stable.

Quick checklist for avoiding false positives:

- Separate limits for expensive vs cheap endpoints

- Primary limiting by API key, not only by IP

- Defined behavior when the limiter is unavailable

- Clear 429 responses with Retry-After

- Limits documented and communicated before enforcement

If you build your API with a tool like AppMaster, this often means setting different caps per endpoint and returning consistent error payloads so clients can back off without guessing.

Monitoring and alerting that actually helps

Rate limiting only works if you can see what's happening in real time. The goal isn't to catch every spike. It's to spot patterns that turn into outages or angry users.

Start with a small set of signals that explain both volume and intent:

- Requests per minute (overall and per API key)

- 429 rate (throttled requests) and 5xx rate (backend pain)

- Repeated 401/403 bursts (bad keys, credential stuffing, misconfigured clients)

- Top endpoints by volume and by cost (slow queries, heavy exports)

- New or unexpected endpoints showing up in the top 10

To separate "bad traffic" from "we shipped something," add context to dashboards: deploy time, feature flag changes, marketing sends. If traffic jumps right after a release and the 429/5xx mix stays healthy, it's usually growth, not abuse. If the jump is concentrated in one key, one IP range, or one expensive endpoint, treat it as suspicious.

Alerts should be boring. Use thresholds plus cooldowns so you don't get paged every minute for the same event:

- 429 rate above X% for 10 minutes, notify once per hour

- 5xx above Y% for 5 minutes, page immediately

- Single key exceeds quota by Z% for 15 minutes, open an investigation

- 401/403 bursts above N/min, flag for possible abuse
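The "threshold plus cooldown" pattern is simple to express in code. This sketch assumes the condition (for example, "429 rate above X% for 10 minutes") has already been evaluated elsewhere and only decides whether to send a notification; the alert names and the one-hour cooldown are placeholders.

```python
import time


class AlertGate:
    """Suppresses repeat notifications for the same alert within a cooldown period."""

    def __init__(self, cooldown_seconds, clock=time.time):
        self.cooldown_seconds = cooldown_seconds
        self.clock = clock
        self.last_sent = {}  # alert name -> timestamp of last notification

    def should_notify(self, alert_name, condition_met):
        if not condition_met:
            return False
        now = self.clock()
        last = self.last_sent.get(alert_name)
        if last is not None and now - last < self.cooldown_seconds:
            return False  # already notified recently for this alert
        self.last_sent[alert_name] = now
        return True
```

A page-immediately alert like the 5xx rule can use the same gate with a short cooldown, so the difference between "notify" and "page" stays in configuration rather than code.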

When an alert fires, keep a short incident note: what changed, what you saw (top keys/endpoints), and what you adjusted (limits, caches, temporary blocks). Over time, those notes become your real playbook.

Example: you launch a new search endpoint and traffic doubles. If most calls hit that endpoint across many keys, raise the per-key quota slightly and optimize the endpoint. If one key hits export nonstop and drives latency up, cap that endpoint separately and contact the owner.

Quick checklist: sanity checks before and after launch

A good setup is boring when it works. This checklist catches the issues that usually create false positives or leave obvious gaps.

Before you release a new endpoint

Run these checks in staging and again right after launch:

- Identity: confirm the limiter keys off the right thing (API key first, then user or IP as a fallback), and that rotated keys don't inherit old penalties.

- Limits: set a default per-key quota, then adjust based on endpoint cost (cheap read vs expensive write) and expected bursts.

- Responses: return clear status and recovery info (retry timing, remaining budget, stable error code).

- Logs: record who was limited (key/user/IP), which route, what rule fired, and a request ID for support.

- Bypass: keep an emergency allowlist for your monitors and trusted integrations.

If you're building on AppMaster, treat each new API endpoint as a cost-tier decision: a simple lookup can be generous, while anything that triggers heavy business logic should start stricter.

When an incident happens (abuse or sudden traffic)

Protect the backend while letting real users recover:

- Raise caps temporarily only for the least risky routes (often reads) and watch error rates.

- Add a short allowlist for known good customers while you investigate.

- Tighten specific risky routes instead of lowering global limits.

- Turn on stronger identity (require API keys, reduce reliance on IP) to avoid blocking shared networks.

- Capture samples: top keys, top IPs, user agents, and the exact payload patterns.

Before increasing limits for a customer, check their normal request pattern, endpoint mix, and whether they can batch or add backoff. Also confirm they're not sharing one key across many apps.

Monthly, review: top limited endpoints, percent of traffic hitting limits, new high-cost routes, and whether your quotas still match real usage.

Example scenario: protecting a real public API without breaking users

Imagine you run a public API used by two apps: a customer portal (high volume, steady traffic) and an internal admin tool (low volume, but powerful actions). Both use API keys, and the portal also has a login endpoint for end users.

One afternoon, a partner ships a buggy integration. It starts retrying failed requests in a tight loop, sending 200 requests per second from a single API key. Without guardrails, that one key can crowd out everyone else.

Per-key limits contain the blast radius. The buggy key hits its per-minute cap, gets a 429 response, and the rest of your customers keep working. You might also have a separate, lower limit for expensive endpoints (like exports) so even "allowed" traffic can't overload the database.

At the same time, a brute-force login attempt starts hammering the auth endpoint. Instead of blocking the whole IP range (which can hit real users behind NAT), you slow it down and then lock it out based on behavior: too many failed attempts per account plus per IP over a short window. The attacker gets progressively longer waits, then a temporary lock.
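A minimal sketch of that behavior-based login lockout. It assumes in-memory failure timestamps, hypothetical thresholds (5 failures for one account from one IP, or 20 failures from one IP across any accounts, within 15 minutes), and a fixed 15-minute lock; progressively longer waits could be layered on top. Locking the account-plus-IP pair, or a single IP rather than a range, keeps other users behind the same NAT working.

```python
import math
import time
from collections import defaultdict, deque

WINDOW_SECONDS = 15 * 60
MAX_FAILURES_PER_ACCOUNT_IP = 5  # assumption: a user mistyping a password
MAX_FAILURES_PER_IP = 20         # assumption: one IP trying many accounts
LOCK_SECONDS = 15 * 60


class LoginGuard:
    def __init__(self, clock=time.time):
        self.clock = clock
        self.failures = defaultdict(deque)  # bucket -> failure timestamps
        self.locked_until = {}              # bucket -> unlock time

    def _recent(self, bucket, now):
        times = self.failures[bucket]
        while times and times[0] <= now - WINDOW_SECONDS:
            times.popleft()  # drop failures outside the window
        return len(times)

    def retry_after(self, account, ip):
        """Seconds until this login attempt may proceed (0 means allowed now)."""
        now = self.clock()
        buckets = [("acct_ip", account, ip), ("ip", ip)]
        until = max(self.locked_until.get(b, 0.0) for b in buckets)
        return math.ceil(until - now) if until > now else 0

    def record_failure(self, account, ip):
        now = self.clock()
        checks = [
            (("acct_ip", account, ip), MAX_FAILURES_PER_ACCOUNT_IP),
            (("ip", ip), MAX_FAILURES_PER_IP),
        ]
        for bucket, limit in checks:
            self.failures[bucket].append(now)
            if self._recent(bucket, now) >= limit:
                self.locked_until[bucket] = now + LOCK_SECONDS

    def record_success(self, account, ip):
        # A successful login clears that user's own failures, not the whole IP's.
        self.failures.pop(("acct_ip", account, ip), None)
```

The value from `retry_after()` maps directly onto the Retry-After header, which gives the real user a concrete time to try again.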

A real customer who mistyped their password a few times can recover because your responses are clear and predictable:

- 429 with Retry-After so the client knows when to try again

- A short lockout window (for example, 10-15 minutes), not a permanent ban

- Consistent error messages that don't leak whether an account exists

To confirm the fix, you watch a few metrics:

- 429 rate by API key and endpoint

- Auth failure rate and lockout counts

- P95 latency and database CPU during the incident

- Number of unique keys affected (should be small)

This is what good rate limiting looks like: it shields your backend without punishing normal users.

Next steps: put a small policy in place and iterate

You don't need a perfect model on day one. Start with a small, clear policy and improve it as you learn how real users behave.

A solid first version usually has three parts:

- A per-key baseline (requests per minute) that covers most endpoints

- Tighter caps on expensive endpoints (search, exports, file uploads, complex reports)

- Clear 429 responses with a short message that tells clients what to do next

Add lockouts only where abuse risk is high and intent is easy to infer. Signup, login, password reset, and token creation are typical candidates. Keep lockouts short at first (minutes, not days), and prefer progressive friction: slow down, then temporarily block, then require a stronger check.

Write the policy down in plain language so support can explain it without engineering help. Include what is limited (per API key, per IP, per account), the reset window, and how a customer can recover.

If you're implementing this while building a new backend, AppMaster can be a practical fit: you can create APIs, define business workflows (including counters and lockout decisions) visually, then deploy to cloud providers or export the generated source code when you need full control.