Keep exported source code in sync with clear governance rules

Learn how to keep exported source code in sync with a regenerating platform using clear ownership, safe extension points, reviews, and quick checks.

What problem you are solving (in plain terms)

When a platform regenerates your app, it can rewrite large parts of the codebase. That keeps the code clean, but it also means any manual edits inside generated files can disappear the next time you click regenerate or publish a new build.

The real goal isn't "never export code." It's keeping the visual model as the source of truth so changes stay consistent and repeatable. In AppMaster, that model includes your data schema, business processes, API endpoints, and UI screens. When the model stays correct, regeneration becomes a safe, routine action instead of a stressful event.

"Exported source code" usually means taking the generated Go backend, Vue3 web app, and Kotlin/SwiftUI mobile apps and putting them under your control. Teams export for practical reasons: security reviews, self-hosting, custom infrastructure rules, special integrations, or long-term maintenance outside the platform.

The trouble starts when the exported repo begins living its own life. Someone fixes a bug directly in generated files, adds a feature "quickly" in code, or tweaks the database layer by hand. Later, the model changes (a field rename, a new endpoint, a modified business process), the app is regenerated, and now you have drift, painful merges, or lost work.

Governance is mostly process, not tooling. It answers a few basic questions:

- Where are manual changes allowed, and where are they forbidden?

- Who can approve changes to the visual model vs the exported repo?

- How do you record why a change was made in code instead of in the model?

- What happens when regeneration conflicts with a custom extension?

When those rules are clear, regeneration stops being a risk. It becomes a dependable way to ship updates while protecting the small set of hand-written parts that truly need to exist.

Pick the source of truth and stick to it

To keep exported source code in sync with a regenerating platform, you need one clear default: where do changes live?

For platforms like AppMaster, the safest default is simple: the visual model is the source of truth. The things that define what the product does day to day should live in the model, not in the exported repository. That usually includes your data model, business logic, API endpoints, and the main UI flows.

Exported code is still useful, but treat it like a build artifact plus a small, explicitly allowed zone for work the model can't express well.

A policy most teams can follow looks like this:

- If it changes product behavior, it belongs in the visual model.

- If it's a connector to something external, it can live outside the model as a thin adapter.

- If it's a shared utility (logging tweaks, a small parsing helper), it can live outside the model as a library.

- If it's customer-specific or environment-specific configuration, keep it outside the model and inject it at deploy time.

- If it's a performance or security fix, first check whether it can be expressed in the model. If not, document the exception.

Keep the allowed zone small on purpose. The bigger it gets, the more likely regeneration will overwrite changes or create hidden drift.

Also decide who can approve exceptions. For example, only a tech lead can approve code changes that affect authentication, data validation, or core workflows. Add a simple rule for when exceptions expire, like "review after the next regeneration cycle," so temporary fixes don't quietly become permanent forks.
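One way to make the expiry rule concrete is to record each exception with an approver and a review point. The sketch below is illustrative only: the CodeException record, its field names, and the idea of counting regeneration cycles as integers are assumptions, not part of any platform.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CodeException:
    """A hand-written change that lives outside the visual model (hypothetical record)."""
    summary: str
    approved_by: str
    granted_at_cycle: int          # regeneration cycle in which it was approved
    review_after_cycles: int = 1   # "review after the next regeneration cycle"


def exceptions_due_for_review(exceptions, current_cycle):
    """Return the exceptions whose review point has been reached."""
    return [
        e for e in exceptions
        if current_cycle >= e.granted_at_cycle + e.review_after_cycles
    ]
```

Running this at the start of each regeneration gives the approver a short list to either renew, move into the model, or delete.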

When exporting code makes sense (and when it does not)

Exporting source code can be the right move, but only if you're clear on why you're doing it and what you expect to change afterward. With a regenerating platform like AppMaster, the safest default is to treat the visual model as the source of truth and the export as something you can inspect, test, and deploy.

Exporting usually makes sense when teams need stronger auditability (being able to show what runs in production), self-hosting (your own cloud or on-prem rules), or special integrations that aren't covered by built-in modules. It can also help when your security team requires code scanning, or when you want a vendor-independent exit plan.

The key question is whether you need code access or code edits.

- Code access only (read-only export): audits, security review, disaster recovery, portability, explaining behavior to stakeholders.

- Code edits (editable export): adding low-level capabilities that must live in code, patching a third-party library, meeting a strict runtime constraint the model can't represent.

Read-only export is easier because you can regenerate often without worrying about overwriting hand edits.

Editable export is where teams get into trouble. Long-lived manual changes are a governance decision, not a developer preference. If you can't answer "where does this change live in a year?", you'll end up with drift: the model says one thing, production code says another.

A rule that holds up well: if the change is business logic, data shape, UI flow, or API behavior, keep it in the model. If it's a true platform gap, allow code edits only with explicit ownership, a written extension pattern, and a clear plan for how regeneration will be handled.

Design safe extension points so regen does not break you

Never treat generated files as a place to "just add one small change." Regeneration will win sooner or later.

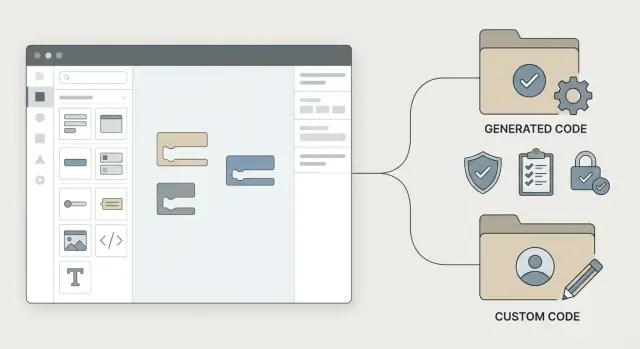

Start by drawing a hard line between what is owned by the visual model and what is owned by your team. With AppMaster, the model can regenerate backend (Go), web (Vue3), and mobile (Kotlin/SwiftUI) code, so assume anything in the generated area can be replaced at any time.

Create boundaries that are hard to cross

Make the boundary obvious in your repo and in your habits. People do the wrong thing when the right thing is inconvenient.

A few guardrails that work in practice:

- Put generated output in a dedicated folder that's treated as read-only.

- Put custom code in a separate folder with its own build entry points.

- Require custom code to call generated code only through public interfaces (not internal files).

- Add a CI check that fails if "do not edit" files changed.

- Add a header comment in generated files that clearly says they will be overwritten.

That last point matters. A clear "DO NOT EDIT: regenerated from model" message prevents well-meaning fixes that turn into future breakage.
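The CI guardrail can be very small. The following is a minimal sketch, assuming the generated output lives in a top-level folder named generated/ and that the list of changed paths comes from your version control system (for Git, the output of git diff --name-only for the pull request). The function names are illustrative.

```python
from pathlib import PurePosixPath

GENERATED_DIRS = ("generated",)  # assumed folder name; adjust to your repo


def edited_generated_files(changed_paths, generated_dirs=GENERATED_DIRS):
    """Return the changed paths that live under a generated top-level folder."""
    offenders = []
    for raw in changed_paths:
        parts = PurePosixPath(raw).parts
        if parts and parts[0] in generated_dirs:
            offenders.append(raw)
    return offenders


def main(changed_paths):
    """Print one line per offending file and return a CI-style exit code."""
    offenders = edited_generated_files(changed_paths)
    for path in offenders:
        print(f"DO NOT EDIT: {path} is regenerated from the model")
    return 1 if offenders else 0
```

A check like this should run only on pull requests that are supposed to contain hand-written work. Commits that intentionally refresh the generated folder from a new export need a separate path, otherwise the check blocks regeneration itself.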

Prefer wrappers over edits

When you need custom behavior, wrap the generated code instead of changing it. Think "adapter layer" or "thin facade" sitting between your app and the generated parts.

For example, if you export an AppMaster backend and need a custom integration to a third-party inventory system, don't edit the generated endpoint handler. Instead:

- Keep the generated endpoint as-is.

- Add a custom service (in your custom area) that calls the inventory API.

- Have the generated logic call your service through a stable interface you own, such as a small package with an interface like InventoryClient.

Regeneration can replace the endpoint implementation, but your integration code stays intact. Only the interface boundary needs to remain stable.
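The shape of that boundary can be sketched in a few lines. The exported backend is Go, so treat the Python below as a language-neutral illustration of the pattern rather than code you would drop into the export. Only the name InventoryClient comes from the example above; the stock_level method, the FakeVendorInventory class, and the can_fulfil caller are hypothetical.

```python
from typing import Protocol


class InventoryClient(Protocol):
    """Stable boundary owned by your team; callers depend only on this."""

    def stock_level(self, sku: str) -> int: ...


class FakeVendorInventory:
    """Stand-in for a hand-written adapter in custom/ (the vendor API is hypothetical)."""

    def __init__(self, levels):
        self._levels = dict(levels)

    def stock_level(self, sku: str) -> int:
        # Unknown SKUs are reported as out of stock.
        return self._levels.get(sku, 0)


def can_fulfil(client: InventoryClient, sku: str, quantity: int) -> bool:
    """Example caller: it knows the interface, not the vendor behind it."""
    return client.stock_level(sku) >= quantity
```

Because the caller only sees the interface, the vendor-specific class can be rewritten or swapped for a test double without touching anything on the generated side.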

Use stable integration points whenever possible

Before writing custom code, check if you can attach behavior through stable hooks like APIs, webhooks, or platform modules. For example, AppMaster includes pre-built modules for Stripe payments and Telegram or email/SMS messaging. Using stable integration points reduces how often regeneration can surprise you.

Document the "do not edit" zones in one short page and enforce them with automation. Rules that live only in people's heads don't survive deadlines.

Repository structure that survives regeneration

A repo that survives regeneration makes one thing obvious at a glance: what is generated, what is owned by humans, and what is configuration. If someone can't tell in 10 seconds, overwrites and "mystery fixes" happen.

When you export from a regenerating platform like AppMaster, treat the export as a repeatable build artifact, not a one-time handoff.

A practical structure separates code by ownership and lifecycle:

- generated/ (or appmaster_generated/): everything that can be regenerated. No manual edits.

- custom/: all hand-written extensions, adapters, and glue code.

- config/: environment templates, deployment settings, secret placeholders (not real secrets).

- scripts/: automation like "regen + patch + test".

- docs/: a short rules page for the repo.

Naming conventions help when people are in a hurry. Use a consistent prefix for custom pieces (for example, custom_ or ext_) and mirror the generated layout only where it genuinely helps. If you feel tempted to touch a generated file "just this once," stop and move that change into custom/ or into an agreed extension point.

Branching should reflect the same separation. Many teams keep two kinds of work visible: model-driven changes (visual model updates that will regenerate code) and custom-code changes (extensions and integrations). Even in a single repository, requiring PR labels or branch naming like model/* and custom/* makes reviews clearer.
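Branch naming becomes more useful when a script enforces what each kind of branch may touch. This is a sketch under assumptions: the mapping of branch prefixes to folders below is one possible policy, not a rule from the platform, and the folder names follow the layout described earlier.

```python
# Assumed policy: which top-level folders each branch type may change.
ALLOWED_PREFIXES = {
    "model/": ("generated/",),
    "custom/": ("custom/", "config/", "scripts/", "docs/"),
}


def out_of_scope_paths(branch, changed_paths):
    """Return the paths a branch touched outside its allowed folders.

    Raises ValueError for branches that follow neither naming convention.
    """
    for prefix, allowed in ALLOWED_PREFIXES.items():
        if branch.startswith(prefix):
            # str.startswith accepts a tuple of candidate prefixes.
            return [p for p in changed_paths if not p.startswith(allowed)]
    raise ValueError(f"branch {branch!r} must start with model/ or custom/")
```

An empty result means the pull request stayed inside its lane; anything else is a prompt for the reviewer to ask why.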

For releases, make "fresh regeneration" non-negotiable. The release candidate should start by regenerating into generated/, reapplying any scripted patches, then running tests. If it can't be rebuilt from scratch, the repo is already drifting.

Step by step workflow to keep model and code aligned

Treat every export like a small release: regenerate, verify, reapply only what is safe, then lock it in with a clear record. This keeps the visual model as the source of truth while still allowing carefully controlled custom work.

A workflow that holds up well:

- Regenerate from the latest model: confirm the visual model is up to date (data schema, business logic, UI). Regenerate and export from that exact version.

- Do a clean build and quick smoke test: build from a clean state and run a basic "does it start" check. Hit a health endpoint for the backend and load the main screen for the web app.

- Reapply custom code only through approved extension points: avoid copying edits back into generated files. Put custom behavior in a separate module, wrapper, or hook designed to survive regeneration.

- Run automated checks and compare key outputs: run tests, then compare what matters: API contracts, database migrations, and quick UI checks of key screens.

- Tag the release and record what changed: write a short note separating model changes (schema, logic, UI) from custom changes (extensions, integrations, configs).

If something breaks after regeneration, fix it in the model first whenever possible. Choose custom code only when the model can't express the requirement, and keep that code isolated so the next regen doesn't erase it.
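The workflow above is easier to follow consistently when a script runs the steps in a fixed order and stops at the first failure. The sketch below shows only that control flow. The step callables are placeholders, since the actual export, build, and test commands depend on your setup.

```python
def run_release_steps(steps):
    """Run (name, callable) steps in order and stop at the first failure.

    Each callable returns a truthy value on success.
    Returns (ok, log), where log lists the steps that actually ran.
    """
    log = []
    for name, step in steps:
        ok = bool(step())
        log.append((name, "ok" if ok else "failed"))
        if not ok:
            return False, log
    return True, log


# Placeholder steps; replace each lambda with a call into your own tooling.
steps = [
    ("regenerate from latest model", lambda: True),
    ("clean build and smoke test", lambda: True),
    ("reapply custom code via extension points", lambda: True),
    ("automated checks and contract comparison", lambda: True),
    ("tag release and record changes", lambda: True),
]
ok, log = run_release_steps(steps)
```

Stopping early matters here: tagging a release after a failed smoke test would record a model version that never produced a working build.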

Governance rules: roles, approvals, and change control

If your platform can regenerate code (like AppMaster), governance is what prevents lost work. Without clear ownership and a simple approval path, teams edit whatever is closest, and regeneration turns into a recurring surprise.

Name a few owners. You don't need a committee, but you do need clarity.

- Model maintainer: owns the visual model and keeps it as the source of truth for data, APIs, and core logic.

- Custom code maintainer: owns hand-written extensions and the safe extension boundaries.

- Release owner: coordinates versioning, regeneration timing, and what goes to production.

Make reviews non-negotiable for risky areas. Any custom code that touches integrations (payments, messaging, external APIs) or security (auth, roles, secrets, data access) should require review by the custom code maintainer plus one additional reviewer. This is less about style and more about preventing drift that's painful to unwind.

For change control, use a small change request anyone can fill in. Keep it quick enough that people actually use it.

- What changed (model, generated code settings, or custom extension)

- Why it changed (user need or incident)

- Risk (what could break, who is affected)

- Rollback plan (how to undo safely)

- How to verify (one or two checks)
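If the change request lives in a tool rather than a document, the five fields map directly onto a small record with a completeness check. This is one possible shape; the class name, field names, and the list of accepted values for the first field are assumptions drawn from the bullets above.

```python
from dataclasses import dataclass, fields

CHANGE_KINDS = ("model", "generated code settings", "custom extension")


@dataclass
class ChangeRequest:
    what: str            # one of CHANGE_KINDS
    why: str
    risk: str
    rollback_plan: str
    how_to_verify: str

    def problems(self):
        """Return human-readable problems; an empty list means ready for review."""
        issues = [
            f"{f.name} is empty"
            for f in fields(self)
            if not getattr(self, f.name).strip()
        ]
        if self.what.strip() and self.what not in CHANGE_KINDS:
            issues.append(f"what must be one of {CHANGE_KINDS}")
        return issues
```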

Set a rule for urgent fixes. If a hotfix must be applied directly to exported code, schedule the work to recreate the same change in the visual model (or redesign the extension point) within a fixed window, such as 1 to 3 business days. That one rule often determines whether an exception stays temporary or becomes permanent drift.
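The fixed window is easy to compute when the hotfix is logged, so the follow-up task gets a real due date. A minimal sketch, assuming a Monday to Friday work week and ignoring public holidays:

```python
from datetime import date, timedelta


def add_business_days(start, days):
    """Return the date `days` business days after `start` (Mon-Fri, holidays ignored)."""
    current = start
    remaining = days
    while remaining > 0:
        current += timedelta(days=1)
        if current.weekday() < 5:  # 0-4 are Monday to Friday
            remaining -= 1
    return current


# A hotfix applied on Thursday 2024-03-07 with a 3-business-day window
# must be recreated in the model by Tuesday 2024-03-12.
deadline = add_business_days(date(2024, 3, 7), 3)
```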

Common mistakes that cause overwrites and drift

Most overwrite problems start as a reasonable shortcut: "I'll just change this one file." With a regenerating platform like AppMaster, that shortcut usually turns into rework because the next export regenerates the same files.

The patterns that create drift

The most common cause is editing generated code because it feels faster in the moment. It works until the next regeneration, when the patch disappears or conflicts with new output.

Another frequent issue is multiple people adding custom code without a clear boundary. If one team adds a "temporary" helper inside generated folders and another adds a different helper in the same area, you can no longer regenerate reliably or review changes cleanly.

Drift also happens when releases skip regeneration because it feels risky. Then the visual model changes, but production runs code from an old export. After a few cycles, nobody is sure what the app really does.

A quieter mistake is failing to record which model version produced which export. Without a simple tag or release note, you can't answer basic questions like "Is this API behavior coming from the model or a custom patch?"
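Recording that link does not need special tooling. As a sketch, a release script could write a small manifest next to each export. The field names and the version and tag formats used here are illustrative assumptions.

```python
import json
from datetime import date


def release_manifest(model_version, export_date, commit_tag):
    """Serialise the facts that tie an export to its model version as stable JSON."""
    record = {
        "model_version": model_version,
        "export_date": export_date.isoformat(),
        "commit_tag": commit_tag,
    }
    missing = [key for key, value in record.items() if not value]
    if missing:
        raise ValueError(f"missing fields: {missing}")
    return json.dumps(record, sort_keys=True, indent=2)
```

Committing the manifest with the export means anyone reading the repository history can see which model version a given build came from.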

A quick example

A developer notices a missing validation rule and edits a generated Go handler directly to block empty values. It passes tests and ships. Two weeks later, the team updates an AppMaster Business Process and exports again. The handler is regenerated, the validation is gone, and the bug returns.

Early warning signs to watch for:

- Custom commits landing inside generated directories

- No written rule for where extensions live

- "We can't regenerate this release" becoming normal

- Releases that don't note the model version used

- Fixes that exist only in code, not in the visual model

Quality checks that catch drift early

Treat every regeneration like a small release. You're not only checking that the app still runs. You're checking that the visual model (for example, your AppMaster Data Designer and Business Process Editor) still matches what your repo deploys.

Start with a minimal test suite that mirrors real user behavior. Keep it small so it runs on every change, but make sure it covers the flows that make money or cause support tickets. For an internal ops tool, that might be: sign in, create a record, approve it, and see it in a report.

A few focused checks that are easy to repeat:

- Smoke tests for the top 3 to 5 user flows (web and mobile if you ship both)

- Contract checks for key APIs (request/response shape) and critical integrations like Stripe or Telegram

- Diff review after export that focuses on custom folders, not generated areas

- A rollback drill: confirm you can redeploy the last known good build quickly

- Version logging: model version, export date, and the commit tag that was deployed

Contract checks catch "looks fine in UI" problems. Example: a regenerated endpoint still exists, but a field type changed from integer to string, breaking a downstream billing call.
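A contract check for that case can be as simple as comparing a saved description of the response shape with the current one. The sketch below assumes each contract is reduced to a flat mapping of field name to type name; how you extract that mapping (from an API description or a sample response) is up to your tooling, and the field names are made up for the example.

```python
def contract_diff(expected, actual):
    """Compare two {field: type_name} maps and describe every mismatch."""
    problems = []
    for field, expected_type in sorted(expected.items()):
        if field not in actual:
            problems.append(f"{field}: missing")
        elif actual[field] != expected_type:
            problems.append(f"{field}: expected {expected_type}, got {actual[field]}")
    for field in sorted(set(actual) - set(expected)):
        problems.append(f"{field}: unexpected new field")
    return problems


# Saved contract from the last good release vs. the regenerated endpoint.
expected = {"invoice_id": "string", "amount_cents": "integer"}
regenerated = {"invoice_id": "string", "amount_cents": "string"}
problems = contract_diff(expected, regenerated)
```

Failing the build on any non-empty result catches the type change before the billing call does.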

For diff review, keep a simple rule: if a file is in a generated directory, you don't hand-edit it. Reviewers should ignore noisy churn and focus on what you own (custom modules, adapters, integration wrappers).

Write down a rollback plan before you need it. If regeneration introduces a breaking change, you should know who can approve the rollback, where the last stable artifact is, and which model version produced it.

Example: adding a custom integration without losing it on regen

Say your team builds a customer portal in AppMaster but needs a custom messaging integration that isn't covered by built-in modules (for example, a niche SMS provider). You export the source code to add the provider SDK and handle a few edge cases.

The rule that prevents pain later is simple: keep the visual model as the source of truth for data, API endpoints, and the core flow. Put the custom provider code in an adapter layer that the generated code calls, but does not own.

A clean split looks like this:

- Visual model (AppMaster): database fields, API endpoints, authentication rules, and the business process that decides when to send a message

- Adapter layer (hand-written): the provider client, request signing, retries, and mapping provider errors into a small, stable set of app errors

- Thin boundary: one interface like SendMessage(to, text, metadata) that the business process triggers

Week to week, regeneration becomes boring, which is the goal. On Monday, a product change adds a new message type and a field in PostgreSQL. You update the AppMaster model and regenerate. The generated backend code changes, but the adapter layer does not. If the interface needs a new parameter, you change it once, then update the single call site at the agreed boundary.
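To make the adapter's job concrete, here is a minimal sketch of retries plus error mapping behind a single send function. It is written in Python to show the pattern only; in an exported Go backend the same structure would sit behind the SendMessage boundary. The provider exceptions, the result strings, and the retry count are all assumptions, since the real SDK defines its own errors.

```python
class ProviderTimeout(Exception):
    """Raised by the (hypothetical) provider SDK when a request times out."""


class ProviderRejected(Exception):
    """Raised by the (hypothetical) provider SDK for an invalid recipient."""


class MessageAdapter:
    """Hand-written adapter: callers only ever see send_message()."""

    def __init__(self, provider_send, max_attempts=3):
        self._provider_send = provider_send  # callable(to, text, metadata)
        self._max_attempts = max_attempts

    def send_message(self, to, text, metadata=None):
        """Return 'sent', 'invalid_recipient', or 'unavailable'."""
        for _ in range(self._max_attempts):
            try:
                self._provider_send(to, text, metadata or {})
                return "sent"
            except ProviderRejected:
                return "invalid_recipient"  # retrying will not help
            except ProviderTimeout:
                continue  # transient failure: try again
        return "unavailable"
```

Because the provider call is injected, the three cases that matter (happy path, timeout, invalid number) can be unit tested with plain functions instead of the real SDK.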

Reviews and tests help you avoid relying on tribal knowledge. A good minimum is:

- A check that no one edited generated folders directly

- Unit tests for the adapter (happy path, provider timeout, invalid number)

- An integration test that runs after regeneration and confirms the message is sent

Write a short integration card for the next person: what the adapter does, where it lives, how to rotate credentials, how to run tests, and what to change when the visual model adds new fields.

Next steps: a practical rollout plan (with a light tool choice note)

Start small and write it down. A one-page policy is enough if it answers two questions: what is allowed to change in the repo, and what must change in the visual model. Add a simple boundary diagram (even a screenshot) showing which folders are generated and which are yours.

Then pilot the workflow on one real feature. Pick something valuable but contained, like adding a webhook, a small admin screen, or a new approval step.

A practical rollout plan:

- Write the policy and boundary diagram, and store them next to the repo README.

- Choose one pilot feature and run it end to end: model change, export, review, deploy.

- Schedule a recurring regen drill (monthly works) where you regenerate on purpose and confirm nothing important gets overwritten.

- Add a simple change gate: no merge if the visual model change isn't referenced (ticket, note, or commit message).

- After two successful drills, apply the same rules to the next team and the next app.
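The change gate in the plan above can start as a simple text check. This sketch assumes a team convention where the commit message carries a line of the form "Model: reference". That convention, and the function name, are made up for illustration; a ticket ID pattern would work just as well.

```python
import re

# Assumed convention: a line starting with "Model:" followed by a reference.
MODEL_REF = re.compile(r"^Model:[ \t]*\S+", re.MULTILINE | re.IGNORECASE)


def references_model_change(commit_message):
    """True if the message has a 'Model: ...' line with a non-empty reference."""
    return bool(MODEL_REF.search(commit_message))
```

The check proves only that someone wrote a reference down, not that the reference is correct, so it supports review rather than replacing it.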

Tool choice note: if you use AppMaster, treat the visual model as the default place for data, APIs, and business logic. Use exported code for deployment needs (your cloud, your policies) or carefully controlled extensions that live in clearly separated areas.

If you're building with AppMaster on appmaster.io, a good habit is to practice on a small no-code project first: create the core application logic in the visual editors, export, regenerate, and prove your boundaries hold before you scale to bigger systems.